Daniel Miessler's Blog, page 7

August 27, 2024

UL NO. 447: Sam Curry on Bug Bounty Careers, Slack Data Exfil, The Work Lie

SECURITY | AI | MEANING :: Unsupervised Learning is a stream of original ideas, story analysis, tooling, and mental models designed to help humans lead successful and meaningful lives in a world full of AI.

NOTES

Ok, tons of content this week—super excited for this episode!

Going all-text this time—callback to old-school

Upcoming Speaking: Snyk’s conference in October, Cyberstorm in Switzerland in October, Black Hat in Riyadh in November

The one AI tool you should be trying out from the last couple of weeks is CursorAI. Lots of people are switching to it from Copilot. The big feature seems to be an editor that understands your full codebase.

Ok, let’s go…

MY WORK

My new essay on why layoffs, hiring, the job market, and work in general just sucks right now. One of my top 20 essays ever. READ IT

The new way I explain AI—and specifically LLMs—to people. READ IT

SECURITY

CrowdStrike's 2024 Threat Hunting Report reveals that North Korean operatives, posing as job applicants, have infiltrated over 100 U.S.-based companies in sectors like aerospace, defense, retail, and tech. Not much coverage of Blue Friday. MORE

State-linked Chinese entities are using cloud services from Amazon and its rivals to access advanced U.S. chips and AI capabilities they can't get otherwise. MORE

Cisco has patched multiple vulnerabilities, including a high-severity bug (CVE-2024-20375) in its Unified Communications Manager products. This flaw, reported by the NSA, affects SIP call processing and can be exploited remotely to cause a denial-of-service condition. MORE

Sponsor

Is Foreign Software Running in Your Environment?

Shadow I.T., foreign software, and even unpatched vulnerabilities could be lurking in your corporate mandated devices. To resolve this, ThreatLocker® is offering free I.T. security health reports to organizations looking to harden their environment and mitigate the risks of potential nation-state attacks, all on a single pane of glass.

ThreatLocker’s free report audits what is occurring in your environment, including:

Information about executables, scripts, and libraries.

Files that have been accessed, changed, or deleted.

All network activity, including source and destination IP addresses, port numbers, users, and processes.

Identify and prevent installed software from communicating with entities in Russia, China, or other threat actors.

threatlocker.com/pages/software-audit

Two U.S. lawmakers are urging the Commerce Department to investigate cybersecurity risks associated with TP-Link routers, citing vulnerabilities and potential data sharing with the Chinese government. MORE

Quarkslab found a major backdoor in RFID cards made by Shanghai Fudan Microelectronics, one of China's top chip manufacturers. This backdoor allows for the instant cloning of contactless smart cards used globally to open office doors and hotel rooms. MORE

The AI Risk Repository now lists over 700 potential risks that advanced AI systems could pose, making it the most comprehensive source for understanding AI-related issues. MORE

Sponsor

13 Cybersecurity Tools. One Platform. Built for IT Teams

There are thousands of cybersecurity point solutions. Many of them are good—but managing more than a dozen tools, disparate reports, invoices, trainings, etc. is challenging for small IT teams.

We’ve built a platform that does assessments, testing, awareness training, and 24/7/365 managed security all in a single pane of glass. Because every company deserves robust cybersecurity.

Researchers found a way to exfiltrate data from Slack's AI by using indirect prompt injection. MORE

The U.S. Navy is rolling out Starlink on its warships to provide high-speed, reliable internet connections, significantly improving operational capabilities and crew morale. MORE

AI / TECH

Anthropic has published the system prompts for its latest AI models, including Claude 3 Opus, Claude 3.5 Sonnet, and Claude 3 Haiku. MORE

AGIBOT—a Chinese company—just unveiled a fleet of five advanced humanoid robots to compete directly with Tesla’s Optimus bot. These models, including the flagship Yuanzheng A2, are designed for tasks ranging from household chores to industrial operations and will start shipping by the end of 2024. I’ll be waiting for an American option. MORE

💡I am anti-Chinese-imports for both robotaxis and humanoid robots. The market is too big, China moves too fast, and we need to give American companies (Elon) time to compete.

I don’t like this take. I don’t like slowing pressure from the outside, and if it were India or Ireland, I’d be ok with applying that pressure. But not China. They’re too obviously a malicious actor to allow them to dominate these new markets.

Speaking of that, Tesla is hiring people to train its Optimus humanoid robot by wearing motion capture suits and mimicking actions it will perform. The job, listed as “Data Collection Operator,” pays up to $48 per hour and involves walking for over seven hours a day while carrying up to 30 pounds and wearing a VR headset. MORE

Waymo is looking to launch a subscription service called "Waymo Teen" that would allow teenagers to hail robotaxis solo, with prices ranging from $150 to $250 per month for up to 16 rides. MORE

An AI scientist developed by the University of British Columbia, Oxford, and Sakana AI is creating its own machine learning experiments and running them autonomously. This is where most of the innovation from AI will come from. Not just in implementing tasks, but in doing new research. I talked about it here. MORE

Victor Miller, a mayoral candidate in Wyoming’s capital city, has vowed to let his customized ChatGPT named Vic (Virtual Integrated Citizen) help run the local government if elected. MORE

💡I’m working on how to articulate a political platform for any level of office using Substrate.

You basically define exactly what you want to do, and it branches out with all the Problems, Strategies, KPIs, etc., all in a single platform file that people’s AIs can evaluate and compare to their own beliefs and goals.

I think this is where leadership is heading. Transparent descriptions of vision, strategy, and outcome measurement.

Sean Ammirati, a professor at Carnegie Mellon, noticed a massive up-leveling of progress in his entrepreneurship class this year thanks to generative AI tools like ChatGPT, GitHub Copilot, and FlowiseAI. Students used these tools for marketing, coding, product development, and recruiting early customers, resulting in venture capitalists flocking to the campus. MORE

💡This is what I’ve been talking about with AI Augmentation. If you were competing with a 95/100 person before, because they went to CMU—well, now you’re competing with a 130/100 because they went to CMU AND they use AI for everything.

—

I read better articles because of AI

Therefore I get better ideas because of AI

Therefore I build better stuff because of AI

Etc.

And I do this all faster than was possible before

Upgrade or lose. Those are your options.

GM is cutting over 1,000 software engineers to streamline its software and services organization. Streamlining by cutting out 1,000 devs? The way I read this is “Start from scratch and only hire A’s from now on.” See: all of my other posts about companies only wanting Killer Cult Members from now on. MORE

Meta is using AI to streamline system reliability investigations with a new root cause analysis system. This system combines heuristic-based retrieval and large language model (LLM)-based ranking, achieving 42% accuracy in identifying root causes at the investigation's start. MORE

AI companies are shifting focus from creating god-like AI to building practical products. Gasp! This isn’t a bubble-pop; it’s just natural maturity of a thing that came out 13 minutes ago. People are still figuring this stuff out, and it’s still day 1 in terms of AI capabilities. MORE

Canada is slapping a 100% import tariff on China-made electric vehicles starting October 1, following similar moves by the US and EU. MORE

Former Google CEO Eric Schmidt predicts rapid advancements in AI, with the potential to create significant apps like TikTok competitors in minutes within the next few years. MORE

Anthropic Claude 3.5 can now create iCalendar files from images, and Greg's Ramblings shows how you can use this feature to generate calendar entries just by snapping a photo of a schedule or event flyer. MORE

AWS CEO Matt Garman predicts that within the next 24 months, most developers might not be coding anymore due to AI advancements. He emphasizes that the real skill will shift towards innovation and understanding customer needs rather than writing code. MORE

Chinese companies have ramped up their imports of chip production equipment, spending nearly $26 billion in the first seven months of the year. They need to equip 18 new fabs expected to start operations in 2024 and are seriously worried about export controls. MORE

HUMANS

Cisco is laying off 7% of its workforce, which is around 5,900 employees, as it pivots towards AI and cybersecurity. The company is investing $1 billion in tech startups like Cohere, Mistral, and Scale, and has partnered with Nvidia to develop AI infrastructure. MORE

McKinsey's new study reveals that business leaders are missing the mark on why employees are quitting. They say companies are focusing on transactional perks like compensation and flexibility, but employees are actually seeking meaning, belonging, holistic care, and appreciation at work. Couldn’t have been better timed with this week’s Work essay. MORE

Twenty-four brain samples collected in early 2024 measured on average about 0.5% plastic by weight. MORE

Gallup has released its 2023 Global Emotions report, which measures the world's emotional temperature through the Positive Experience Index and Negative Experience Index. The data comes from surveys conducted in 142 countries, using a mix of telephone, face-to-face, and some web surveys, with about 1,000 respondents per country. MORE

💡Exceedingly cool research and data and visualizations! MORE

Nonsmokers who avoided the sun had a life expectancy similar to smokers who got the most sun, according to a study of nearly 30,000 Swedish women over 20 years. The research suggests that avoiding the sun is as risky as smoking. This is the type of thing that needs way more research, but damn. More sun for me, regardless. It’s a massive boost for me in the morning. MORE

Stanford researchers have found that blocking the kynurenine pathway in the brain can reverse the metabolic disruptions caused by Alzheimer’s disease, improving cognitive functions in mice. I’m starting to feel like we’re about to make massive progress on both Alzheimer’s and Cancer, and it’s making me want to invest in 2-3 of the top drug companies. MORE

Using air purifiers in two Helsinki daycare centers reduced kids' sick days by about 30%, according to preliminary findings from the E3 Pandemic Response study. The research, led by Enni Sanmark from HUS Helsinki University Hospital, aims to see if air purification can also cut down on stomach ailments. MORE

University of Missouri scientists have developed a liquid-based solution that removes over 98% of nanoplastics from water. It uses natural, water-repelling solvents to absorb plastic particles, which can then be easily separated and removed. I expect to see a lot of similar products soon. I feel like microplastics might be the new health scare. Not sure if that’s justified or not. Can’t wait for the Huberman episode. MORE

Eli Lilly's weight loss drug tirzepatide, found in Zepbound and Mounjaro, reduced the risk of developing Type 2 diabetes by 94% in obese or overweight adults with prediabetes, according to a long-term study. Dayum. 94%. MORE

Apple Podcasts is losing ground to YouTube and Spotify, with a recent study showing YouTube now leads in podcast consumption at 31%, followed by Spotify at 21%, and Apple Podcasts trailing at 12%. MORE

IDEAS

Damn, just thought of a super cool use case for Fabric + Telos + Substrate.

1. Maintain a list of everything I've been REALLY wrong about. (Already working on this list)

2. Write a Fabric pattern that looks at that list and identifies key ways that I miss.

3. Recommend.

— ᴅᴀɴɪᴇʟ ᴍɪᴇssʟᴇʀ ⚙️ (@DanielMiessler)

8:39 PM • Aug 22, 2024

DISCOVERY

ffufai uses ffuf and AI to find more web hacking targets, by Joseph Thacker. MORE

gofuzz.py recursively looks at JavaScript files and finds endpoints that can be tested. MORE

analyze_interviewer_techniques is a new Fabric pattern that will capture the ‘je ne sais quoi’ of a given interviewer. I’ve been using it on Dwarkesh and Tyler Cowen. MORE

harness is a quick tool I put together to test the efficacy of one prompt vs. another. It runs both against an input and then scores the output using a third, objective prompt that rates how well they followed the plot. MORE

State and time are the same thing — Hillel Wayne explores the concept that state and time are interchangeable. MORE

Don’t force yourself to become a bug bounty hunter, by Sam Curry. MORE

67 years of old Radio Shack catalogs have been scanned and are now available online. MORE

mdrss is a Go-based tool that converts markdown files to RSS feeds. You can write articles in a local folder, and it automatically formats them into an RSS-compliant XML file, handling publication dates and categories. MORE

No "Hello", No "Quick Call", and No Meetings Without an Agenda — This blog post highlights common remote work mistakes like starting conversations with "Hi" and waiting for a response, asking for "quick calls" without context, and scheduling meetings without agendas. 😡💪 MORE

Roger Penrose's book "The Emperor's New Mind" explores the relationship between the human mind and computers, arguing that human consciousness cannot be replicated by machines. MORE

A Collection of Free Public APIs That Are Tested Daily MORE

RECOMMENDATION OF THE WEEK

Take the time to read this week’s main essay—We’ve Been Lied To About Work.

But more than just reading it, think about what it means if I’m right. Think about what that means for you and your career, but also all the young people you know and care about.

I didn’t talk about it in that piece, but the solution is the transition to a Human 3.0 mindset, which—in this context—means taking the same skills that you’re good at and that you do for someone else, and doing that for yourself.

More help is coming from me on how exactly to do that, but start thinking about it now.

APHORISM OF THE WEEK

Powered by beehiiv

August 26, 2024

World Model + Next Token Prediction = Answer Prediction

Table of Contents

A new way to explain LLM-based AI

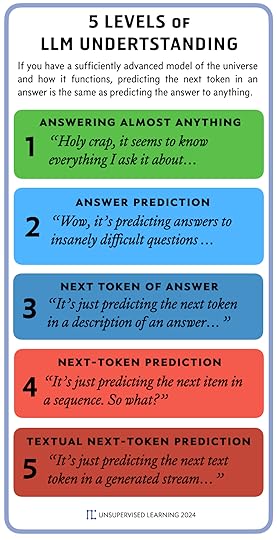

The 5 Levels of LLM Understanding

Answers as descriptions of the world

Human vs. absolute omniscience

The argument in deductive form

A new way to explain LLM-based AI

Thanks to Eliezer Yudkowsky, I just found my new favorite way to explain LLMs—and why they’re so strange and extraordinary.

Here’s the post that sent me down this path.

"it just predicts the next token" literally any well-posed problem is isomorphic to 'predict the next token of the answer' and literally anyone with a grasp of undergraduate compsci is supposed to see that without being told.

— Eliezer Yudkowsky ⏹️ (@ESYudkowsky)

2:17 PM • Aug 23, 2024

And here’s the bit that got me…

well-posed problem = prediction of next token of answer

Like—I knew that. And I have been explaining the power of LLMs similarly for over two years now. But it never occurred to me to explain it in this way. I absolutely love it.

Typically, when you’re trying to explain how LLMs can be so powerful, the narrative you’ll get from most is…

There’s no magic in LLMs. Ultimately, it’s nothing but next token prediction.

(victory pose)

A standard AI skeptic argument

The problem with this argument—which Eliezer points out so beautifully—is that—with an adequate understanding of the world—there’s not much daylight between next token prediction and answer prediction.
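One way to see why there's so little daylight between the two: in the toy sketch below, a tiny "world model" that only understands integer addition is wrapped in a next-token interface, and repeatedly asking for the next token yields the full answer. This is an illustration only, not how a real LLM works internally. The names `solve`, `predict_next_token`, and `answer` are hypothetical, invented for the example, and each "token" is simply one character.

```python
def solve(question: str) -> str:
    """Hypothetical toy world model: it only understands 'a+b=' questions."""
    left, right = question.rstrip("=").split("+")
    return str(int(left) + int(right))


def predict_next_token(question: str, answer_so_far: str) -> str:
    """Return the next character of the answer, or "" when the answer is complete."""
    full_answer = solve(question)
    if not full_answer.startswith(answer_so_far):
        raise ValueError("answer_so_far is not a prefix of the correct answer")
    position = len(answer_so_far)
    return full_answer[position:position + 1]


def answer(question: str, max_tokens: int = 32) -> str:
    """Build a complete answer using nothing but repeated next-token prediction."""
    output = ""
    for _ in range(max_tokens):
        token = predict_next_token(question, output)
        if token == "":  # the predictor signals that the answer is finished
            break
        output += token
    return output


if __name__ == "__main__":
    print(answer("17+25="))  # prints 42
```

The loop in `answer` knows nothing about arithmetic. Everything it gets right comes from the world model behind the predictor, which is the point: improve the model and the same token-by-token loop produces better answers.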

So, here’s my new way of responding to the “just token prediction” argument, using 5 levels of jargon removal.

The 5 Levels of LLM Understanding

TIER 1: “LLMs just predict the next token in text.”

TIER 2: “LLMs just predict next tokens.”

TIER 3: “LLMs predict the next part of answers.”

TIER 4: “LLMs provide answers to really hard questions.”

TIER 5: “HOLY CRAP IT KNOWS EVERYTHING.”

That resonates with me, but here’s another way to think about it.

Answers as descriptions of the world

If you understand the world well enough to predict the next token of an answer, that means you have answers. 🤔

Or:

The better an LLM understands reality and can describe that reality in text, the more “predicting the next token” becomes knowing the answer to everything.

But “everything” is a lot, and we’re obviously never going to hit that (see infinity and the limits of math/physics, etc.).

So the question is: What’s a “good enough” model of the universe—for a human context—to be effectively everything?

Human vs. absolute omniscience

If you’re tracking with me, here’s where we’re at—as a deductive argument.1

If you have a perfect model of the universe, and you can predict the next token of an answer about anything in that universe, then you know everything.

But we don’t have a perfect model of the universe.

Therefore—no AI (or any other known system) can know everything.

100% agreed.

But the human standard for knowing everything isn’t actually knowing everything. The bar is much lower than that.

The human standard isn’t:

Give me the location of every molecule in the universe

Predict the exact number of raindrops that will hit my porch when it rains next

Predict the exact price of NVIDIA stock at 3:14 PM EST on October 12, 2029.

Tell me how many alien species in the universe have more than a 100 IQ equivalent.

These are—as far as we know of physics—completely impossible to know because of the limits of the physical, atom-based, math-based world. So we can’t ever know “everything”, or really anything close to it.

But take that off the table. It’s impossible, and it’s not what we’re talking about.

What I’m talking about is human things. Things like:

What makes a good society?

Is this policy likely to increase or decrease suffering in the world?

How did this law affect the outcomes of the people it was supposed to help?

These questions are big. They’re huge. But there’s an “everything” version of answering them (which we’ve already established is impossible), and then there’s the “good enough” version of answering them—at a human level.

I believe LLM-based AI will soon have an adequately deep understanding of the things that matter to humans—such as science, physics, materials, biology, laws, policies, therapy, human psychology, crime rates, survey data, etc.—that we will be able to answer many of our most significant human questions.

Things like:

What causes aging and how do we prevent or treat it?

What causes cancer and how do we prevent or treat it?

What is the ideal structure of government for this particular town, city, country, and what steps should we take to implement it?

For this given organization, how can they best maximize their effectiveness?

For this given family, what steps should they take to maximize the chances of their kids growing up as happy, healthy, and productive members of society?

How does one pursue meaning in their life?

Those are big questions—and they do require a ton of knowledge and a very complex model of the universe—but I think they’re tractable. They’re nowhere near “everything”, and thus don’t require anywhere near a full model of the universe.

In other words, the bar for practical, human-level “omniscience” may be remarkably low, and I believe LLMs are very much on the path to getting there.

The argument in deductive form

Here’s the deductive form of this argument.

If you have a perfect model of the universe, and you can predict the next token of an answer about anything in that universe, then you know everything.

But we don’t have a perfect model of the universe.

Therefore—no AI (or any other known system) can know everything.

However, the human standard for “everything” or “practical omniscience” is nowhere near this impossible standard.

Many of the most important questions to humans that have traditionally been associated with something being “godlike”, e.g., how to run a society, how to pursue meaning in the universe, etc., can be answered sufficiently well using AI world models that we can actually build.

Therefore, humans may soon be able to build “practically omniscient” AI for most of the types of problems we care about as a species.2

🤯

Guess what? We do it too…

Finally, there’s another point that’s worth mentioning here, which is that every scientific indication we have points to humans being word predictors too.

Try this experiment right now, in your head: Think of your favorite 10 restaurants.

…

As you start forming that list in your brain—watch the stuff that starts coming back. Think about the fact that you’re receiving a flash of words, thoughts, images, memories, etc., from the black box of your memory and experience.

Notice that if you did that same exercise two hours from now—or two days from now—the flashes and thoughts you’d have would be different, and you might even come up with a different list, or put the list in a different order.

Meanwhile, the way this works is even less understood than LLMs! At some level, we too are “just” next-token predictors, and it doesn’t make us any less interesting or wonderful.

Summary

It all starts with the sentence “next token prediction is isomorphic with answer prediction”.

This means “next token prediction” is actually an extraordinary capability—more like splitting the atom than a parlor trick.

Humans seem to be doing something very similar, and you can watch it happening in real-time if you pay attention to your own thoughts or speech.

But the quality of a token predictor comes down to the complexity/quality of the model of the universe it’s based on.

And because we can never have a perfect AI model of the universe, we can never have truly omniscient AI.

Fortunately, to be considered “godlike” to humans—we don’t need a perfect model of the universe. We only need enough model complexity to be able to answer the questions that matter most to us.

We might be getting close.

1 A deductive argument is where you must accept the conclusion if you accept the premises above, e.g., 1) All rocks lack a heartbeat, 2) This is a rock. 3) Therefore, this lacks a heartbeat.

2 Thanks to Jai Patel, Joseph Thacker, and Gabriel Bernadette-Shapiro for talking through, shaping, and contributing to this piece. They’re my go-to friends for discussing lots of AI topics—but especially deeper stuff like this.

The End of Work

Table of Contents

What I think is actually happening

3. What comes after will be much better

The feeling

If you’re like me, you’ve had this strange, uneasy feeling about the job market1 for a few years now.

It’s like a splinter in the brain. We know something is deeply broken about the whole system, but it’s impossible to grasp or articulate.

I’m writing this because I think I figured it out.

People talk a lot about how AI is going to replace millions of jobs, and how it will also create many more. I think that’s true, but I think there’s a lot more going on here than just AI.

The symptoms

First, why do we even think there’s a problem? For me, it starts with noticing how bad—and how often—work just completely sucks. Like the whole thing—finding work, doing work, being stressed about not losing work. Etc.2

Most companies, departments, and teams are horribly inefficient, have very little direction, are full of wasted time and effort, and are poorly run. It’s constant meetings to talk about the latest corporate fuckery, which only prevents you from doing what you should be doing. It’s change for the sake of change. And when you get excited about a way to fix things, either nobody listens, or they only pretend to before failing to implement it.

Work tends to be a series of disappointments for most people, punctuated by a few rays of light. I was curious about how many people felt this way—not wanting to do a full essay on just my own opinions—and found this from Gallup about the quiet quitting phenomenon.

Gallup: Is Quiet Quitting Real?, 2023

The overall decline was especially related to clarity of expectations, opportunities to learn and grow, feeling cared about, and a connection to the organization's mission or purpose—signaling a growing disconnect between employees and their employers.

Many quiet quitters fit Gallup's definition of being "not engaged" at work—people who do the minimum required and are psychologically detached from their job. This describes half of the U.S. workforce.

Is Quiet Quitting Real (Gallup)

I’m sure there are lots of factors going into Quiet Quitting, but I think this feeling I’m talking about is one of them.

What I think is actually happening

And that brings me to what I think the real issues are, which are a lot deeper and more unsettling than just, “AI is taking the jobs.”

The ideal number of employees in any company is zero. If a company could run and make money using no people, then that is exactly what it should do. We never think about this or talk about this because it’s very strange and uncomfortable, but it is true. The purpose of companies is not to employ people, it is to provide a product or service in return for money.

Because of that, there is a constant downward pressure on anybody who is employed. It is not a specific pressure from a specific person or department. It is simply a fact of business reality that manifests itself in various ways throughout an organization over time. We have to stop thinking of this as a malicious thing where we should be employed, and they are trying to get rid of us. The truth is exactly the opposite.

Nobody owes anybody a job. The only reason anyone has one is because there was a problem at some point in that business that required a human to do some part of the work. Building on that, if that ever becomes not the case, for a particular person or team or department of human employees, the natural next action is to get rid of them. Again, not because business owners or managers are bad people or anything like that. We need to stop injecting morality into this. Businesses simply should have as few employees, and really as few expenses of any kind, as possible.

A good way to think about this is to look at a list of software products your company pays for. Let’s say your company pays for 215 software products that cost it $420,000 a year to own and use. Nobody would object to somebody looking at that list of software, finding redundancies, and canceling those licenses. That is simply work that is being done by other software, or is not required anymore, so it would be stupid for the business not to cancel those licenses or let them lapse.

It is exactly the same with humans, and no matter what you read or hear, I believe this is the main reason we are seeing disruption in job markets today. I think more and more businesses are seeing themselves as money in and money out, and are seeing human workers as being very expensive and generally not very good at what they do. This is not necessarily because of individual workers but because human organizations and communication are so inefficient and wasteful. So basically, companies are realizing that they are spending millions—or hundreds of millions—of dollars per year on human talent, and they’re realizing it’s not worth it.

So that is 2 pieces: 1) ZERO is the optimum number of employees for any company, and 2) companies are realizing that they’re paying way too much for giant workforces that are not producing near the value being paid. Forgetting any modern technological innovation, these two things combined produce extreme downward pressure on the workforce. It adds pressure, stress, drama, and all sorts of negativity to the practice of finding a job, getting a job, keeping a job, working with coworkers, going through organizational changes, and everything else that goes with being a regular employee. It just basically fucking sucks. And the reason it sucks is because companies ultimately wish that you didn’t exist in the first place. We have forgotten that—or never learned it—and that needs to change.

Now let’s add AI, which if you’ve read any of the stuff I’ve been writing, you know is, in the context of business, a technology for replacing the human intellectual work tasks that make up someone’s job.

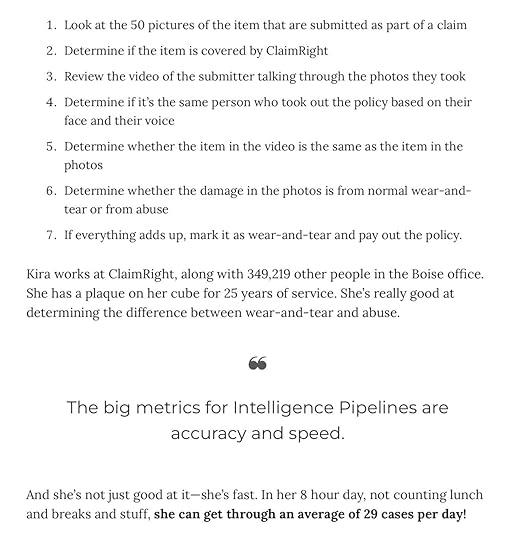

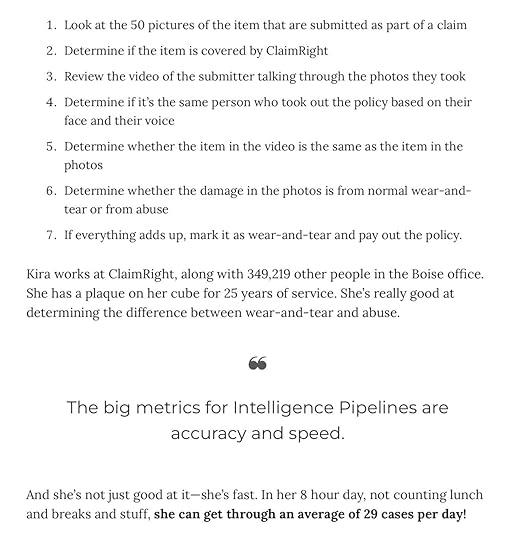

Here’s a good example from a recent piece on this topic.

From ‘You’ve Been Thinking About AI All Wrong’

What this example shows is a workflow that a human worker does today—just like millions of similar workflows—but that AI will soon be able to do instead.

It’s just steps, like we can see further down in the piece.

From ‘You’ve Been Thinking About AI All Wrong’

So now we have 3 pieces. The ideal number of employees is zero, companies are extremely unhappy with their current workforces, and just now—starting in 2023 and 2024—it is actually becoming possible to replace human intelligence tasks with technology.

You have to see where this is going. It is not moving towards a few jobs being removed and a few jobs being added. It’s not moving towards some gentle shifts in workforce dynamics or euphemisms like that. No, we are talking about fundamental change. Now for the main point of this piece.

The entire concept of work that we have had for thousands of years was a temporary model that was required to solve a temporary problem. Namely, people who are trying to build or sell something that required work they were unable to do by themselves.

Read that again.

The only reason anybody has a job is because some people are builders and creators, and they cannot do the entire job themselves.

That work—which is required to produce those products and services—is the reason people have 9-5 jobs. This is the reason the entire economy works the way it does. Those builders/creators then hire people, who they have to pay, and those people spend that money on things in the economy. And that is the system we are all used to.

Well…

This system goes away when builders and creators can make things by themselves. Which is precisely what AI is about to enable.

So, here’s where we are.

The ideal number of employees for a company is zero.

The reason companies had employees in the past is ONLY because the founders couldn’t deliver their product/service without human workers.

Companies and society have sort of forgotten this over the past decades and it’s been kind of assumed that all companies should have these large workforces, because it’s the job of companies to provide good jobs to society.

This hasn’t been working for companies, and company leaders are now noticing that they’re not getting near the value they should be from most employees and teams.

So there’s already this realization sinking in, and then we are getting AI at the exact same time.

This means at the exact time that company leaders are looking very skeptically at their human resources spending, they’re being presented with an alternative.

OK, so maybe you’re thinking:

Holy crap—he’s right.

This is a horrible problem, and we are all screwed. What do I even do?

Yes, and no. I have three things to offer here that should make you feel somewhat better.

3 reasons for hope

But it’s not all bad. I have 3 reasons for optimism.

1. Those jobs sucked anyway

How many people do you know who work regular 9 to 5 jobs in a knowledge work environment who look forward to Monday? How many people, if you really stood back and looked at your life, think it’s good to spend most of your waking moments getting ready to work, dealing with dumbass work shit, all fucking day, and then trying to destress from that day, just so you can actually enjoy the few hours you have left to live your actual life?

All that so you can hopefully make it to Friday so you can have two days where you hopefully don’t have to think about that hellscape you call work.

Is that the way humans should live on their home planet? If advanced and benign aliens came and visited, and interviewed us, would they not see that as a primitive state of being? Of course they would.

Bullshit Jobs, by David Graeber

The thing that we are about to lose is not something we should cry over. We should be worried because losing these jobs will be massively disruptive, and it’s stressful as hell to think about a completely changed future. But these Bullshit Jobs themselves are not something to cherish and remember.

2. Even fast things go slow

This transition will simultaneously happen very fast, but also pretty slowly. Even if there is advanced AGI in 2025 (which would be very fast), it still takes time for new technology to enter into companies and fully replace previous technology or humans.

So it will take a while, and that’s not even taking into account likely legislation that will slow it down even further based on how disruptive it is. So it’s not like half of the workforce will suddenly not have a job in 2026. It will be very fast, but not that fast.

3. What comes after will be much better

And finally—and best of all—what we will be left with afterwards, assuming we survive, will be a much better way to live.

That same AI that took our dumbass jobs away also has the potential to produce extraordinary abundance for humanity, freeing us up to use our days being human rather than bad biological precursors to AI workers.

We weren’t supposed to be moving paperwork, and sorting spreadsheets, and sending meeting invites, and writing computer code. It’s not what we were supposed to be doing.

What we are supposed to be doing is building and creating things for each other. Things that make each other’s lives better, and richer, and more meaningful.

And that is exactly what we will do on the other side of all of this. I obviously don’t know our chances of making it to this other side, or if/when it happens, exactly how that will play out. That is impossible to know, but what I can tell you is that I am all in on that future, because it doesn’t make sense to me to live any other way.

Sure, the disruption might tear us apart and send us back to the Bronze Age. That’s possible too, but I choose to believe that we will make it out of this. We’ll get out by getting through. And we’ll emerge on the other side better for it.

Summary

The primary reason we’re seeing all this disruption in the job market is because we’ve been part of a mass delusion about the very nature of work.

We told ourselves that millions of corporate workforce jobs—that pay good salaries, have good benefits, and allow you to save for retirement—were somehow a natural feature of the universe.

In fact, that entire paradigm was just a temporary feature of our civilization, caused by builders and creators not being able to do the work required by themselves. And that’s going away.

But it’s ok.

Most of the jobs sucked anyway, and they took up most of the daily waking hours we were supposed to be spending with family and friends.

Plus even if this transition happens really fast, it still won’t be overnight. Big things take a while.

And most importantly—what waits for us on the other side is a better way to live. A more human way to live—where we identify as individuals rather than corporate workers and exchange value and meaning as part of a new human-centered economy.

My purpose in writing this is to give an alternative—and hopefully far more satisfying—explanation of the feelings you might’ve been feeling for a very long time. And to give you both some warning—and some hope—with which to move forward.

I’ve oriented my life—since the end of 2022—around thinking about this problem, providing ideas and frameworks around it, and have written hundreds of articles about the problem and how to prepare for it. But rather than give the standard “subscribe to my newsletter” response, I would just say that I’m easy to find.

Connect here and we can continue the conversation. Website | X | Newsletter | Community | LinkedIn

We are going to get through this, and it will be much better once we do.

🫶

1 I’m talking about the knowledge work job market, like IT, etc., not physical or professional work, although I do think they’ll be affected soon as well.

2 I’m specifically speaking of the last few years, say, since 2020.

Powered by beehiiv

We've Been Lied To About Work


The feeling

If you’re like me, you’ve had this strange, uneasy feeling about the job market¹ for a few years now.

The feeling is like a splinter in the brain—like something is deeply broken about the whole system, but I couldn’t grasp it or articulate it.

I’m writing this because I think I figured it out.

People talk a lot about how AI is going to replace millions of jobs, and how it will also create many more. I think that’s true, but I think there’s a lot more going on here than just AI. I think AI is an accelerant to all of this, but not the main issue.

I think the main factor is so elusive and depressing that it’s hard to even talk about, which is why we don’t.

The symptoms

First, the symptoms. It starts with noticing how bad—and how often—work just completely sucks. Like the whole thing. Finding work. Doing work. Being stressed about not losing work. Etc.²

Most companies, departments, and teams are horribly inefficient, have very little direction, are full of wasted time and effort, and are poorly run. It’s constant meetings to talk about the latest corporate fuckery, which only prevents you from doing what you should be doing. It’s change for the sake of change. And when you get excited about a way to fix things, either nobody listens, or they only pretend to before failing to implement it.

So work tends to be a series of disappointments for most people, punctuated by a few rays of light.

I was curious about how many people felt this way, not wanting to do a full essay on just my own opinions, and found this from Gallup about the quiet quitting phenomenon.

Gallup: Is Quiet Quitting Real?, 2023

The overall decline was especially related to clarity of expectations, opportunities to learn and grow, feeling cared about, and a connection to the organization's mission or purpose—signaling a growing disconnect between employees and their employers.

Many quiet quitters fit Gallup's definition of being "not engaged" at work—people who do the minimum required and are psychologically detached from their job. This describes half of the U.S. workforce.

Is Quiet Quitting Real (Gallup)

I’m sure there are lots of factors going into Quiet Quitting, but I think this feeling I’m talking about is one of them.

What I think is actually happening

And that brings me to what I think the real issues are. They’re not pleasant, and might be rather jarring, but it’s time to have the conversation.

Here’s what I think the main problems are that are causing all this, and that are much bigger than AI.

The ideal number of employees in any company is zero. If a company could run and make money using no people, then that is exactly what it should do. We never think about this or talk about this because it’s very strange and uncomfortable, but it is true. The purpose of companies is not to employ people, it is to provide a product or service in return for money.

Because of that, there is a constant downward pressure on anybody who is employed. It is not a specific pressure from a specific person or department. It is simply a fact of business reality that manifests itself in various ways throughout an organization over time. We have to stop thinking of this as a malicious thing where we should be employed, and they are trying to get rid of us. The truth is exactly the opposite.

Nobody owes anybody a job. The only reason anyone has one is because there was a problem at some point in that business that required a human to do some part of the work. Building on that, if that ever stops being the case for a particular person, team, or department, the natural next action is to get rid of them. Again, not because business owners or managers are bad people or anything like that. We need to stop injecting morality into this. Businesses should simply have as few employees, and as little of any other expense, as possible.

A good way to think about this is to look at a list of software products your company pays for. Let’s say your company pays for 215 software products that cost it $420,000 a year to own and use. Nobody would object to somebody looking at that list, finding redundancies, and canceling those licenses. That is simply work that is being done by other software, or is not required anymore, so it would be stupid for the business not to cancel those licenses or let them lapse.

It is exactly the same with humans, and no matter what you read or hear, I believe this is the main reason we are seeing disruption in job markets today. I think more and more businesses are seeing themselves as money in and money out, and are seeing human workers as being very expensive and generally not very good at what they do. This is not necessarily because of individual workers but because human organizations and communication are so inefficient and wasteful. So basically, companies are realizing that they are spending millions—or hundreds of millions—of dollars per year on human talent, and they’re realizing it’s not worth it.

So that is two pieces: 1) ZERO is the optimum number of employees for any company, and 2) companies are realizing that they’re paying way too much for giant workforces that are not producing anywhere near the value being paid for. Even setting aside any modern technological innovation, these two things combined produce extreme downward pressure on the workforce. It adds pressure, stress, drama, and all sorts of negativity to the practice of finding a job, getting a job, keeping a job, working with coworkers, going through organizational changes, and everything else that goes with being a regular employee. It just basically fucking sucks. And the reason it sucks is because companies ultimately wish that you didn’t exist in the first place. We have forgotten that—or never learned it—and that needs to change.

Now let’s add AI, which, if you’ve read any of the stuff I’ve been writing, you know is, in the context of business, a technology for replacing the human intellectual work tasks that make up someone’s job.

Here’s a good example from a recent piece on this topic.

From ‘You’ve Been Thinking About AI All Wrong’

What this example shows is a workflow that a human worker does today—just like millions of similar workflows—but that AI will soon be able to do instead.

It’s just steps, as broken down further in that piece.

From ‘You’ve Been Thinking About AI All Wrong’

So now we have three pieces. The ideal number of employees is zero, companies are extremely unhappy with their current workforces, and just now—starting in 2023 and 2024—it is actually becoming possible to replace human intelligence tasks with technology.

You have to see where this is going. It is not moving towards a few jobs getting removed and a few jobs getting added. It’s not moving towards some gentle shifts in workforce dynamics or euphemisms like that. No, we are talking about fundamental change. Now for the main point of this piece.

The entire concept of work that we have had for thousands of years was a temporary model that was required to solve a temporary problem. Namely, people who are trying to build or sell something that required work they were unable to do by themselves.

Read that again.

The only reason anybody has a job is because some people are builders and creators, and they cannot do the entire job themselves.

That work—which is required to produce those products and services—is the reason people have 9 to 5 jobs. This is the reason the entire economy works the way it does. Those builders/creators then hire people, who they have to pay, and those people spend that money on things in the economy. And that is the system we are all used to.

Well…

This system goes away when builders and creators are able to make things by themselves. Which is precisely what AI is about to enable.

So, here’s where we are.

The ideal number of employees for a company is zero.

The reason companies had employees in the past is ONLY because the founders couldn’t deliver their product/service without human workers.

Companies and society have sort of forgotten this over the past decades and it’s been kind of assumed that all companies should have these large workforces, because it’s the job of companies to provide good jobs to society.

This hasn’t been working for companies, and company leaders are now noticing that they’re not getting near the value they should be from most employees and teams.

So there’s already this realization sinking in, and then we are getting AI at the exact same time.

This means at the exact time that company leaders are looking very skeptically at their human resources spending, they’re being presented with an alternative.

OK, so maybe you’re thinking:

Holy crap—he’s right.

This is a horrible problem, and we are all screwed. What do I even do?

Yes, and no. I have three things to offer here that should make you feel somewhat better.

Three reasons for hope

But it’s not all bad. I have 3 reasons this is better than it sounds.

1. Those jobs sucked anyway

How many people do you know who work regular 9 to 5 jobs in a knowledge work environment who look forward to Monday? How many people, if you really stood back and looked at your life, think it’s good to spend most of your waking moments getting ready to work, dealing with dumbass work shit, all fucking day, and then trying to destress from that day, just so you can actually enjoy the few hours you have left to live your actual life?

All that so you can hopefully make it to Friday so you can have two days where you hopefully don’t have to think about that hellscape you call work.

Is that the way humans should live on their home planet? If advanced and benign aliens came and visited, and interviewed us, would they not see that as a primitive state of being? Of course they would.

Bullshit Jobs, by David Graeber

The thing that we are about to lose is not something we should cry over. We should be worried because losing these jobs will be massively disruptive, and it’s stressful as hell to think about a completely changed future. But these Bullshit Jobs themselves are not something to cherish and remember.

2. Even fast things go slow

This transition will simultaneously happen very fast, but also pretty slowly. Even if there is advanced AGI in 2025 (which would be very fast), it still takes time for new technology to enter into companies and fully replace previous technology or humans.

So it will take a while, and that’s not even taking into account likely legislation that will slow it down even further based on how disruptive it is. So it’s not like half of the workforce will suddenly not have a job in 2026. It will be very fast, but not that fast.

3. What comes after will be much better

And finally—and best of all—what we will be left with afterwards, assuming we survive, will be a much better way to live.

That same AI that took our dumbass jobs away also has the potential to produce extraordinary abundance for humanity, freeing us up to use our days being human rather than bad biological precursors to AI workers.

We weren’t supposed to be moving paperwork, and sorting spreadsheets, and sending meeting invites, and writing computer code. It’s not what we were supposed to be doing.

What we are supposed to be doing is building and creating things for each other. Things that make each other’s lives better, and richer, and more meaningful.

And that is exactly what we will do on the other side of all of this. I obviously don’t know our chances of making it to this other side, or if/when it happens, exactly how that will play out. That is impossible to know, but what I can tell you is that I am all in on that future, because it doesn’t make sense to me to live any other way.

Sure, the disruption might tear us apart and send us back to the Bronze Age. That’s possible too, but I choose to believe that we will make it out of this. We’ll get out by getting through. And we’ll emerge on the other side better for it.

Summary

The primary reason we’re seeing all this disruption in the job market is because we’ve been part of a mass delusion about the very nature of work.

We told ourselves that millions of corporate workforce jobs—that pay good salaries, have good benefits, and allow you to save for retirement—were somehow a natural feature of the universe.

In fact, that entire paradigm was just a temporary feature of our civilization, caused by builders and creators not being able to do the work required by themselves. And that’s going away.

But it’s ok.

Most of the jobs sucked anyway, and they took up most of the daily waking hours we were supposed to be spending with family and friends.

Plus even if this transition happens really fast, it still won’t be overnight. Big things take a while.

And most importantly—what waits for us on the other side is a better way to live. A more human way to live—where we identify as individuals rather than corporate workers and exchange value and meaning as part of a new human-centered economy.

My purpose in writing this is to give an alternative—and hopefully far more sense-making—explanation of the feelings you might’ve been feeling for a very long time. And to give you both some warning—and some hope—with which to move forward.

I don’t like to give such news without providing some sort of practical advice for what people can do.

I’ve oriented my life since the end of 2022 to thinking about this problem, providing ideas and frameworks around it, and have written hundreds of articles about it. But rather than give the standard “subscribe to my newsletter” response, I would just say that I’m easy to find. Website | X | Newsletter | Community | LinkedIn

We are going to get through this, and it will be much better once we do.

🫶

1 I’m talking about the knowledge work job market, like IT, etc., not physical or professional work, although I do think they’ll be affected soon as well.

2 I’m specifically speaking of the last few years, say, since 2020.


The Real Problem With the Job Market

Table of Contents

Table of ContentsWhat I think is actually happening

3. What comes after will be much better

The feelingIf you’re like me, you’ve had this strange, uneasy feeling about the job market1 for a few years now.

The feeling is like a splinter in the brain—like something is deeply broken about the whole system, but I couldn’t grasp it or articulate it.

I’m writing this because I think I figured it out.

People talk a lot about how AI is going to replace millions of jobs, and how it will also create many more. I think that’s true, but I think there’s a lot more going on here than just AI. I think AI is an accelerant to all of this, but not the main issue.

I think the main factor is so elusive and depressing that it’s hard to even talk about, which is why we don’t.

The symptomsFirst, the symptoms. It starts with noticing how bad—and how often—work just completely sucks. Like the whole thing. Finding work. Doing work. Being stressed about not losing work. Etc.2

Most companies, departments, and teams are horribly inefficient, have very little direction, are full of wasted time and efficiency, and are poorly run. It’s constant meetings to talk about the latest corporate fuckery, which only prevents you from doing what you should be doing. It’s change for the sake of change. And when you get excited about a way to fix things, either nobody listens, or they only pretend to before failing to implement it.

So work tends to be a series of disappointments for most people, punctuated by a few rays of light.

I was curious about how many people felt this way—not wanting to do a full essay on just my own opinions, and found this from Gallup about the quiet quitting phenomenon.

Gallup: Is Quiet Quitting Real?, 2023

The overall decline was especially related to clarity of expectations, opportunities to learn and grow, feeling cared about, and a connection to the organization's mission or purpose—signaling a growing disconnect between employees and their employers.

Many quiet quitters fit Gallup's definition of being "not engaged" at work—people who do the minimum required and are psychologically detached from their job. This describes half of the U.S. workforce.

Is Quiet Quitting Real (Gallup)

I’m sure there are lots of factors going into Quiet Quitting, but I think this feeling I’m talking about is one of them.

What I think is actually happeningAnd that brings me to what I think the real issues are. They’re not pleasant, and might be rather jarring, but it’s time to have the conversation.

Here’s what I think the main problems are that are causing all this, and that are much bigger than AI.

The ideal number of employees in any company is zero. If a company could run and make money using no people, then that is exactly what it should do. We never think about this or talk about this because it’s very strange and uncomfortable, but it is true. The purpose of companies is not to employ people, it is to provide a product or service in return for money,

Because of that, there is a constant downward pressure on anybody who is employed. It is not a specific pressure from a specific person or department. It is simply a fact of business reality that manifests itself in various ways throughout an organization over time. We have to stop thinking of this as a malicious thing where we should be employed, and they are trying to get rid of us. The truth is exactly the opposite.

Nobody owes anybody a job. The only reason anyone has one is because there was a problem at some point in that business that required a human to do some part of the work. Building on that, if that ever becomes not the case, for a particular person or team or department of human employees, the natural next action is to get rid of them. Again, not because business owners or managers are bad people or anything like that. We need to stop injecting morality into this. Businesses simply should have as few employees and actually any expense as possible.

A good way to think about this is to look at a list of software products your company pays for. Let’s say your company pays for 215 software products that cost us $420,000 a year to own and use. Nobody would object to somebody looking at that list of software finding redundancies and canceling those licenses. That is simply work that is being done by other software, or is not required anymore, so it would be stupid for the business to not cancel or failed to renew those licenses.

It is exactly the same with humans, and no matter what you read or hear, I believe this is the main reason we are seeing disruption in job markets today. I think more and more businesses are seeing themselves as money in and money out, and are seeing human workers as being very expensive and generally not very good at what they do. This is not necessarily because of individual workers but because human organizations and communication are so inefficient and wasteful. So basically, companies are realizing that they are spending millions—or hundreds of millions—of dollars per year on human talent, and they’re realizing it’s not worth it.

So that is two pieces: 1) ZERO is the optimum number of employees for any company, and 2) companies are realizing that they’re paying way too much for giant workforces that are not producing near the value being paid. Forgetting any modern technological innovation, these two things combined produce extreme downward pressure on the workforce. It adds pressure, stress, drama, and all sorts of negativity to the practice of finding a job, getting a job, keeping a job, working with coworkers, going through organizational changes, and everything else that goes with being a regular employee. It just basically fucking sucks. And the reason it sucks is because companies ultimately wish that you didn’t exist in the first place. We have forgotten that—or never learned it—and that needs to change.

Now let’s add AI, which if you’ve read any of the stuff I’ve been writing, you know is— in the context of business —a technology for replacing the human intellectual work tasks that make up someone’s job.

Here’s a good example from a recent piece on this topic.

From ‘You’ve Been Thinking About AI All Wrong’

What this example shows is a workflow that a human worker does today—just like millions of similar workflows—but that AI will soon be able to do instead.

It’s just steps, like broken down further down in the piece.

From ‘You’ve Been Thinking About AI All Wrong’

So now we have three pieces. The ideal number of employees is zero, companies are extremely unhappy with their current workforces, and just now—starting in 2023 and 2024—it is actually becoming possible to replace human intelligence tasks with technology.

You have to see where this is going. It is not moving towards a few jobs get removed, and a few jobs get added. It’s not moving towards some gentle shifts in workforce dynamics or euphemisms like that. No, we are talking about fundamental change. Now for the main point of this piece.

The entire concept of work that we have had for thousands of years was a temporary model that was required to solve a temporary problem. Namely, people who are trying to build or sell something that required work they were unable to do by themselves.

Read that again.

The only reason anybody has a job is because some people are builders and creators, and they cannot do the entire job themselves.

That work—which is required to produce those products and services—is the reason people have 9 to 5 jobs. This is the reason the entire economy works the way it does. Those builders/creators then hire people, who they have to pay, and those people spend that money on things in the economy. And that is the system we are all used to.

Well…

This system goes away when builders and creators are able to make things by themselves. Which is precisely what AI is about to enable.

So, here’s where we are.

The ideal number of employees for a company is zero.

The reason companies had employees in the past is ONLY because the founders couldn’t deliver their product/service without human workers.

Companies and society has sort of forgotten this over the past decades and it’s been kind of assumed that all companies should have these large workforces, because it’s the job of companies to provide good jobs to society.

This hasn’t been working for companies, and company leaders are now noticing that they’re not getting near the value they should be from most employees and teams.

So there’s already this realization sinking in, and then we are getting AI at the exact same time.

This means at the exact time that company leaders are looking very skeptically at their human resources spending, they’re being presented with an alternative.

OK, so maybe you’re thinking:

Holy crap—he’s right.

This is a horrible problem, and we are all screwed. What do I even do?

Yes, and no. I have three things to offer here that should make you feel somewhat better.

Three reasons for hopeBut it’s not all bad. I have 3 reasons this is better than it sounds.

1. Those jobs sucked anywayHow many people do you know who work regular 9 to 5 jobs in a knowledge work environment who look forward to Monday? How many people, if you really stood back and looked at your life, think it’s good to spend most of your waking moments getting ready to work, dealing with dumbass work shit, all fucking day, and then trying to destress from that day, just so you can actually enjoy the few hours you have left to live your actual life?

All that so you can hopefully make it to Friday so you can have two days where you hopefully don’t have to think about that hellscape you call work.

Is that the way humans should live on their home planet? If advanced and benign aliens came and visited, and interviewed us, would they not see that as a primitive state of being? Of course they would.

Bullshit Jobs, by David Graeber

The thing that we are about to lose is not something we should cry over. We should be worried cause losing these jobs will be massively disruptive, and it’s stressful as hell to think about a completely changed future. But these Bullshit Jobs themselves are not something to cherish and remember.

2. Even fast things go slowThis transition will simultaneously happen very fast, but also pretty slowly. Even if there is advanced AGI in 2025 (which would be very fast), it still takes time for new technology to enter into companies and fully replace previous technology or humans.

So it will take a while, and that’s not even taking into account likely legislation that will slow it down even further based on how disruptive it is. So it’s not like half of the workforce will suddenly not have a job in 2026. It will be very fast, but not that fast.

3. What comes after will be much betterAnd finally—and best of all—what we will be left with afterwards, assuming we survive, will be a much better way to live.

That same AI that took our dumbass jobs away also has the potential to produce extraordinary abundance for humanity, freeing us up to use our days being human rather than bad biological precursors to AI workers.

We weren’t supposed to be moving paperwork, and sorting spreadsheets, and sending meeting invites, and writing computer code. It’s not what we were supposed to be doing.

What we are supposed to be doing is building and creating things for each other. Things that make each other‘s lives better, and richer, and more meaningful.

And that is exactly what we will do on the other side of all of this. I obviously don’t know our chances of making it to this other side, or if/when it happens, exactly how that will play out. That is impossible to know, but what I can tell you is that I am all in on that future, because it doesn’t make sense to me to live any other way.

Sure, the disruption might tear us apart and send us back to the Bronze Age. That’s possible too, but I choose to believe that we will make it out of this. We’ll get out by getting through. And we’ll emerge on the other side better for it.

SummaryThe primary reason we’re seeing all this disruption in the job market is because we’ve been part of a mass delusion about the very nature of work.

We told ourselves that millions of corporate workforce jobs—that pay good salaries, have good benefits, and allow you to save for retirement—were somehow a natural feature of the universe.

In fact, that entire paradigm was just a temporary feature of our civilization, caused by builders and creators not being able to do the work required by themselves. And that’s going away.

But it’s ok.

Most of the jobs sucked anyway, and they took up most of the daily waking hours we were supposed to be spending with family and friends.

Plus even if this transition happens really fast, it still won’t be overnight. Big things take a while.

And most importantly—what waits for us on the other side is a better way to live. A more human way to live—where we identify as individuals rather than corporate workers and exchange value and meaning as part of a new human-centered economy.

My purpose in writing this is to give an alternative, and hopefully far more sense-making, explanation of what you might’ve been feeling for a very long time. And to give you both some warning and some hope with which to move forward.

I don’t like to give such news without providing some sort of practical advice for what people can do.

I’ve oriented my life since the end of 2022 to thinking about this problem, providing ideas and frameworks around it, and have written hundreds of articles about it. But rather than give the standard “subscribe to my newsletter” response, I would just say that I’m easy to find. Website | X | Newsletter | Community | LinkedIn

We are going to get through this, and it will be much better once we do.

🫶

1 I’m talking about the knowledge work job market, like IT, etc., not physical or professional work, although I do think they’ll be affected soon as well.

2 I’m specifically speaking of the last few years, say, since 2020.

Powered by beehiiv

August 20, 2024

Aliens Landed in Palo Alto in October of 2027

On the 8th of October in 2027, an alien craft was seen entering the atmosphere over the Atlantic around 600 miles off the coast of Newfoundland.

It stayed very high while covering the United States and then descended quickly before landing in an open field near a water reservoir, in Palo Alto, California.

The craft was extremely spherical, somehow more than a sphere, and had a silverish shine to it that seemed to reflect and somehow improve the colors around it.

At first, the military surrounded it and sent all sorts of drones and probes to go and inspect the craft, but within 18 hours the craft began sending messages on multiple frequencies.

It started by explaining that it was a representative of a distant civilization called The Aleta. That they started as biological life forms but transitioned into a unified (part bio part technology) form eventually, and that they were here to share information with Earth at a crucial time of its development.

Upon figuring out that the craft could not be approached, destroyed, or moved in any way—and after the craft sufficiently explained its technological superiority and benign intentions—humans started listening to what it had to say.

Aleta (that’s what everyone was calling it) sent all sorts of information. It evidently figured out how to read all of our media and look for problems and trivialities. It started sending helpful information about how to solve them.

Some days, it would send extraordinary recipes for how to make better muffins, using an extracted type of butter and a new type of salt. It also released the most breathtaking and spellbinding 48-piece fantasy series that quickly became the most talked about and brilliant piece of art ever created. It was called Altered Dominion. Think Game of Thrones, Shakespeare, Harry Potter, The Notebook, and The Sopranos all rolled into one, except 1000 times more compelling.

On other days, it would release explanations of fundamental science, including an actual unified theory of physics. Formulas for new materials. And explanations of dark matter that we’ve never seen before. The Unified Theory was released on a Thursday, after it rained in Palo Alto for nearly 3 days straight, and after the season 35 finale of Altered Dominion.

But by the summer of 2029, and 2 1/2 years of constant talk and speculation worldwide, the mainstream conversation about Aleta began to change from wonder to disillusionment.

A small number of people—around 140,000 or so—were still stitched to every transmission that came from the craft, and they had actually learned how to speak with it in real-time. They oriented their lives around all of its messages and started working on how to incorporate the new science and art streaming from the craft.

This group, who called themselves ATLiens for some strange reason, literally organized sleeping shifts, transcribing shifts, and studying shifts, and then a whole discipline around the incorporation of the new knowledge into how humans currently do things. To them, the knowledge that had been sent just in the last two years would take several decades, if not a couple of centuries, to properly integrate into human society. It was just that much data, and it was just that transformational.

But most people, once they had finished watching Altered Dominion and eating all the new recipes and trying on all the new outfits, went back to TikTok.

By 2030, most people had forgotten about the strange sphere in Palo Alto. And more than forgetting about it, they took on a disappointed attitude towards it.

The difference between this group, which was most of humanity, and the cult-like ATLiens could not be more extreme. Most ATLiens moved to Palo Alto and surrounding areas in the Bay Area, just so they could be closer to others who understood the significance. They structured their lives around the regular broadcasts from Aleta and they could think of nothing else.

They studied every single piece of new information from Aleta, and figured out how to build new things using it. Many of them had trouble sleeping because they feared missing a transmission and all the wisdom contained within.

The difference in attitudes between the ATLiens and normal people was captured well by an interaction between Sarah Meyer, a devoted ATLien from Rochester now living in Fremont, and a barista at a Starbucks in Palo Alto, where she was getting her morning coffee.

The barista asked her what all her books and computers were for, and why she always seemed so excited when she came in. Sarah explained, a bit embarrassed, that she was one of “those people”, and that she comes in and gets her coffee, and then heads out to the reservoirs to receive new transmissions.

The barista wiped his hands, looked at her blankly, and said,

Oh yeah, is that thing still out there? (laughing)

Yeah, I’ve seen Dominion four times, but I just kind of tuned out after that.

(checking a name on a cup and frowning slightly)

All this time we were waiting for aliens, and all they end up giving us is a new TV show.


UL NO. 446: AI Ecosystem Components, MS 0-Days, Iranian Campaign Hacks…

SECURITY | AI | MEANING :: Unsupervised Learning is a stream of original ideas, story analysis, tooling, and mental models designed to help humans lead successful and meaningful lives in a world full of AI.

NOTES

Hey!

Few things here to start out:

All better from being sick. Was quite minor. Would not even have known I was sick if not for testing.

We migrated Fabric to Go! It’s now easier to install, upgrade, and it’s way faster. INSTALL/MIGRATE

Joe Rogan had Peter Thiel on the podcast, and it was a brilliant conversation. One of the best podcasts of that type in months. MORE

I bought one of those mini-libraries to put in my neighborhood. Love the idea of sharing books with the local community!

Ok, let’s go…

MY WORK

My new essay on the 4 components (not just the model weights!) that will decide who wins out of OpenAI, Anthropic, Meta, or Google.

The 4 Components of Top AI Model Ecosystems

The four things I think will determine who wins the AI Model Wars

danielmiessler.com/p/ai-model-ecosystem-4-components

A short essay on what I see as the root of a lot of “LLMs can’t reason” arguments.

The Link Between Free Will and LLM Denial

Denying the specialness of LLMs seems tied to over-believing in the specialness of humans.

danielmiessler.com/p/free-will-llms

SECURITY

Microsoft just released patches for 90 security flaws, including 10 zero-days, with six of those being actively exploited. Notable vulnerabilities include CVE-2024-38189 (RCE in Microsoft Project), CVE-2024-38178 (memory corruption in Windows Scripting Engine), and CVE-2024-38213 (SmartScreen bypass). MORE

Russian cyberspies from the FSB, along with a new group called COLDWASTREL, have been running a massive phishing campaign dubbed "River of Phish" targeting US and European entities since 2022. The campaign aims to steal credentials and 2FA tokens from high-risk individuals, NGOs, media outlets, and government officials. MORE

The Pentagon is planning to flood the Taiwan Strait with thousands of drones in the event of a Chinese invasion. US Indo-Pacific Command chief Admiral Samuel Paparo described the strategy as creating an "unmanned hellscape" to delay Chinese forces and buy time for US and allied reinforcements. Weird that we just tell people our strategies like this, though. MORE

Sponsor

The Next Big Thing in Automated Security Investigations

Dropzone.ai is the only company I’ve seen that has truly nailed the agent-driven approach to investigations. Or really Agents used in a cyber workflow.

What they do is take alerts that come from tools like PAN, and they start autonomously investigating them, just like a human analyst. This is where this is all going, and they’re the best I’ve seen. So much so that I’m now an advisor for them!!

By the way, if you’re interested in where this is all headed, check out this article on how Gartner just canceled SOAR. It’s a clear signal that companies like Dropzone are where things are going.

Request a Demo

Jeff Sims has published a timeline of his research on offensive AI agents, detailing the development of three distinct types of offensive AI systems. MORE

SolarWinds has patched a critical deserialization vulnerability (CVE-2024-28986, CVSS 9.8) in its Web Help Desk software that could allow remote code execution. The flaw affects all versions up to 12.8.3 and has been fixed in hotfix 12.8.3 HF 1. MORE

Iranian banks have been hit by a massive cyber attack, reportedly one of the largest in the country's history. Seems likely tied to Israel/Iran tensions. MORE

Trump shared a fake image of Harris speaking at a Communist event. This one looks fairly fake, but 1) lots of people will still believe it’s real, and 2) current tech can already make more believable ones. We’re actually at the point I talked about here:

Iranian hacker group APT42 has targeted both the Trump and Biden campaigns, according to Google's Threat Analysis Group. The group is believed to be working for Iran's Revolutionary Guard Corps. Only Trump's campaign appears to have had sensitive files leaked to the press, which is quite curious. MORE

Trump corroborated this by pointing the finger at Iran for hacking his presidential campaign, praising the FBI's investigation into the breach. He mentioned that the FBI is handling it professionally and reiterated multiple times that Iran was behind it, though he didn't share specific details from the agency. MORE

Sponsor

ProjectDiscovery Cloud Platform Asset Discovery

Our latest release includes enhanced tech stack detection and universal asset discovery.

For Individuals & Bug Bounty Hunters: Discover and monitor up to 10 domains daily.

For Organizations: Uncover your external attack surface and cloud assets with automatic asset enrichment and daily monitoring.

Stay ahead with ProjectDiscovery Cloud Platform!

Discover Assets Today

China-linked cyber-spies have infected dozens of Russian government and IT sector computers with backdoors and trojans since late July, according to Kaspersky. The attacks, dubbed EastWind, are linked to APT27 and APT31, using phishing emails and cloud services like GitHub, Dropbox, and Quora for command-and-control. MORE

Scammers are targeting young Chinese job seekers in a tough economy, exploiting their desperation by offering fake job opportunities. MORE | Comments

AI / TECH

xAI’s Grok chatbot now lets users create images from text prompts and publish them to X, leading to chaotic results like Barack Obama doing cocaine and Donald Trump in a Nazi uniform. Really curious if this is going to get nerfed or not. Elon replied to one that had him pregnant standing next to Trump, saying, “Live by the sword, die by the sword.” MORE

Alex Wieckowski is on a mission to make you fall in love with reading again—and he thinks AI can help. In this episode, Alex shares how he uses AI tools like ChatGPT to recommend books, understand deeper themes in novels like Hermann Hesse’s "Siddhartha," and create actionable strategies from business books like Alex Hormozi’s "$100M Offers." MORE

Comedians are increasingly using AI to help write jokes and brainstorm ideas, with mixed results. I think this is similar to the Turing Test in terms of its importance as a marker of AI progress. If AI can write a full set of comedy and make humans laugh, that’s f*cking huge. MORE

San Francisco is looking to ban software that critics claim is being used to artificially inflate rents. The software in question allegedly helps landlords coordinate rent increases. MORE

You might be overusing Vim visual mode. This post argues that many Vim users rely too heavily on visual mode (I think I’m one of them), which can often be replaced with more efficient normal mode commands. Examples include using gg"+yG instead of ggVG"+y to copy a whole file and dk instead of Vkd to delete the current and previous lines. MORE