Clyde DeSouza's Blog

October 15, 2013

The Hollywood secret to Life Extension and Longevity.

The year is 2020 and your favorite 1980s actor doesn’t look the way (s)he used to. Many rounds of cosmetic and then reconstructive surgery have finally succumbed to gravity and to the shortcomings of the biological substrate that is the human body. This is particularly hard for a superstar actor to come to terms with: years of hard work to reach the top, the adoration of fans, wealth and ego make a mix that is hard to walk away from. An actor’s career, however, is not over, should he or she choose the next milestone: voice acting.

But once someone tastes success in Hollywood… they never want to leave just yet. Audiences, too, keep demanding one more film from a heartthrob who might be pushing 60 but was their role model for so long that it’s unthinkable for a newcomer to replace them. After all, “The Rock” saying “I’ll be back” is not the same. So how does Hollywood solve this dilemma? Digital Surrogate Actors.

High-definition cameras pick up every flaw in human skin, so one beauty pass too many is needed in post-production, especially as actors may age faster than others through a combination of work stress, lifestyle and hours spent under stage lighting. The answer: digitize the actor, with nothing less than full-body digital documentation and performance capture.

When such technology does go mainstream (read: cost effective), and it will soon, one of its many benefits will be the ability to shoot an infinite number of “takes”. But the main benefit? By opting for a Digital Surrogate of themselves, actors get an extended lease on life: a digital soul, albeit one sold to Hollywood.

But who owns an actor’s Digital Surrogate? The actors themselves? The studio? Or the mo-cap/performance capture house? To answer these questions, let’s look at how an actor’s digital surrogate is born.

The person (actor) undergoes a whole body scan. [1]

More detailed scans are then taken of, for instance, the skin texture of the face and the hands.

Say this skin texture is “baked” in September 2013, when the actor is 35 years old. The actor can then choose to “look” 35 in all movies from then on.

Facial expressions are captured using algorithms that are specific to each facial capture package. Through CG morph targets and CG face rigs, almost any expression could then potentially be synthesized. See the FACS [2] explanation for more technical details on the Facial Action Coding System.
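Morph targets (also called blend shapes) are usually combined linearly: each target stores a sculpted version of the face, and the rig adds a weighted share of each target's offset from the neutral face. Below is a minimal Python sketch of that blend. The function name and the idea of one target per FACS-style action unit are illustrative assumptions, not the API of any particular facial capture package.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def blend_face(neutral: Sequence[Vec3],
               targets: Sequence[Sequence[Vec3]],
               weights: Sequence[float]) -> List[Vec3]:
    """Linear blend: result = neutral + sum_i w_i * (target_i - neutral).

    neutral: vertex positions of the neutral face
    targets: one sculpted shape per expression unit (same vertex count as neutral)
    weights: activation of each unit; clamped to [0, 1] in this sketch
    """
    if len(targets) != len(weights):
        raise ValueError("one weight per morph target")
    result = [list(v) for v in neutral]
    for target, w in zip(targets, weights):
        w = min(max(w, 0.0), 1.0)  # clamp the activation
        for i, (tv, nv) in enumerate(zip(target, neutral)):
            for axis in range(3):
                # add this target's weighted offset from the neutral pose
                result[i][axis] += w * (tv[axis] - nv[axis])
    return [(v[0], v[1], v[2]) for v in result]
```

With a hypothetical "brow raise" target at weight 0.5 and a "lip corner pull" target at 1.0, each affected vertex moves half way and all the way toward its sculpt respectively. Clamping to [0, 1] is a design choice here; some rigs deliberately allow overshoot.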

Signature facial expressions unique to an actor (example: Jim Carrey) can also be captured as a macro rather than re-created via FACS.

Finally, motion capture. [3] The solution referenced here shows how Moore’s law is also driving down the price of mo-cap solutions. Mo-cap files can be retargeted from any actor, though in certain cases the best performance for a particular ‘style’ is still acquired from the original actor.
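Retargeting in its simplest form can be sketched as follows, assuming the source and target skeletons share the same joint names and hierarchy. The local joint rotations, which carry the "style" of a performance, are copied unchanged, and the root (hip) translation is scaled by the ratio of leg lengths so the stride fits the new body. The class and function names are hypothetical; production tools also fix foot contacts with inverse kinematics and handle differing proportions, which this sketch ignores.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Quaternion = Tuple[float, float, float, float]  # (w, x, y, z)


@dataclass
class MocapFrame:
    root_position: Tuple[float, float, float]  # hip position in metres
    joint_rotations: Dict[str, Quaternion] = field(default_factory=dict)  # local rotation per joint


def retarget(frames: List[MocapFrame], source_leg_m: float, target_leg_m: float) -> List[MocapFrame]:
    """Naive retarget between skeletons with identical joint names and hierarchy."""
    if source_leg_m <= 0 or target_leg_m <= 0:
        raise ValueError("leg lengths must be positive")
    scale = target_leg_m / source_leg_m
    retargeted = []
    for frame in frames:
        x, y, z = frame.root_position
        retargeted.append(
            MocapFrame(
                # shorter legs cover less ground, so the root path shrinks proportionally
                root_position=(x * scale, y * scale, z * scale),
                # rotations are copied as-is; the dict is copied so edits don't leak back
                joint_rotations=dict(frame.joint_rotations),
            )
        )
    return retargeted
```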

An actor can also “immortalize” their voice using voice banking [4], which people afflicted with ALS have come to rely on. A banked voice may be of use to an actor long after he or she retires.

Video: https://vimeo.com/36048029

Realistic Human Skin:

The holy grail of CG human rendering is “realism”. Big strides are already being made that should make digital humans (actors) indistinguishable from live talent on screen, rendered in real time.

Yes: if the Digital Surrogate Actor argument is to gain traction in Hollywood, directors will want to work with digital actors in real time and see them respond live through the viewfinder. The video above is the work of one man, Jorge Jimenez, and his algorithms that render lifelike skin. He calls the technique separable SSS (Separable Subsurface Scattering) [5], and it is designed to cut render times on today’s GPUs.
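The speed-up behind "separable" filtering is that a 2D kernel which factors into the product of two 1D kernels can be applied as a horizontal pass followed by a vertical pass. That cuts the samples per pixel from (2r+1)² to 2(2r+1). Separable SSS is, broadly, an application of this idea to a kernel that approximates how light diffuses under skin. The sketch below uses a plain Gaussian instead, on a grayscale image stored as nested lists, and leaves out the depth-aware kernel scaling a real skin shader needs. The function names and default radius and sigma are illustrative assumptions.

```python
import math
from typing import List

Image = List[List[float]]


def gaussian_kernel(radius: int, sigma: float) -> List[float]:
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_pass(image: Image, kernel: List[float], horizontal: bool) -> Image:
    """One 1D convolution pass, with clamp-to-edge sampling at the borders."""
    height, width = len(image), len(image[0])
    radius = len(kernel) // 2
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for k, weight in enumerate(kernel):
                offset = k - radius
                if horizontal:
                    sx, sy = min(max(x + offset, 0), width - 1), y
                else:
                    sx, sy = x, min(max(y + offset, 0), height - 1)
                acc += weight * image[sy][sx]
            out[y][x] = acc
    return out


def separable_blur(image: Image, radius: int = 3, sigma: float = 1.5) -> Image:
    """Horizontal then vertical pass: 2 * (2r + 1) samples per pixel."""
    kernel = gaussian_kernel(radius, sigma)
    return blur_pass(blur_pass(image, kernel, horizontal=True), kernel, horizontal=False)


def full_2d_blur(image: Image, radius: int = 3, sigma: float = 1.5) -> Image:
    """Reference 2D convolution with the outer-product kernel: (2r + 1)^2 samples per pixel."""
    kernel = gaussian_kernel(radius, sigma)
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for j, wy in enumerate(kernel):
                sy = min(max(y + j - radius, 0), height - 1)
                for i, wx in enumerate(kernel):
                    sx = min(max(x + i - radius, 0), width - 1)
                    acc += wy * wx * image[sy][sx]
            out[y][x] = acc
    return out
```

Both functions produce the same image up to floating-point rounding. Only the separable version scales well as the blur radius grows, which is what makes it practical per frame on a GPU.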

To learn more, browse through Nvidia’s paper on rendering realistic human skin in CG. [6]

The Ethics of DSA – Digital Surrogate Actor ownership:

Who “owns” this digital actor today? Full digital replicas of some actors are lying around on servers at VFX houses and studios. With a little retargeting, their performance capture files can be spliced and edited much like video, even broken down into facial expressions at the level of sentences and phrases, and then mapped onto any other digital character without the actor’s consent.

For example: a studio needs Jim Carrey’s exaggerated facial expressions for “MASK 3”, but Jim Carrey is asking too much for the film. A typical response from the suits at a production studio would be: “Let’s search our servers and see if we’ve mo-capped and performance captured him.” A few minutes later, after a positive file search: “OK, let’s retarget it to a stock digital character and get on with the show.”

Add advances such as Jimenez’s realistic CG face rendering, and the studio now has a completely digital actor surrogate within its budget. At this point we are still talking about vanilla 3D, but once we have a 3D scan and the Digital Surrogate, rendering it in stereoscopic 3D is a no-brainer. When the depth channel kicks in… we have total immersion in Artificial Reality, and the audience is none the wiser.

This is something for actors to think about. It is an open question whether actors have signed contracts giving up the rights to their mo-cap and performance capture libraries. Their FACS libraries and digital “skins” are personal property that should be protected by copyright, and the right to decide should rest entirely with the human from whom the surrogate was made.

However, there’s also the good side to Digital Actor Surrogates.

Video (John Travolta’s strut): http://www.youtube.com/watch?feature=...

Think of John Travolta’s “strut” in the final scene of Staying Alive, back in 1983. Can he “strut” the same way he could back then? …err. What if a studio decided to remake Staying Alive? If it had the original actor’s mo-capped “strut”, it could simply apply it to the backside of a digital actor in leather trousers. A photo-realistic New York is available today.

How about Michael Jackson’s dance style and the moonwalk? He’s often imitated, but in the end the nuances were his. Was his moonwalk ever mo-capped? I do not know. Could it be mo-capped with 100% accuracy from a video file? My guess is that, with advances in algorithms and multi-view video analysis, it could.

References:

Images used:

Cyberpunk 7070 - http://www.rogersv.com/blog/cyberpunk...

Jim Carrey performance capture - http://www.flickr.com/photos/deltamik...

[1] Whole Body Scanning – Infinite Realities: http://ir-ltd.net/

[2] FACS – Facial Action Coding System - http://en.wikipedia.org/wiki/Facial_A...

[3] Motion Capture – Nuicapture - http://www.youtube.com/watch?v=mhb6Uq...

[4] Voice Banking - http://www.nbcmiami.com/news/local/Wo...

[5] Separable Sub-Surface Scattering - http://www.iryoku.com/separable-sss-r...

[6] Realistic Skin rendering – Nvidia - https://developer.nvidia.com/content/...

October 5, 2013

Parsing Natural Scenes and Natural Language with Recursive Neural Networks

“I granted the AI access to the cameras on your Wizer,” he said. “Remember when I told you about frames and how the AI could take snapshots of your environment, then run image and feature recognition?”

“Yeah…” was all I could manage.

“That’s what it just did. The AI takes a snapshot every few seconds when you’re wearing the Wizer and creates a frame. The name of the bridge on the sign above is one of the prominent symbols to be recognized.”

“The AI also gets help from GPS data. It then cross references the current frame with its database of memories… stored frames, and if it finds a match, [it] tells you. Memories, that’s all they are, but if they begin to fade, [it'll] remind you,” he said. – Memories with Maya

While writing Memories with Maya, I did a good deal of research on AI and AR to make the science plausible. It is encouraging when actual science catches up with the creative spin on AI woven into the novel.

Important:

Visit Socher.org to read more on Parsing Natural Scenes and Natural Language with Recursive Neural Networks, and to download the source code and data sets from which the still image above is taken.

(all images used are copyright Socher.org)

October 4, 2013

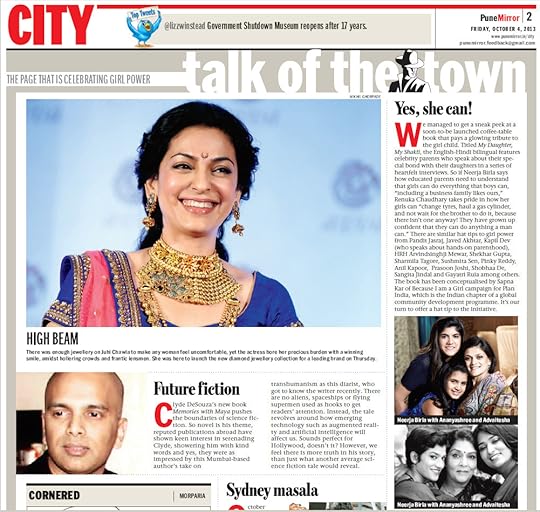

Future Fiction - Hard Science fiction set in India - The Pune Mirror

Future Fiction, The Pune Mirror, India, Oct 4th 2013

Clyde DeSouza’s new book Memories with Maya pushes the boundaries of science fiction.

So novel is his theme that reputed publications abroad have shown keen interest in serenading Clyde, showering him with kind words, and yes, they were as impressed by this author’s take on transhumanism as this diarist, who got to know the writer recently.

There are no aliens, spaceships or flying supermen used as hooks to get readers’ attention. Instead, the tale revolves around how emerging technology such as augmented reality and artificial intelligence will affect us.

Sounds perfect for Hollywood, doesn’t it? However, we feel there is more truth in his story than another average science fiction tale would reveal.

September 4, 2013

Till Death do us part - The ethics and evolution of human relationships

Posted: Sep 4, 2013

This essay contains spoilers from a book I’ve read, “Memories with Maya” by Clyde DeSouza.

I’ve read it a few times now, and each time I have picked up a different outlook on just what it means to be human in today’s hyper-paced technological world.

In composing this article, I had to make myself think not just about my own perspective, but about what others will consider just a few years from now.

Everyone is familiar with the human urge to meet that special someone in their lives.

We either consciously or subconsciously check out members of the opposite sex and make mental notes of things we like physically. The protagonist in the book, Dan, starts out in the story by using his phone to focus on his close female friend, Maya, checking out her cleavage. Just a bit later in the book, we learn that Dan and Maya are a casual couple. Much of the story is interwoven with their relationship.

What makes their relationship unique? Technology. What makes any relationship unique today? Technology.

Long Distance Petting

Many years ago, when I was dating, the landline telephone was instrumental in maintaining my relationship with my girlfriend. Most times we had an hour at best to sort out our daily lives in high school. There was no Internet. Most dates were spent copying each other’s homework in between watching a 19-inch tube TV. In “Maya”, the world is very contemporary: cameras in cell phones, large-screen televisions, and remote-controlled petting across many miles.

What will pass for maintaining a meaningful relationship in the future?...

Read the entire article on the IEET website

August 26, 2013

Will Google enable Teletravel in a Surrogate Reality World?

The year is 2025 and there’s a raging snow storm outside. The world is a pale shade of white and gray. You wake up and instinctively look around the bedroom to locate the amber dot glowing on your G-Glass iteration #4 (4th generation upgrade) visor.


Your friends envy you. They may have more feature-packed visors; some even have Wizers (visors with AI built in). They talk excitedly about how the narrow AI in their current-gen Wizers is going to be upgraded to AGI, but you just smile to yourself, put on your G-Glass#4 and sink back into your pillow, looking out the window: the blank canvas of the world waits to be painted by your imagination.

The amber dot is glowing, and you do a slow blink with both eyes. The inward-facing camera reads your eye gesture and opens the note.

Hey Dan – Join us at the beach? Co-ords are N 39° 1′ 12.2664″, E 1° 28′ 55.7646″.

You know the place like a familiar telephone number, even before the spinning Digital Globe zooms in and fills your G-Glass#4’s field of view. You have tele-traveled to a secluded, clothing-optional beach cove in Ibiza. You look around and spot five of your friends already there. Two are present the same way you are, as Dirrogates (Digital Surrogates). The other three are there in person: the “naturals”.

You can’t feel the sun or the sand the way the “naturals” can, but that doesn’t matter: the metadata from the location has already streamed through and seamlessly adjusted the air-conditioning in your bedroom, and you have the option of turning off the sun-tan lamp and the ambient sound of the surf and waves lapping at the beach. Advanced frequency filtering reduces the volume of the high-pitched cries of the sea birds so you can hear your friends better.

This is why some of your friends who own other visors envy you: they pay a premium to tele-travel in the Surrogate Reality world that Google painstakingly built over the previous decades.

Mesh-net is born:

The first version of this Surrogate Metaverse was simply called Google Earth, and its imagery and topography were updated only every few months or so… until the launch of Mesh-net changed everything. Now 3D meshes of the real world could be created in real time, at near-infinite resolution and in Euclideon-like detail, and be pin-registered to their real-world counterparts.

The high-resolution eyes in the sky did much of the heavy lifting of capturing live video and draping it over the real-time 3D geometry. Thanks to its stereoscopic cameras and depth-from-stereo algorithms, the advanced G-Glass#4 could produce ground-level meshes of everything in a wearer’s field of view, including live meshes of people draped with video of themselves: living organic paint.
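The G-Glass#4 is fictional, but depth-from-stereo is real and rests on triangulation. In a rectified stereo pair, a point shifts horizontally by a disparity d between the two images, and its depth is Z = f·B/d, where f is the focal length in pixels and B the distance between the two cameras. The sketch below finds d along one scanline by simple sum-of-absolute-differences block matching and then applies the formula. The function names, window size and camera numbers are illustrative assumptions; production systems typically use more robust matchers such as semi-global matching.

```python
from typing import List, Sequence


def scanline_disparity(left: Sequence[float], right: Sequence[float],
                       max_disparity: int, window: int = 1) -> List[int]:
    """Winner-take-all block matching on one rectified scanline (sum of absolute differences).

    A point at column x in the left image appears at column x - d in the right image.
    """
    n = len(left)
    result = []
    for x in range(n):
        best_d, best_cost = 0, float("inf")
        for d in range(0, min(max_disparity, x) + 1):
            cost = 0.0
            for k in range(-window, window + 1):
                xl = min(max(x + k, 0), n - 1)      # clamp window to the scanline
                xr = min(max(x + k - d, 0), n - 1)
                cost += abs(left[xl] - right[xr])
            if cost < best_cost:                    # keep the cheapest match
                best_d, best_cost = d, cost
        result.append(best_d)
    return result


def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Pinhole stereo triangulation: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("zero disparity means the point is at infinity")
    return focal_length_px * baseline_m / disparity_px
```

For example, with a hypothetical 700-pixel focal length and cameras 6 cm apart, a 3-pixel disparity corresponds to a point about 14 m away. Nearer objects produce larger disparities, which is why stereo depth is most precise close to the wearer.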

Initially only the military had access to the technology, but with state governments and a growing number of allies passing unanimous resolutions to ward off terrorism, citizens waived their privacy concerns and rights, which opened the door to widespread commercialization and the birth of Mesh-net.

Mixed Reality…to Surrogate Reality – The hard science:

Everyone is now either aware of, or being introduced to, Augmented Reality, the next big technology marvel. While it previously resided only in university research labs, the emergence of cost-effective hardware such as visors from Vuzix, Meta-Glass and Google Glass, and even iPhone and Android phones with sophisticated sensors such as digital compasses and accelerometers, has allowed Augmented Reality applications to reach the public. One eager market has been the advertising industry, and marketing agencies have helped popularize the technology.

Augmented Reality has so far come to mean overlaying computer-generated imagery (CGI), in perfect registration and orientation, on a live view of the real world (usually via a device’s built-in camera). Most people are now familiar with turning on their smartphone camera and running an AR application that superimposes useful, context-relevant information on the live camera view. Examples range from a list of nearby fast-food outlets to geotagged “tweets” from Twitter. Applications like Layar also allow 3D objects of interest to be overlaid.
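Location-based AR apps of this kind commonly place a label by comparing the phone's compass heading with the bearing from its GPS position to the geotagged point. A minimal sketch follows, assuming a camera whose horizontal field of view is known and ignoring tilt, altitude and sensor noise. The function names are hypothetical, and this is not Layar's actual implementation.

```python
import math
from typing import Optional


def initial_bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle initial bearing from point 1 to point 2, in degrees clockwise from true north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_lon = math.radians(lon2 - lon1)
    x = math.sin(d_lon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(d_lon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def overlay_x(device_lat: float, device_lon: float, heading_deg: float,
              poi_lat: float, poi_lon: float,
              horizontal_fov_deg: float, screen_width_px: int) -> Optional[float]:
    """Horizontal pixel position of a geotagged point of interest, or None if it is off-screen.

    Uses only GPS position and compass heading; pitch, roll and altitude are ignored.
    """
    bearing = initial_bearing_deg(device_lat, device_lon, poi_lat, poi_lon)
    # Signed angle from the camera's optical axis to the POI, wrapped into [-180, 180).
    offset_deg = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    half_fov = horizontal_fov_deg / 2.0
    if abs(offset_deg) > half_fov:
        return None
    # Pinhole projection: focal length in pixels derived from the field of view.
    focal_px = (screen_width_px / 2.0) / math.tan(math.radians(half_fov))
    return screen_width_px / 2.0 + focal_px * math.tan(math.radians(offset_deg))
```

A point due east of a phone that faces east lands in the middle of the screen. Turn the phone 10° to the left and the label slides right of centre, which is the "registration" effect the paragraph describes.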

If we could use the concept of Augmented Reality, mixed with Virtual Reality, in context with a town, city or even the world… we could in effect, be sitting at home and tele-traveling via a digital representation of ourselves, our ‘Dirrogates’, in a superimposed world, while “interacting” with real people in the real world. This would be Mixed Reality, or Surrogate Reality.

Google Powered Surrogate Reality:

How could Google power such a Surrogate Reality experience? Google already has a Virtual version of not only every street, town and city… It has a virtual copy of the entire planet in the form of Google Earth. With Google Earth you can zoom in and spin a “Digital Globe” on a PC to visit a geographically correct and accurate digital representation of any city or country in the world down to street level. A rich overlay map shows an aerial satellite image of this location draped on the digital globe.

The Digital Globe from Google also has many ‘layers’ that can be turned on and off, such as traffic information and the locations of civic services. One of the most important is the 3D buildings layer, which allows whole streets and city blocks to be represented in Google Earth as 3D models that can be navigated in real time. All these layers are geo-referenced and located at exact real-world co-ordinates. So if, for instance, we create a 3D model of a building or a community park and place it at the exact co-ordinates it occupies in the real world, we would have a location we can navigate to virtually, and be at the same place as someone who is there in real life (IRL)!
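Geo-referencing a model boils down to turning latitude and longitude into metres relative to a chosen origin, then shifting the model's vertices there. Google Earth's KML format, for instance, positions a 3D `<Model>` by longitude, latitude and altitude in its `<Location>` element. The sketch below assumes a spherical Earth and the equirectangular approximation, which is adequate over a few kilometres but not for whole-globe distances. The function names and the x-east, y-north, z-up convention are illustrative assumptions.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical-Earth assumption


def latlon_to_local_m(lat: float, lon: float, origin_lat: float, origin_lon: float) -> Tuple[float, float]:
    """East/north offset in metres of (lat, lon) from a chosen origin (equirectangular approximation)."""
    # A degree of longitude shrinks with the cosine of latitude; a degree of latitude does not.
    east = EARTH_RADIUS_M * math.radians(lon - origin_lon) * math.cos(math.radians(origin_lat))
    north = EARTH_RADIUS_M * math.radians(lat - origin_lat)
    return east, north


def place_model(vertices_m: List[Tuple[float, float, float]],
                anchor_lat: float, anchor_lon: float,
                origin_lat: float, origin_lon: float) -> List[Tuple[float, float, float]]:
    """Shift a model authored around its own (0, 0, 0) so that point sits at the anchor's real-world spot.

    Vertex convention: x = east, y = north, z = up, all in metres.
    """
    dx, dy = latlon_to_local_m(anchor_lat, anchor_lon, origin_lat, origin_lon)
    return [(x + dx, y + dy, z) for x, y, z in vertices_m]
```

Once the park model and a visitor's GPS fix are expressed in the same local frame, "being at the same place" is simply a matter of comparing coordinates.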

Another example: if we create a replica 3D model of a real supermarket or bookstore and place it at the exact same co-ordinates on the Digital Globe, we could “fly” to this virtual model and “enter” the building, as shown in the video above.

Case Study – Social Networking in a Surrogate Reality World:

How does this all tie in and what are the benefits of mixing Virtual Reality and Augmented Reality? Take the case of the community park as mentioned above. If we have a virtual model of such a community park, and if Google Earth adds an “avatar” layer we could have a Second Life type socializing and collaboration platform. Using Internet chat, voice and webcam we could start a social interaction with another avatar that might be at the same location.

In fact, Google experimented with such a virtual world in its project Lively, which has since been closed. If you think this would be an interesting way to meet people from different parts of the world through a very intuitive interface (much better than navigating Second Life), imagine if you could interact with REAL PEOPLE who are present at that real-world location! Your Digital Surrogate, driven by you from the comfort of your armchair, would be socializing with a real human.

Surrogate interaction with Humans:

So how would your Surrogate avatar interact with Real People? This is where Augmented Reality comes in.

An excerpt from the hard science novel “Memories with Maya” explains…

The prof was paying rapt attention to everything Krish had to say. “I laser scanned the playground and the food-court. The entire campus is a low rez 3D model,” he said. “Dan can see us move around in the virtual world because my position updates. The front camera’s video stream is also mapped to my avatar’s face, so he can see my expressions.”

“Now all we do is not render the virtual buildings, but instead, keep Daniel’s avatar and replace it with the real-world view coming in through the phone’s camera,” explained Krish.

“Hmm… so you also do away with render overhead and possibly conserve battery life?” the prof asked.

“Correct. Using GPS, camera and marker-less tracking algorithms, we can update our position in the virtual world and sync Dan’s avatar with our world.”

“And we haven’t even talked about how AI can enhance this,” I said.

I walked a few steps away from them, counting as I went.

“We can either follow Dan or a few steps more and contact will be broken. This way in a social scenario, virtual people can interact with humans in the real world,” Krish said. I was nearing the personal space out of range warning.

“Wait up, Dan,” Krish called.

I stopped. He and the prof caught up.

“Here’s how we establish contact,” Krish said. He touched my avatar on the screen. I raised my hand in a high-five gesture.

“So only humans can initiate contact with these virtual people?” asked the prof.

“Humans are always in control,” I said.

Radius of ‘Contact’; Human and Surrogate:

So a human present at a real-world location can, via a smartphone or visor, locate and interact with a “Surrogate”. Social interaction will take place just as it does today: the Surrogate hears the human, who speaks through the visor’s mic, and the human hears the Surrogate via the speaker or headphones. The human, of course, is at all times “seeing” the Surrogate in the augmented view of the see-through visor, and so knows where the Surrogate is. Communication can be initiated only by the user “clicking” or gesturing at the Surrogate, and maintained only while the Surrogate is within a certain radius-of-contact of the visor or smartphone.
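The radius-of-contact rule can be sketched as a small state machine on top of a great-circle distance. The rules are that only the human can open contact, only inside the radius, and contact drops once the pair drifts apart. The haversine formula is standard. The `ContactSession` class, its method names and the spherical-Earth radius are hypothetical choices for illustration, not part of any existing AR platform.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # spherical-Earth assumption
LatLon = Tuple[float, float]  # (latitude, longitude) in degrees


def haversine_m(a: LatLon, b: LatLon) -> float:
    """Great-circle distance in metres between two (lat, lon) points."""
    phi1, phi2 = math.radians(a[0]), math.radians(b[0])
    d_phi = phi2 - phi1
    d_lambda = math.radians(b[1] - a[1])
    h = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


class ContactSession:
    """Hypothetical radius-of-contact rule: only the human opens contact, and only within range."""

    def __init__(self, radius_m: float) -> None:
        self.radius_m = radius_m
        self.active = False

    def in_range(self, human: LatLon, surrogate: LatLon) -> bool:
        return haversine_m(human, surrogate) <= self.radius_m

    def human_gesture(self, human: LatLon, surrogate: LatLon) -> bool:
        """The human 'clicks' or gestures at the Surrogate; this succeeds only inside the radius."""
        if self.in_range(human, surrogate):
            self.active = True
        return self.active

    def tick(self, human: LatLon, surrogate: LatLon) -> bool:
        """Called on every position update; contact is dropped once the pair drifts out of range."""
        if self.active and not self.in_range(human, surrogate):
            self.active = False
        return self.active
```

Keeping the gesture and the range check separate mirrors the novel's "humans are always in control" rule. A Surrogate that wanders into range never opens contact on its own.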

On establishing contact, a 3D avatar of the human can then “spawn” in the superimposed world so that the Surrogate has visual confirmation of, or can “see”, the human. The accelerometer and compass in the smartphone or visor can track the human’s movement in the real world and update the position of the human’s avatar accordingly. It is then up to either the human or the Surrogate to stay in one place, or to move around and be followed by the other, in order to stay within the radius of contact for social interaction in their Digital Personal Space.
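Tracking a person from accelerometer and compass alone is usually done with pedestrian dead reckoning. The device detects footsteps from spikes in acceleration, then moves the position one estimated stride along the current heading. The sketch below assumes a fixed 0.7 m stride and an 11 m/s² step threshold purely for illustration. Real systems tune these per user and correct the inevitable drift with GPS or other position fixes.

```python
import math
from typing import Iterable, List, Tuple


def count_steps(accel_magnitudes: Iterable[float], threshold: float = 11.0) -> int:
    """Count steps as upward crossings of an acceleration-magnitude threshold (m/s^2).

    Gravity alone reads about 9.8 m/s^2; each footfall adds a spike above it.
    """
    steps, above = 0, False
    for a in accel_magnitudes:
        if a > threshold and not above:
            steps += 1          # rising edge: a new footfall
            above = True
        elif a <= threshold:
            above = False       # back below threshold, ready for the next step
    return steps


def dead_reckon(start_east_north: Tuple[float, float], step_headings_deg: Iterable[float],
                step_length_m: float = 0.7) -> List[Tuple[float, float]]:
    """Advance one stride along the compass heading (degrees clockwise from north) for each detected step."""
    east, north = start_east_north
    track = [(east, north)]
    for heading in step_headings_deg:
        rad = math.radians(heading)
        east += step_length_m * math.sin(rad)
        north += step_length_m * math.cos(rad)
        track.append((east, north))
    return track
```

Each new point in the track would be used to move the human's avatar in the superimposed world, so the Surrogate can follow.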

In the video above, a Surrogate is shown at a real-world location as he would appear through the display of a smartphone or visor. By 2025, there is every reason to predict that the “Surrogate” will be of sufficiently high quality, and that humans (having grown up with video games and lifelike anime) will get over the “uncanny valley” effect that plagues today’s generation of CGI and human minds.

The future of Mesh-net and Dirrogate Reality:

Competitors tried back in 2015, and still try today, to build something as sophisticated as Mesh-net, in an attempt to create parallel universes, and recently there have been attempts to hack into Mesh-net itself. Tele-traveling had a serious impact on transportation, and there were many job losses with a knock-on effect on related businesses. But these incidents are dropping, as humans finally see the logic of living in a borderless world where resources are abundant, thanks to advances in technology that allowed large-scale desalination of water and near-Earth-body and asteroid mining, and to the dying out of warmongering human minds.

The essay is to seed ideas, and is not to be interpreted in any way as endorsement or involvement from Google or other organizations mentioned. All existing trademarks and copyrights are acknowledged and respected as belonging to their respective owners.

The concepts in this essay are from the novel “Memories with Maya” by Clyde DeSouza.

August 24, 2013

In Memories with Maya, human sexuality gets an upgrade

What happens when you mix virtual reality, haptic interfaces, augmented reality, and artificial intelligence?

That’s what Clyde DeSouza explores in his highly entertaining near-future science-fiction novel, Memories with Maya.

DeSouza introduces the “Wizer,” an augmented-reality, see-through visor driven by AGI (artificial general intelligence) — Google Glass on steroids.

It’s coupled with 3D scans of real environments generated by wall-mounted laser cameras (think next-gen Kinect) to insert remote participants seamlessly in real environments, Holodeck-style, DeSouza explained to me...

...Read the rest on the popular science site io9.com

August 15, 2013

Near future Fire Fighting Robot “Drones” being tested

From Chapter 4:

…“The engineering team at AYREE had done a slick job embedding those tiny cameras. Using the same code tweaked from the tests we’d done in the food court, the Wizer was given the ability to generate a depth map of everything in its field of view.

I moved my head from side to side in an arc. A 3D model of the real world was taking shape in real-time.

The video was draped over”…

Dirrogate science is now becoming reality in the form of Fire Fighting Robots (FFRs) being developed by engineers at UCSD’s Jacobs School of Engineering.

“The prototype is about a foot tall and 8 inches wide and rolls on a pair of wheels with a metal leg that lets it climb stairs and right itself if it falls down, Bewley [mechanical engineering professor at the University of California-San Diego] said.

Special cameras mounted on the robot allow it to produce three-dimensional images that show the interior of a burning building and the temperature of items in the structure.

“It builds up a virtual reality, the same kind of thing you explore in a shooter (video) game like World of Warcraft,” Bewley said.

The idea is for several of the robots to be deployed at once into a burning building while firefighters set up their equipment outside.

The firefighters will give the robots general instructions on what to look for but once inside, the robots will be on their own, using artificial intelligence to work as a team exploring the building, Bewley said.

Read the entire article about FireFighting Robots on MSN News.

July 30, 2013

Review of Memories with Maya by Futurist – Giulio Prisco.

I would like to thank futurist and transhumanism editor Giulio Prisco for his insightful review of the story and the underlying message(s) in Memories with Maya. The review has been published on KurzweilAI.net.

Giulio’s review, my brief interaction with him, and his writings on TuringChurch.com will be among the resources I turn to while researching science and ethics for the sequel to Memories with Maya, The Dirrogate, which I begin writing later this year.

A few choice snippets from Giulio’s review below:

“…I look forward to meeting again some of the characters of Memories with Maya … and not just those who make it to the end of the book alive…”

“…[“If your body died, but you had a mindclone, you would not feel that you personally died, although the body would be missed more sorely than amputees miss their limbs.”] I think it would be very cool to combine this concept with the advanced augmented-reality technology described in the novel…”

“…He introduces the “Wizer,” an augmented-reality, see-through visor driven by AGI (artificial general intelligence) — Google Glass on steroids…”

Read the full review here.

June 25, 2013

Sexbots, Ethics, and Transhumans

I zoomed in as she approached the steps of the bridge, taking voyeuristic pleasure in seeing her pixelated cleavage fill the screen.

What was it about those electronic dots that had the power to turn people on? There was nothing real in them, but that never stopped millions of people every day, male and female, from deriving sexual gratification by interacting with those points of light.

It must all be down to our perception of reality. — Memories with Maya

We are transitional humans; Transhumans:

Transhumanism is about using technology to improve the human condition. Perhaps the nascent stigma attached to the transhumanist movement in some circles comes from the ethical implications of using high technology, biotech and nanotech to name a few, on people. Yet being transhuman does not necessarily mean bio-hacking the human body or donning cyborg-like prosthetics, although it is hard not to recognize the benefits such human augmentation technology brings to people in need [i].

Orgasms and Longevity:

Today, how many people, even theists, can claim not to use sexual aids and visual stimulation, in the form of video or interaction via video, to achieve sexual satisfaction? It’s hard to deny the therapeutic effect an orgasm has in improving the human condition. In brief, here are some benefits to health and longevity associated with regular sex and orgasms:

When we orgasm we release hormones, including oxytocin and vasopressin. Oxytocin equals relaxation, and when released it can help us calm down and feel euphoric.

People having more sex may knock years off their physiological age: Dr. Oz touts a guideline of 200 orgasms a year to take six physiological years off your age. [1]

Recommended reading: The Science of Orgasm [2]

While orgasms usually occur as a result of physical sexual activity, there is no conclusive study proving that beneficial orgasms are only produced when sexual activity involves two humans. Erotica in the form of literature, and later moving images, has been used to stimulate the mind into inducing an orgasm in the absence of a human partner for a good many centuries. As technology is the key enabler in stimulating the mind, what might the sexual choices (preferences?) of the human race, the Transhuman, be going forward?

Enter the Sexbot…

(Gray Scott speaking on Sexbots at 1:19 minutes into the video)

SexBots and Digital Surrogates [Dirrogates]

Sexbots, or sex robots, can come in two forms: fully digital incarnations with AI, viewed through Augmented Reality visors, or physical robots advanced enough to pass as human surrogates. The porn industry has always been at the forefront of video and interactive innovation, experimenting with ways of immersing the audience in the “action”. Gonzo porn [3] is one such technique: it started off as a passive, linear viewing experience, then progressed to multi-angle DVD interactivity, and now to Virtual Reality first-person point-of-view interactivity.

Augmented Reality and Digital Surrogates of porn stars performing with AI built in, will be the next logical step. How could this be accomplished?

Somewhere on hard drives in Hollywood studios, there are full-body digital models and “performance capture” files of actors and actresses. When these perf-cap files are assigned to any suitable 3D CGI model, an animator can bring to life the Digital Surrogate [Dirrogate] of the original actor. Coupled with realistic skin rendering, for instance using Separable Subsurface Scattering (SSSS) [4], and with AI “behaviour” libraries, these Dirrogates can populate the real world, enter living rooms, and change or uplift a person’s mood for the better.

(The above video is for illustration purposes of 3D model data-sets and perf-capture)

With 3D printing of human body parts now possible, and blueprints with full mechanical assembly instructions coming online [5], the other kind of sexbot is possible. It won’t be long before the 3D laser-scanned blueprint of a porn-star sexbot is available for licensing and home printing, at which point the average person will willingly transition to transhuman status once the ‘buy now’ button has been clicked.

Programmable matter – Claytronics [6] will take this technology to even more sophisticated levels.

Sexbots and Ethics:

If we look at Digital Surrogate Sexbot technology, which is a progression of interactive porn, we can see the technology to create such Dirrogate sexbots exists today, and better iterations will come about in the next couple of years. Augmented Reality hardware when married to wearable technology such as ‘fundawear’ [7] and a photo-realistic Dirrogate driven by perf-captured libraries of porn stars under software (AI) control, can bring endless sessions of sexual pleasure to males and females.

Things get complicated as technology evolves, and, to borrow a term from Kurzweil, exponentially. The Kinect 2 was recently announced. In the hands of capable hackers, this off-the-shelf ‘game controller’ hardware has shown what is possible: it can be used as a full-body performance capture solution, as a 3D scanner that builds a replica of a room in real time, and more…
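The Kinect 2 measures per-pixel depth with a time-of-flight sensor. Turning one depth frame into 3D geometry is a back-projection through the pinhole camera model: a pixel (u, v) with depth Z becomes X = (u − cx)·Z/fx and Y = (v − cy)·Z/fy. The sketch below assumes calibrated intrinsics (fx, fy, cx, cy), which in practice come from the device or a calibration step. Fusing many such frames into one consistent room model, as KinectFusion-style systems do, is beyond this sketch.

```python
from typing import List, Sequence, Tuple


def depth_to_points(depth_m: Sequence[Sequence[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Back-project a depth image into camera-space 3D points with the pinhole model.

    depth_m[v][u] is the distance along the optical axis in metres; 0 marks 'no reading'.
    fx, fy are focal lengths in pixels and (cx, cy) is the principal point.
    """
    points = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels where the sensor returned nothing
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Running this on every frame yields a live point cloud of the room, and the same depth stream drives skeleton tracking for performance capture.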

This means that during a Dirrogate sexbot session, in which a human wears an Augmented Reality visor such as Meta-glass [8], it would be possible to connect via the Internet to a partner, wife or husband, and have their live performance, captured by a Kinect 2 controller, drive the photo-realistic Dirrogate of your favorite porn star.

Would this be the makings of Transhumanist adultery? Some other ethical issues to ponder:

Thou shalt not covet thy neighbor’s wife, but there is no commandment about pirating her perf-capture file.

Will humans, male or female, prefer sexbots to human partners for sexual fulfillment? Will the release of oxytocin make humans “feel” for their sexbots?

As AI algorithms improve, bordering on artificial sentience, will sexbots start asking for “Dirrogate Rights”?

These are only some of the points worth considering, and if they seem like plausible concerns, imagine what happens in the case of humanoid physical sexbots, as Gray Scott mentions in his video above.

As we evolve into Transhumans, we will find ourselves asking that all-important question: “What is Real?”

It will all be down to our perception of reality. – Memories with Maya

Table of References:

[i] Human Augmentation: bebionic arm - http://bebionic.com/the_hand/patient_stories/nigel_ackland

[1] 10 Reasons to Up Your Orgasm Quotient - http://www.mindbodygreen.com/0-4648/10-Reasons-to-Up-Your-Orgasm-Quotient.html

[2] The Science of Orgasm - http://www.amazon.com/books/dp/080188490X

[3] Gonzo Pornography - https://en.wikipedia.org/wiki/Gonzo_pornography

[4] Separable subsurface scattering rendering - http://dirrogate.com/realtime-photorealistic-human-skin-rendering/

[5] 3D Printing of Body parts - http://inmoov.blogspot.fr/p/assembly-help.html

[6] Programmable Matter; Claytronics - http://www.cs.cmu.edu/~claytronics/claytronics/

[7] Fundawear: Wearable sex underwear - http://www.fundawearreviews.com/

[8] Meta-view: Digital see-through Augmented Reality visor - http://www.meta-view.com/

June 12, 2013

Transhumanism, Eugenics and the Immigration Challenge

Transhumanism, Eugenics and IQ:

The aim of this short essay is not to delve into philosophy, yet on some level that is unavoidable when talking about Transhumanism. An important goal of this movement is the use of technology for the enhancement, uplifting and perhaps even the transcendence of the shortcomings of the human condition. Technology in general seems to be keeping pace with Moore’s law, with Kurzweil’s Law of Accelerating Returns, and with his predictions.

Yet there is an emerging strain of Transhumanists, propelled by radical ideology, who, if left unquestioned, might raise the specter of Eugenics, wreaking havoc and potentially inviting retaliation from the masses. The outcome would be the stymieing of human transcendence. One can only hope that, with physical augmentation technology and advances in biotech, Eugenics will become a thing of the past.

Soon enough, IQ augmentation technology at least will be within the financial reach of the common man, in the form of an on-demand, non-invasive memory and intelligence augmentation device. So will Google Glass, or a similar intelligence augmentation device, forever banish the argument for “intellectual” Eugenics? Read an article on 4 ways that Google Glass makes us Transhuman.

Technology without borders:

There is an essay on IEET titled “The Specter of Eugenics: IQ, White Supremacy, and Human Enhancement.” It makes for interesting reading, as do the comments that follow it.

The following passage from the novel “Memories with Maya” is relevant to that essay.

He took a file out and opened it in front of us. Each paper was watermarked ‘Classified’.

“This is a proposal to regulate and govern the ownership of Dirrogates,” he said.

Krish and I looked at each other, and then we were listening.

“I see it, and I’m sure you both do as well, the immense opportunity there is in licensing Dirrogates to work overseas right at clients’ premises. BPO two point zero like you’ve never seen,” he said. “Our country is a huge business outsourcing destination. Why not have actual Dirrogates working at the client’s facility where they can communicate with other human staff?” – Memories with Maya

A little explanation: in the novel, Dirrogates are Digital Surrogates, avatars of real people driven by markerless performance capture hardware such as a Kinect-like depth camera. Full skeletal and facial tracking animates a person’s Digital Surrogate, which can then be seen by a human wearing an Augmented Reality visor. Thus a human (the Dirrogate operator) is able to “tele-travel” to any location on Earth, given its exact geo-coordinates.

At the chosen destination, another depth camera streams a live, real-time 3D model of the room or location, so the Dirrogate operator can “see” the live humans there, each overlaid with a 3D mesh of themselves and a fitted, video-draped texture map. In essence, each live person is cloaked in a computer-generated mesh created in real time by the depth camera. Consider it idea-seeding for Kinect 2 hackers.
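For Kinect 2 hackers taking up that idea, the very first step is turning each depth frame into 3D points. The sketch below shows only that step, assuming a simple pinhole camera model; the intrinsics (fx, fy, cx, cy) and the function name depth_to_points are illustrative placeholders, not real Kinect 2 calibration values or any SDK’s API, and meshing and texture draping are left out.

```python
# Minimal sketch, assuming a pinhole camera model: back-project a depth image
# into a 3D point cloud. depth_to_points and the intrinsics are hypothetical,
# illustrative names/values, not a Kinect SDK API or real calibration data.
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]],
                    fx: float, fy: float,
                    cx: float, cy: float) -> List[Point3D]:
    """Convert a depth map (metres per pixel, 0 = no reading) to 3D points.

    For pixel column u, row v and depth z, the pinhole model gives:
        x = (u - cx) * z / fx
        y = (v - cy) * z / fy
    (y grows downward, following the image-row convention.)
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):      # v = pixel row index
        for u, z in enumerate(row):      # u = pixel column index
            if z <= 0:                   # skip pixels with no depth reading
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # A tiny 2x2 "depth frame"; the top-right pixel has no reading.
    frame = [[1.0, 0.0],
             [2.0, 2.0]]
    cloud = depth_to_points(frame, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
    print(cloud)  # [(-0.5, -0.5, 1.0), (-1.0, 1.0, 2.0), (1.0, 1.0, 2.0)]
```

A real system would run this on every frame and then fit a surface mesh to the points before draping the colour video over it; those later stages are where most of the hard work lies.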

What would a Dirrogate look like in the real world? The video below shows a crude (non-photo-realistic) Dirrogate entering the real world.

Dirrogates, Immigration and Pseudo Mind-uploads:

This raises the question: if we can have Digital Surrogates, or indeed pseudo mind-uploads inhabiting 3D-printed mechanical surrogate bodies, what is the future of physical borders and immigration policies?

This brings us to a related point: does one need a visa to visit the United States of America in order to “work”?

As an analogy, consider the pseudo mind-upload in the video below.

Does it matter whether the boy is in the same town as the school or in another country?

Now consider a customer service executive, or a potential immigrant from a third-world country, using a pseudo mind-upload to tele-travel to his workplace in downtown New York to “drive” a Google taxi cab until driverless-car AI is perfected.