R. Scott Bakker's Blog, page 20

December 26, 2013

But Philosophers Really Are Insane…

… if one uses the definition of insanity famously attributed to Einstein: “doing the same thing over and over again and expecting different results.”

Gary has posted Wittgenstein’s famous quote on the sanity of philosophers over at Minds and Brains, and I thought it worth a quick holiday riff. On my Blind Brain account, philosophers of mind can be seen as blind mechanics in the workshop of nature, using fingers they barely feel to swap between tools they cannot see to repair breakdowns in their ability to repair. Since they are all blind, they remain convinced they can see everything that needs to be seen, so they continue using pliers to file, hammers to tighten, and screwdrivers to measure, thinking that if they just hold this or that implement just the right way, things can be repaired just enough, maybe.

This picture actually accords with Einstein’s definition quite well. To advert to CogSci-speak: Conscious (System 2) cognition unconsciously engages heuristics adapted to domain-specific problem ecologies. Blind to the fractionate, heuristic character of its resources, it assumes the universal sufficiency of those resources. Conscious (System 2) cognition, accordingly, misapplies heuristics oblivious to those misapplications–it is stymied without the least inkling as to why. Conscious (System 2) cognition thus compulsively repeats these misapplications, over and over and over through the ages.

December 22, 2013

Cognition Obscura (Reprise)

(Originally posted September 24, 2013… Wishing everyone a thoughtful holiday!)

The Amazing Complicating Grain

On July 4th, 1054, Chinese astronomers noticed a ‘guest star’ appear near Zeta Tauri, which remained visible for nearly two years before becoming too faint to be detected by the naked eye. The Chaco Canyon Anasazi also witnessed the event, leaving behind this famous petroglyph:

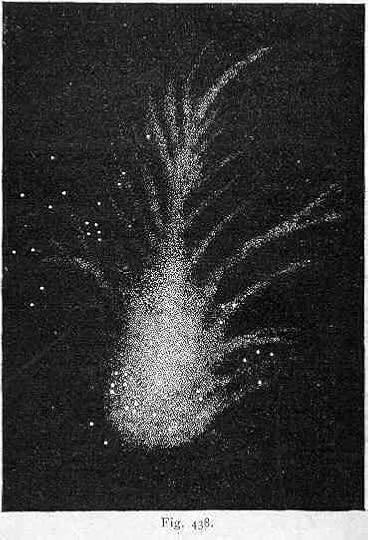

Centuries would pass before John Bevis rediscovered it in 1731. Charles Messier came upon it in 1758, initially confused it with Halley’s Comet, and decided to begin cataloguing ‘cloudy’ celestial objects–or ‘nebulae’–to help astronomers avoid his mistake. In 1844, William Parsons, the Earl of Rosse, made the following drawing of this guest-star-turned-comet-turned-cloudy-celestial-object:

It was on the basis of this diagram that he gave what has since become the most studied extra-solar object in astronomical history its contemporary name: the ‘Crab Nebula.’ When he revisited the object with his 72-inch reflector telescope in 1848, however, he saw something quite different:

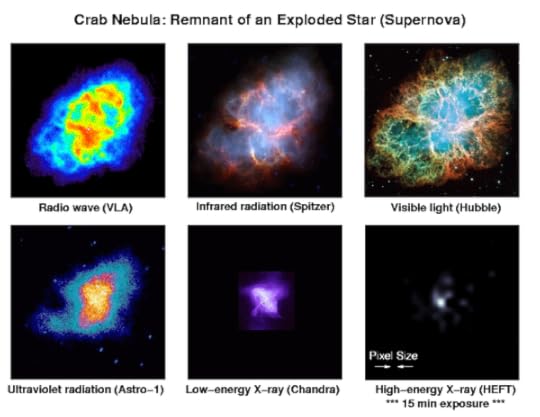

In 1921, John Charles Duncan was able to discern the expansion of the Crab Nebula using the revolutionary capacity of the Mount Wilson Observatory to produce images like this:

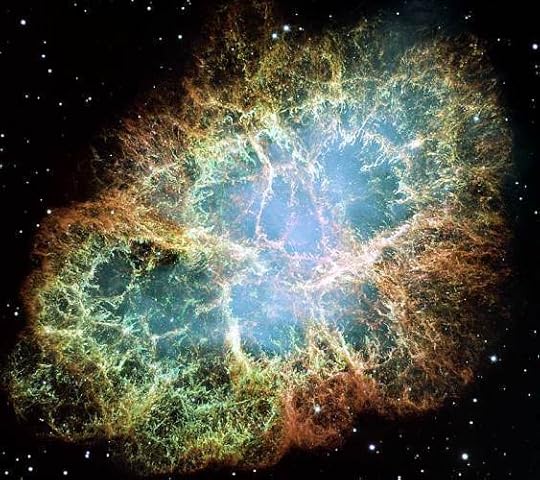

And nowadays, of course, we are regularly dazzled not only by photographs like this:

produced by Hubble, but those produced by a gallery of other observational platforms as well:

The tremendous amount of information produced has provided astronomers with an incredibly detailed understanding of supernovae and nebula formation.

What I find so interesting about this progression lies in what might be called the ‘amazing complicating grain.’ What do I mean by this? Well, there are the myriad ways the accumulation of data feeds theory formation, of course, the way scientific models tend to become progressively more accurate as the kinds and quantities of information accessed increase. But what I’m primarily interested in is what happens when you turn this structure upside down, when you look at the Chinese ‘guest star’ or Anasazi petroglyph against the baseline of what we presently know. What assumptions were made and why? How were those assumptions overthrown? Why were those assumptions almost certain to be wrong?

Why, for instance, did the Chinese assume that SN1054 was simply another star, notable only for its ‘guest-like’ transience? I’m sure a good number of people might think this is a genuinely stupid question: the imperialistic nature of our preconceptions seems to go without saying. The medieval Chinese thought SN1054 was another star rather than a supernova simply because points of light in the sky, stars, were pretty much all they knew. The old provides our only means of understanding the new. This is arguably why Messier first assumed the Crab Nebula was another comet in 1758: it was only when he obtained information distinguishing it (the lack of visible motion) from comets that he realized he was looking at something else, a cloudy celestial object.

But if you think about it, these ‘identification effects’–the ways the absence of systematic differences making systematic differences (or information) underwrite assumptions of ‘default identity’–are profoundly mysterious. Our cosmological understanding has been nothing if not a process of continual systematic differentiation or ever-increasing resolution in the polydimensional sense of the natural. In a peculiar sense, our ignorance is our fundamental medium, the ‘stuff’ from which the distinctions pertaining to actual cognition are hewn.

.

The Superunknown

Another way to look at this transformation of detail and understanding is in terms of ‘unknown unknowns,’ or as I’ll refer to it here, the ‘superunknown’ (cue crashing guitars). The Hubble image and the Anasazi petroglyph not only provide drastically different quantities of information organized in drastically different ways, they anchor what might be called drastically different information ecologies. One might say that they are cognitive ‘tools,’ meaningful to the extent they organize interests and practices, which is to say, possess normative consequences. Or one might say they are ‘representations,’ meaningful insofar as they ‘correspond’ to what is the case. The perspective I want to take here, however, is natural, that of physical systems interacting with physical systems. From this perspective, information our brain cannot access makes no difference to cognition. All the information we presently possess regarding supernova and nebula formation simply was not accessible to the ancient Anasazi or Chinese. As a result, it simply could not impact their attempts to cognize SN-1054. More importantly, not only did they lack access to this information, they also lacked access to any information regarding this lack of information. Their understanding was their only understanding, hedged with portent and mystery, certainly, but sufficient for their practices nonetheless.

The bulk of SN-1054 as we know it, in other words, was superunknown to our ancestors. And, just as the spark-plugs in your garage make no difference to the operation of your car, that information made no cognizable difference to the way they cognized the skies. The petroglyph understanding of the Anasazi, though doubtless hedged with mystery and curiosity, was for them the entirety of their understanding. It was, in a word, sufficient. Here we see the power–if it can be called such–exercised by the invisibility of ignorance. Who hasn’t read ancient myths or even contemporary religious claims and wondered how anyone could have possibly believed such ‘nonsense’? But the answer is quite simple: those lacking the information and/or capacity required to cognize that nonsense as nonsense! They left the spark-plugs in the garage.

Thus the explanatory ubiquity of ‘They didn’t know any better.’ We seem to implicitly understand, if not the tropistic or mechanistic nature of cognition, then at least the ironclad correlation between information availability and cognition. This is one of the cornerstones of what is called ‘mindreading,’ our ability to predict, explain, and manipulate our fellows. And this is how the superunknown, information that makes no cognizable difference, can be said to ‘make a difference’ after all–and a profound one at that. The car won’t run, we say, because the spark-plugs are in the garage. Likewise, medieval Chinese astronomers, we assume, believed SN-1054 was a novel star because telescopes, among other things, were in the future. In other words, making no difference makes a difference to the functioning of complex systems attuned to those differences.

This is the implicit foundational moral of Plato’s Allegory of the Cave: How can shadows come to seem real? Well, simply occlude any information telling you otherwise. Next to nothing, in other words, can strike us as everything there is, short of access to anything more–such as information pertaining to the possibility that there is something more. And this, I’m arguing, is the best way of looking at human metacognition at any given point in time, as a collection of prisoners chained inside the cave of our skull assuming they see everything there is to see for the simple want of information–that the answer lies in here somehow! On the one hand we have the question of just what neural processing gets ‘lit up’ in conscious experience (say, via information integration or EMF effects) given that an astronomical proportion of it remains ‘dark.’ What are the contingencies underwriting what accesses what for what function? How heuristically constrained are those processes? On the other hand we have the problem of metacognition, the question of the information and cognitive resources available for theoretical reflection on the so-called ‘first-person.’ And, once again, what are the contingencies underwriting what accesses what for what function? How heuristically constrained are those processes?

The longer one mulls these questions, the more the concepts of traditional philosophy of mind come to resemble Anasazi petroglyphs–which is to say, an enterprise requiring the superunknown. Placed on this continuum of availability, the assumption that introspection, despite all the constraints it faces, gets enough of the information it needs to at least roughly cognize mind and consciousness as they are becomes, at best, a claim crying out for justification and, at worst, wildly implausible. To say philosophy lacks the information and/or cognitive resources it requires to resolve its debates is a platitude, one so worn as not to chafe any contemplative skin whatsoever. No enemy is safer or more convenient than an old enemy, and skepticism is as ancient as philosophy itself. But to say that science is showing that metacognition lacks the information and/or cognitive resources philosophy requires to resolve its debates is to say something quite a bit more prickly.

Cognitive science is revealing the superunknown of the soul as surely as astronomy and physics are revealing the superunknown of the sky. Whether we move inward or outward the process is pretty much the same, as we should suspect, given that the soul is simply more nature. The tie-dye knot of conscious experience has been drawn from the pot and is slowly being unravelled, and we’re only now discovering the fragmentary, arbitrary, even ornamental nature of what we once counted as our most powerful and obvious ‘intuitions.’

This is how the Blind Brain Theory treats the puzzles of the first-person: as artifacts of illusion and neglect. The informatic and heuristic resources available for cognition at any given moment constrain what can be cognized. We attribute subjectivity to ourselves as well as to others, not because we actually have subjectivity, but because it’s the best we can manage given the fragmentary information we’ve got. Just as the medieval Chinese and Anasazi were prisoners of their technical limitations, you and I are captives of our metacognitive neural limitations.

As straightforward as this might sound, however, it turns out to be far more difficult to conceptualize in the first-person than in astronomy. Where the incorporation of the astronomical superunknown into our understanding of SN-1054 seems relatively intuitive, the incorporation of the neural superunknown into our understanding of ourselves threatens to confound intelligibility altogether. So why the difference?

The answer lies in the relation between the information and the cognitive resources we have available. In the case of SN-1054, the information provided happens to be the very information that our cognitive systems have evolved to decipher, namely, environmental information. The information provided by Hubble, for instance, is continuous with the information our brain generally uses to mechanically navigate and exploit our environments—more of the same. In the case of the first-person, however, the information accessed in metacognition falls drastically short of what our cognitive systems require to conceive us in an environmentally continuous manner. And indeed, given the constraints pertaining to metacognition, the inefficiencies pertaining to evolutionary youth, the sheer complexity of its object, not to mention its structural complicity with its object, this is precisely what we should expect: selective blindness to whole dimensions of information.

So one might visualize the difference between the Anasazi and our contemporary astronomical understanding of SN-1054 as progressive turns of a screw:

where the contemporary understanding can be seen as adding more and more information, ‘twists,’ to the same set of dimensions. The difference between our intuitive and our contemporary neuroscientific understanding of ourselves, on the other hand, is more like:

where our screw is viewed on end instead of from the side, occluding the dimensions constitutive of the screw. The ‘O’ is actually a screw, obviously so, but for the simple want of information appears to be something radically different, something self-continuous and curiously flat, completely lacking empirical depth. Since these dimensions remain superunknown, they quite simply make no metacognitive difference. In the same way additional environmental information generally complicates prior experiential and cognitive unities, the absence of information can be seen as simplifying experiential unities. Paint can become swarming ants, and swarming ants can look like paint. The primary difference with the first-person, once again, is that the experiential simplification you experience, say, watching a movie scene fade to white is ‘bent’ across entire dimensions of missing information—as is the case with our ‘O’ and our screw. The empirical depth of the latter is folded into the flat continuity of the former. On this line of interpretation, the first-person is best understood as a metacognitive version of the ‘flicker fusion’ effect in psychophysics, or the way sleep can consign an entire plane flight to oblivion. You might say that neglect is the sleep of identity.

As the only game in information town, the ‘O’ intuitively strikes us as essentially what we are, rather than a perspectival artifact of information scarcity and heuristic inapplicability. And since this ‘O’ seems to frame the possibility of the screw, things are apt to become more confusing still, with proponents of ‘O’-ism claiming the ontological priority of an impoverished cognitive perspective over ‘screwism’ and its embarrassment of informatic riches, and with proponents of screwism claiming the reverse, but lacking any means to forcefully extend and demonstrate their counterintuitive positions.

One can analogically visualize this competition of framing intuitions as the difference between,

where the natural screw takes the ‘O’ as its condition, and,

where the ‘O’ takes the natural screw as its condition, with the caveat that one understands the margins of the ‘O’ asymptotically, which is to say, as superunknown. A better visual analogy lies in the margins of your present visual field, which is somehow bounded without possessing any visible boundary. Since the limits of conscious cognition always outrun the possibility of conscious cognition, conscious cognition, or ‘thought,’ seems to hang ‘nowhere,’ or at the very least ‘beyond’ the empirical, rendering the notion of ‘transcendental constraint’ an easy-to-intuit metacognitive move.

In this way, one might diagnose the constitutive transcendental as a metacognitive artifact of neglect. A symptom of brain blindness.

This is essentially the ambit of the Blind Brain Theory: to explain the incompatibility of the intentional with the natural in terms of what information we should expect to be available to metacognition. Insofar as the whole of traditional philosophy turns on ‘reflection,’ BBT amounts to a wholesale reconceptualization of the philosophical tradition as well. It is, without any doubt, the most radical parade of possibilities to ever trammel my imagination—a truly post-intentional philosophy—and I feel as though I have just begun to chart the troubling extent of its implicature. The motive of this piece is to simply convey the gestalt of this undiscovered country with enough sideways clarity to convince a few daring souls to drag out their theoretical canoes.

To summarize then: Taking the mechanistic paradigm of the life sciences as our baseline for ontological accuracy (and what else would we take?), the mental can be reinterpreted in terms of various kinds of dimensional loss. What follows is a list of some of these peculiarities and a provisional sketch of their corresponding ‘blind brain’ explanation. I view each of these theoretical vignettes as nothing more than an inaugural attempt, pixelated petroglyphs that are bound to be complicated and refined should the above hunches find empirical confirmation. If you find yourself reading with a squint, I ask only that you ponder the extraordinary fact that all these puzzling phenomena are characterized by missing information. Given the relation between information availability and cognitive reliability, is it simply a coincidence that we find them so difficult to understand? I’ll attempt to provide ways to visualize these sketches to facilitate understanding where I can, keeping in mind the way diagrams both elide and add dimensions.

.

Concept/Intuition - Kind of Informatic Loss/Incapacity

Nowness - Insufficient temporal information regarding the time of information processing is integrated into conscious awareness. Metacognition, therefore, cannot make second-order before-and-after distinctions (or, put differently, is ‘laterally insensitive’ to the ‘time of timing’), leading to the faulty assumption of second-order temporal identity, and hence the ‘paradox of the now’ so famously described by Aristotle and Augustine.

So again, metacognitive neglect means our brains simply cannot track the time of their own operations the way they can track the time of the environment that systematically engages them. Since the absence of information is the absence of distinctions, our experience of time as metacognized ‘fuses’ into the paradoxical temporal identity in difference we term the now.

Reflexivity - Insufficient temporal information regarding the time of information processing is integrated into conscious awareness. Metacognition, therefore, can only make granular second-order sequential distinctions, leading to the faulty metacognitive assumption of mental reflexivity, or contemporaneous self-relatedness (either intentional as in the analytic tradition, or nonintentional as well, as posited in the continental tradition), the sense that cognition can be cognized as it cognizes, rather than always only post facto. Thus, once again, the mysterious (even miraculous) appearance of the mental, since mechanically, all the processes involved in the generation of consciousness are irreflexive. Resources engaged in tracking cannot themselves be tracked. In nature the loop can be tightened, but never cinched the way it appears to be in experience.

Once again, experience as metacognized fuses, consigning vast amounts of information to the superunknown, in this case, the dimension of irreflexivity. The mental is not only flattened into a mere informatic shadow, it becomes bizarrely self-continuous as well.

Personal Identity - Insufficient information regarding the sequential or irreflexive processing of information integrated into conscious awareness, as per above. Metacognition attributes psychological continuity, even ontological simplicity, to ‘us’ simply because it neglects the information required to cognize myriad, and in many cases profound, discontinuities. Just as sleep elides travel, making it seem like you simply ‘awaken someplace else,’ so too does metacognitive neglect occlude any possible consciousness of moment to moment discontinuity.

Conscious Unity - Insufficient information regarding the disparate neural complexities responsible for consciousness. Metacognition, therefore, cannot make the relevant distinctions, and so assumes unity. Once again, the mundane assertion of identity in the absence of distinctions is the culprit. So the character below strikes us as continuous,

X

even though it is actually composite,

simply for want of discriminations, or additional information.

Meaning Holism - Insufficient information regarding the disparate neural complexities responsible for conscious meaning. Metacognition, therefore, cannot make the high-dimensional distinctions required to track external relations, and so mistakes the mechanical systematicity of the pertinent neural structures and functions (such as the neural interconnectivity requisite for ‘winner take all’ systems) for a lower dimensional ‘internal relationality.’ ‘Meaning,’ therefore, appears to be differential in some elusive formal, as opposed to merely mechanical, sense.

Volition - Insufficient information regarding neural/environmental production and attenuation of behaviour integrated into conscious awareness. Unable to track the neurofunctional provenance of behaviour, metacognition posits ‘choice,’ the determination of behaviour ex-nihilo.

Once again, the lack of access to a given dimension of information forces metacognition to rely on an ad hoc heuristic, ‘choice,’ which only becomes a problem when theoretical metacognition, blind to its heuristic occlusion of dimensionality, feeds it to cognitive systems primarily adapted to high-dimensional environmental information.

Purposiveness - Insufficient information regarding neural/environmental production and attenuation of behaviour integrated into conscious awareness. Cognition thus resorts to noncausal heuristics keyed to solving behaviours rather than those keyed to solving environmental regularities—or ‘mindreading.’ Blind to the heuristic nature of these systems, theoretical metacognition attributes efficacy to predicted outcomes. Constraint is intuited in terms of the predicted effect of a given behaviour as opposed to its causal matrix. What comes after appears to determine what comes before, or ‘cranes,’ to borrow Dennett’s metaphor, become ‘skyhooks.’ Situationally adapted behaviours become ‘goal-directed actions.’

Value – Insufficient information regarding neural/environmental production and attenuation of behaviour integrated into conscious awareness. Blind to the behavioural feedback dynamics that effect avoidance or engagement, metacognition resorts to heuristic attributions of ‘value’ to effect further avoidance or engagement (either socially or individually). Blind to the radically heuristic nature of these attributions, theoretical metacognition attributes environmental reality to these attributions.

Normativity - Insufficient information regarding neural/environmental production and attenuation of behaviour integrated into conscious awareness. Cognition thus resorts to noncausal heuristics geared to solving behaviours rather than those geared to solving environmental regularities. Blind to these heuristic systems, deliberative metacognition attributes efficacy or constraint to predicted outcomes. Constraint is intuited in terms of the predicted effect of a given behaviour as opposed to its causal matrix. What comes after appears to determine what comes before. Situationally adapted behaviours become ‘goal-directed actions.’ Blind to the dynamics of those behavioural patterns producing environmental effects that effect their extinction or reproduction (that generate attractors), metacognition resorts to drastically heuristic attributions of ‘rightness’ and ‘wrongness,’ further effecting the extinction or reproduction of behavioural patterns (either socially or individually). Blind to the heuristic nature of these attributions, theoretical metacognition attributes environmental reality to them. Behavioural patterns become ‘rules,’ apparent noncausal constraints.

Aboutness (or Intentionality Proper) - Insufficient information regarding processing of environmental information integrated into conscious awareness. Even though we are mechanically embedded as a component of our environments, outside of certain brute interactions, information regarding this systematic causal interrelation is unavailable for cognition. Forced to cognize/communicate this relation absent this causal information, metacognition resorts to ‘aboutness’. Blind to the radically heuristic nature of aboutness, theoretical metacognition attributes environmental reality to the relation, even though it obviously neglects the convoluted circuit of causal feedback that actually characterizes the neural-environmental relation.

The easiest way to visualize this dynamic is to evince it as,

where the screw diagrams the dimensional complexity of the natural within an apparent frame that collapses many of those dimensions—your present ‘first person’ experience of seeing the figure above. This allows us to complicate the diagram thus,

bearing in mind that the explicit limit of the ‘O’ diagramming your first-person experiential frame is actually implicit or asymptotic, which is to say, occluded from conscious experience as it was in the initial diagram. Since the actual relation between ‘you’ (or your ‘thought,’ or your ‘utterance,’ or your ‘belief,’ and ‘etc.’) and what is cognized/perceived—experienced—outruns experience, you find yourself stranded with the bald fact of a relation, an ineluctable coincidence of you and your object, or ‘aboutness,’

where ‘you’ simply are related to an independent object world. The collapse of the causal dimension of your environmental relatedness into the superunknown requires a variety of ‘heuristic fixes’ to adequately metacognize. This then provides the basis for the typically mysterious metacognitive intuitions that inform intentional concepts such as representation, reference, content, truth, and the like.

Representation – Insufficient information regarding processing of environmental information integrated into conscious awareness. Even though we are mechanically embedded as a component of our environments, outside of certain brute interactions, information regarding this systematic causal interrelation is unavailable for cognition. Forced to cognize/communicate this relation absent this causal information, metacognition resorts to ‘aboutness’. Blind to the radically heuristic nature of aboutness, theoretical metacognition attributes environmental reality to the relation, even though it obviously neglects the convoluted circuit of causal feedback that actually characterizes the neural-environmental relation. Subsequent theoretical analysis of cognition, therefore, attributes aboutness to the various components apparently identified, producing the metacognitive illusion of representation.

Our implicit conscious experience of some natural phenomenon,

replacing the simple unmediated (or ‘transparent’) intentionality intuited in the former with a more complex mediated intentionality that is more easily shoehorned into our natural understanding of cognition, given that the latter deals in complex mechanistic mediations of information.

Truth – Insufficient information regarding processing of environmental information integrated into conscious awareness. Even though we are mechanically embedded as a component of our environments, outside of certain brute interactions, information regarding this systematic causal interrelation is unavailable for cognition. Forced to cognize/communicate this relation absent this causal information, metacognition resorts to ‘aboutness’. Since the mechanical effectiveness of any specific conscious experience is a product of the very system occluded from metacognition, it is intuited as given in the absence of exceptions—which is to say, as ‘true.’ Truth is the radically heuristic way the brain metacognizes the effectiveness of its cognitive functions. Insofar as possible exceptions remain superunknown, the effectiveness of any relation metacognized as ‘true’ will remain apparently exceptionless, what obtains no matter how we find ourselves environmentally embedded—as a ‘view from nowhere.’ Thus your ongoing first-person experience of,

will be implicitly assumed true, period, or exceptionless (sufficient for effective problem solving in all ecologies), barring any quirk of information availability (‘perspective’) that flags potential problem-solving limitations, such as a diagnosis of psychosis, awakening from a nap, the use of a microscope, etc. This allows us to conceive the natural basis for the antithesis between truth and context: as a heuristic artifact of neglect, truth literally requires the occlusion of information pertaining to cognitive function to be metacognitively intuited.

So in terms of our visual analogy, truth can be seen as the cognitive aspect of ‘O,’ how the screw of nature appears with most of its dimensions collapsed, as apparently ‘timeless and immutable,’

for simple want of information pertaining to its concrete contingencies. As more and more of the screw’s dimensions are revealed, however, the more temporal and mutable—contingent—it becomes. Truth evaporates… or so we intuit.

Lacuna Obligata

Given the facility with which Blind Brain Theory allows these concepts to be naturally reconceptualised, my hunch is that many others may be likewise demystified. Aprioricity, for instance, clearly turns on some kind of metacognitive ‘priority neglect,’ whereas abstraction clearly involves some kind of ‘grain neglect.’ It’s important to note that these diagnoses do not impeach the implicit effectiveness of many of these concepts so much as what theoretical metacognition, or ‘philosophical reflection,’ has generally made of them. It is precisely the neglect of information that allows our naive employment of these heuristics to be effective within the limited sphere of those problem ecologies they are adapted to solve. This is actually what I think the later Wittgenstein was after in his attempts to argue the matching of conceptual grammars with language-games: he simply lacked the conceptual resources to see that normativity and aboutness were of a piece. It is only when philosophers, as reliant upon deliberative theoretical metacognition as they are, misconstrue what are parochial problem solvers for more universal ones, that we find ourselves in the intractable morass that is traditional philosophy.

To understand the difference between the natural and the first-person we need a positive way to characterize that difference. We have to find a way to let that difference make a difference. Neglect is that way. The trick lies in conceiving the way the neglect of various dimensions of information dupes theoretical metacognition into intuiting the various structural peculiarities traditionally ascribed to the first-person. So once again, where running the clock of astronomical discovery backward merely subtracts information from a fixed dimensional frame,

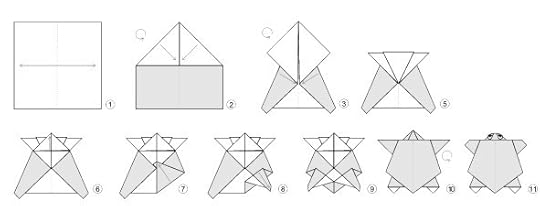

explaining the first-person requires the subtraction of dimensions as well,

that we engage in a kind of ‘conceptual origami’ and conceive the first-person, in spite of its intuitive immediacy, as what the brain looks like when whole dimensions of information are folded away.

And this, I realize, is not easy. Nevertheless, tracking environments requires resources which themselves cannot be tracked, thus occluding cognitive neurofunctionality from cognition—imposing ‘medial neglect.’ The brain thus becomes superunknown relative to itself. Its own astronomical complexity is the barricade that strands metacognitive intuitions with intentionality as traditionally conceived. Anyone disagreeing with this needs to explain how it is human metacognition overcomes this boggling complexity. Otherwise, all the provocative questions raised here remain: Is it simply a coincidence that intentional concepts exhibit such similar patterns of information privation? For instance, is it a coincidence that the curious causal bottomlessness that haunts normativity—the notion of ‘rules’ and ‘ends’ somehow ‘constraining’ via some kind of obscure relation to causal mechanism—also haunts the ‘aiming’ that informs conceptions of representation? Or volition? Or truth?

The Blind Brain Theory says, Nope. If the lack of information, the ‘superunknown,’ is what limits our ability to cognize nature, then it makes sense to assume that it also limits our ability to cognize ourselves. If the lack of information is what prevents us from seeing our way past traditional conceits regarding the world, it makes sense to think it also prevents us from seeing our way past cherished traditional conceits regarding ourselves. If information privation plays any role in ignorance or misconception at all, we should assume that the grandiose edifice of traditional human self-understanding is about to founder in the ongoing informatic Flood…

That what we have hitherto called ‘human’ has been raised upon shades of cognition obscura.

December 16, 2013

Life as Singularity

I’ve had a couple of quite different quotes rattling about in my bean of late, the first hailing from about 150 years ago:

“I do not hesitate to maintain, that what we are conscious of is constructed out of what we are not conscious of–that our whole knowledge, in fact, is made up of the unknown and incognisable.” Sir William Hamilton, Lectures on Metaphysics, p. 348, 1865

And a second from 1999:

“Human consciousness appears to be an emergent brain function that permits an individual to experience a subjective sense of reality. By its nature, consciousness conveys a sense of what is real now as well as what was real in the past. It provides a sense of contiguity for the self and thereby mediates how individuals perceive and deal with the external world.” George P. Prigatano, “Disorders of Behavior and Self-awareness.”

I often marvel thinking about the first simply because of the degree to which subsequent social and cognitive psychological research has borne out what must have seemed a mad claim in the 19th century. The second struck me both because of its pragmatic nature–to discuss problems of awareness you need to at least provisionally define what awareness means–and because of the way it makes no damned sense whatsoever, even though it makes all the sense in the world.

Since “experience a subjective sense of reality” means to be conscious of the world, we can rewrite it as:

“Human consciousness appears to be an emergent brain function that permits an individual to [be conscious of the world]. By its nature, consciousness conveys a sense of what is real now as well as what was real in the past. It provides a sense of contiguity for the self and thereby mediates how individuals perceive and deal with the external world.”

Since the ‘real’ refers to what lies beyond consciousness, and since ‘sense’ means to be conscious of, “consciousness conveys a sense of what is real” can be rephrased as “consciousness conveys a consciousness of what exceeds consciousness,” allowing us to rewrite the passage as:

“Human consciousness appears to be an emergent brain function that permits an individual to [be conscious of the world]. By its nature, [consciousness conveys a consciousness of what exceeds consciousness] now as well as what [exceeds consciousness] in the past. It provides a sense of contiguity for the self and thereby mediates how individuals perceive and deal with the external world.”

Extending this logic of substitution to the last sentence yields:

“Human consciousness appears to be an emergent brain function that permits an individual to [be conscious of the world]. By its nature, [consciousness conveys a consciousness of what exceeds consciousness] now as well as what [exceeded consciousness] in the past. It provides a [consciousness] of contiguity [of consciousness] and thereby mediates how individuals [are conscious of] and deal with [what exceeds consciousness].”

Which is to say, a definition that really doesn’t define that much at all. In fact, it brings to mind another old favourite quote of mine regarding the ‘one-dimensionality of experience,’ how “experience is experience, only experience, and nothing but experience” (Floridi, The Philosophy of Information, 296, 2011). It demonstrates, in other words, the way consciousness seems to only have consciousness to go on. In a sense, this is ‘what is it likeness’ in a nutshell, the apparent inability to explicate the experience of experience short of referencing more experience. It’s important to understand how profound a limitation this is, and how it almost certainly generates profound distortions and illusions as a result.

A good part of BBT can be read as an attempt to naturalistically explain this bewildering characteristic of conscious experience, the fact that it possesses the strange Klein bottle structure that it does. What Hamilton is referring to is consciousness as component, the consciousness that you actually have, where each moment of consciousness possesses vectors of functionality completely orthogonal to what you can become ‘conscious of.’ What Prigatano is referring to is consciousness as metacognized, what consciousness becomes when understood through the lens of itself–the only lens that it has. Information that doesn’t make it to consciousness does not exist for consciousness, which has the effect of rendering consciousness everything that there is, both inside and outside. So what Hamilton is referring to is the outside that lies outside the inside/outside dichotomy. And what Prigatano is referring to is simply everything, as far as consciousness is concerned.

Thus the powerful and pervasive cognitive illusion that I’ve been calling ‘noocentrism.’ Noocentrism can be seen as an inevitable consequence of this latter consciousness, the one everyone thinks needs to be explained even though it doesn’t exist. Consciousness only has itself to credit when attempting to access the origins of whatever flits through its lens. Even though they do all the lifting, Hamilton’s orthogonal vectors of functionality simply do not exist. So consciousness credits itself, makes itself central to its own happening. And herein lies the dilemma for the human species: we’ve raised our entire self-understanding, all that is supposed ‘human,’ upon what is almost certainly a metacognitive illusion.

What does it mean to be something ‘unthinkable’?

December 11, 2013

The Philosopher’s Trilemma (is just the tip of the iceberg)

I just wanted to link another excellent contemplation of BBT and its implications over at noir-realism…

And I also wanted to let you all know that the venerable CBC radio documentary series, Ideas, with Paul Kennedy, will air “The Fool’s Dilemma,” this Thursday, December the 12th, 9PM EST, featuring me and a bunch of people who actually know what they’re talking about. Aside from having a ball doing the interview, I can’t really remember what I talked about (always the sign of a great interviewer). My guess is that I talked about how we should just be happy, follow our heart, make opportunities happen, trust our gut, believe in ourselves, just do it, pray for a better tomorrow, be thankful for what we got, dare to dream, and find ourselves…

Fuck you and goodnight.

December 9, 2013

Death of a Dichotomy

And new philosophy calls all in doubt,

The element of fire is quite put out

–John Donne, “The First Anniversary”

What would it mean for thinking to leave most all the traditional philosophical dichotomies behind? What would it mean for philosophy to become post-intentional, to hew to an idiom that abandons most all our traditional theoretical regimentations of the ‘human’? More specifically, what happens when we come to see subjects and objects as relics of a more superstitious age?

I once had the sorrow of watching encephalitis dismantle the soul of an old friend of mine. I was able to understand the why of what he said and did simply because I had a rough understanding that things were shutting down. The increasingly rote character of his responses suggested a gradual and global degradation of cognitive capacity. The loss of the right-hand side of the world meant left hemispheric damage, especially to the regions involved in the construction of ‘extrapersonal space.’ The flat affect suggested destruction in regions involved in integrating or expressing emotions. These crude assumptions at least seemed enough to make sense of what would otherwise be hard-to-reckon behaviour. Near the end, he had been stripped down to whatever generated his quavering voice, staring out at shadows, saying, without any fear or remorse or anything, “I think I’m dying.” It fucking haunts me still.

Certain behavioural oddities can only be understood by positing pathological losses of some kind. When neurologists make a diagnosis of neglect or anosognosia, they know only a limited number of correlations between various symptoms and neuropathologies. They don’t know the mechanical specifics, but they know that it’s mechanical (and so not a matter of denial), and that it somehow involves the inability of the system to adequately access or process information regarding the cognitive deficit at issue. As Prigatano notes, “Such patients often complain that they ‘cannot believe’ that they have suffered a brain injury because nothing in their experience allows them to believe that a brain injury has actually occurred” (“Disorders of Behavior and Self-awareness”). Though observers have no difficulty distinguishing the deviant patterns of behaviour as deviant, the patient simply cannot consciously access or process that information.

The Blind Brain Theory essentially takes the same morbid attitude to the hitherto intractable question of the first-person. By doing so, it exploits the way information (understood as systematic difference making differences) can be tracked across the neurobiological/phenomenological divide via the idiom of neglect, how one can understand differences in experience in terms of differences in brain function. It then sets out to show how certain brute mechanical limitations on the brain’s capacity to cognize brains–both itself and others–saddle cognition with several profound forms of ‘metacognitive neglect.’ It then posits a variety of ways these forms of neglect compromise and confound our attempts to make sense of what we are. The perennial puzzles of consciousness and intentionality, it argues, can be explained away in terms of the metacognitive inability to access or process the requisite information.

The subject/object dichotomy has been a centerpiece of those ‘explanations away’ these past couple years. When we see the subject/object dichotomy in terms of neglect, it becomes an obvious artifact of how constraints imposed on the way the brain is a component of its environment prevent the brain from metacognizing itself as a component of its environments. Put simply, being the product of an environment renders cognition systematically insensitive to various dimensions of that environment. All of us accordingly suffer from what might be called medial neglect. The first-person perspectival experience that you seem to be enjoying this very moment is itself a ‘product’ of medial neglect. At no point do the causal complexities bound to any fraction of conscious experience arise as such in conscious experience. As a matter of brute empirical fact, you are a component system nested within an assemblage of superordinate systems, and yet, when you reflect, ‘you’ seem to stand opposite the ‘world,’ to be a hanging relation, a living dichotomy, rather than the causal system that you are. Medial neglect is this blindness, the metacognitive insensitivity to our matter-of-fact componency, the fact that the neurofunctionality of experience nowhere appears in experience. In a strange sense, it simply is the ‘transparency of experience,’ an expression of the brain’s utter inability to cognize itself the way it cognizes its natural environments.

On BBT, the basic ‘aboutness’ that the philosophical tradition has sculpted into a welter of wildly divergent forms (Dasein, cogito, transcendental apperception, Idea, deontic scorekeepers, differance, correlation, etc.) is a kind of heuristic, a way to track componential relations absent direct access to those relations. As a heuristic it has an adaptive problem-ecology, a range of problems that it is adapted to solve, and as it turns out, that problem-ecology is pretty easy to delimit, given the kind of information neglected, namely, causal information pertaining to instrumental processes. To say that aboutness is a product of medial neglect is to say that the aboutness heuristic is instrumentally blind, and therefore adapted to functionally independent problem-ecologies. You can repair your lawnmower because its functionality is independent of (runs lateral to) your cognition. You cannot likewise repair your spouse because his or her functionality (fortunately or unfortunately) is not independent of (runs medial to) your cognition–because you are functionally entangled. These problem-ecologies require a different set of heuristics to solve, as do all situations where so-called ‘observer effects’ introduce incalculable ‘blind spot information.’

On BBT, this is why the subject/object dichotomy seems to break down, not only at the quantum scale, but in social, cognitive, and phenomenological problem ecologies as well. If aboutness is adapted to the solution of functionally independent problem-ecologies, then how reliable could ‘thinking about our thinking’ be? This is also why the early Heidegger thought that theoretical focus on the instrumental (as he saw it) dissolved the dichotomy altogether. To focus on the instrumental (or better yet, the medial) is to embody the very enabling dimension aboutness neglects. And this also explains, I think, the severe functional hygiene that characterizes the ‘scientific attitude,’ the need to erase instrumental impact and ‘objectify’ the targets of research. I could go on, but suffice to say, even in just this one respect, BBT casts a very long abductive shadow.

Even after such a brief account, the explosive potential of this way of thinking should be clear. Not only does it provide a naturalistic way to diagnose various philosophical attempts to massage and sculpt our ‘aboutness instinct,’ it opens the door to a thought that surpasses the subject/object dichotomy, a thought that allows us to see this dichotomy for the informatically impoverished heuristic that it is. Because, when all is said and done, that is what the subject/object dichotomy is, a cognitive tool adapted to specific problem-ecologies that we could never intuit as a tool, thanks to metacognitive neglect, and so compulsively took to be a universal problem solver. The long tyranny of the subject/object paradigm, in other words, is the result of a profound self-awareness deficit, a kind of ‘natural anosognosia.’ To think that all this time we were so convinced we could see!

Typical.

If you think about it, any escape from the prisonhouse of traditional philosophy was bound to be enormously destructive. Since the mistake was there from the very beginning of philosophy, it had to be a mistake that every philosopher throughout the history of philosophy had committed–some kind of problem intrinsic to the limitations of that great gift of the Ancient Greeks, theoretical metacognition. What could our inability to solve the problem of ourselves be other than some kind of ‘self-awareness deficit’?

Solving the problem of ourselves, it turns out, requires seeing through what we took ourselves to be–disenchanting nature’s last unclaimed corner. We are vastly complicated, environmentally entangled systems, components, generating signal and noise. No one argues with this, and yet, under the spell of the Only-game-in-town Effect, the vast majority assumes that intentional idioms (folk-psychological or otherwise) are the only viable way to problem-solve in the register of the human. This is just not the case. The post-intentional reinterpretation of the human has scarcely begun, while the rush to economically exploit the mechanicity of the human is well under way. Kant’s Kingdom of Ends is being socially and economically integrated into the Empire of Causes at an ever increasing rate, progressively personalizing the interface with individuals, while consistently depersonalizing the processes informing that interface. In a sense, post-intentional philosophy is a latecomer to the entrenched trends of akratic society.

Besides, as a machine, you are now as big as the cosmos, as old as Evolution, or even the Big Bang–or as young as a string of neural coincidences in your frontal cortex. You are now a genuine part of nature, not something magically bootstrapped. As such, you can zoom in or out when asking the question of the human; you can draft ‘mechanism sketches’ of any component at any scale.

Now you might think you could have done this anyway; there certainly seems to be a rising tide of people attempting to think their way beyond the bourne of intentionality. But the fact is that all these attempts represent a repudiation of a phenomenon that is simply not understood. In creative terms, they are doubtless ground-breaking, but absent any principled, naturalistic understanding of the ground broken, they have no principled way to distinguish the space they occupy as ‘post-intentional.’ Perhaps they have cheated, allowing post hoc importations of the subject/object paradigm to finesse the more notorious problems facing naturalization. The problem isn’t that there is only occultism, edifying verbiage draped about some will to escape, short of some comprehensive way to naturalistically explain away subjectivity; the problem is that there is no way to distinguish between such occultism and any genuinely post-intentional insight.

Even worse, in critical terms these attempts are at sea, bereft of any decisive means of defending themselves against their traditional skeptics, who regard them as more a product of exhaustion than any genuine understanding–and for good reason! Indeed, one would think that a genuine post-intentional philosophy would possess teeth, that it would raise questions that the tradition has no resources to answer, reveal ignorances, even as it clears away the mysteries generated by our past misapprehensions. One would think, in other words, that it would provide a way of thinking that would just as readily unlock the thought of the past as it would lay out possibilities for the future.

How can we talk action without autonomy? How can we think conduct without competence, or instrumentality without ends, or desire without value, or accuracy without truth, or experience without subjectivity? I’m sure many reading this find the prospect either preposterous or monstrous or both. I can’t answer to the charge of monstrosity, because I often think as much myself. But if you take the same patience to the Blind Brain Theory that you take to reading Kant, say, or Wittgenstein, or any philosophy that needs to be understood on its own terms to be understood at all, you will see that these questions, far from preposterous, are well-nigh unavoidable–especially now that we are fully invested in reverse-engineering the ancient, alien technology that we happen to be.

No matter what, the long discursive hegemony of the Grand Dichotomy is over. Traditional theoretical intentional thought now has an other.

The old fires are dying, our eyes are adjusting to the gloom, and we can finally see that we were so much more than faces hanging in the black.

December 5, 2013

The Myth of the Nonexistent Variable

Noocentrism is the intuitive presumption of ‘hanging efficacy,’ the kind of acausal constraint metacognition attributes to aboutness or willing or rule-following or purposiveness.

Metacognitive neglect means that we ‘float’ through our environments blind to the causal systems that actually constrain us and–crucially–blind to this blindness as well. We are thus forced to rely on the various heuristic systems we’ve evolved to manage our environments absent this information, systems that metacognition, in its present acculturated form, tracks as aboutness, willing, rule-following, and purposiveness. Since metacognition cannot track these heuristic systems for the specialized ecomechanisms they in fact are, it assumes a singular cognitive and metacognitive capacity possessing universal scope. Thus the dogmatists and the early modern faith in the adequacy of metacognition. The history of philosophy is the history of throwing ourselves into the metacognitive breach time and again, groping our way forward primarily by our failure to agree. In the Western philosophical tradition, Hume was the first to definitively isolate the constructed nature of experience, and thus the first to make explicit the implicit performative dimension of the first-person. Kant was the first to raise a whole Weltanschauung about it, and an extravagant one at that. Because this is so obviously ‘the way it is’ in philosophy, the tendency is to be blind to what is a truly extraordinary fact: that untutored metacognition is blind to the implicit performative dimension of all thought and experience. What was an inert blank found itself populated by performative blurs. And now, thanks to the sciences of the brain, those blurs are coming into sharper and sharper focus at last.

The third variable problem is the problem of hidden mechanisms. Given the systematicity of our environments, correlations abound. Given the complexity of our environments, the chances of mistaking correlation for causation are high. Your fuel gauge indicates empty and your car coughs to a stop, and so you assume you’ve run out of gas, not knowing that your fuel pump has died. Researchers note a correlation between violent behaviour in youth and broken homes and so assume broken homes primarily cause violent behaviour, not knowing that association with other violent youth is the primary cause. The crazy thing to note here is how the problem of metacognitive neglect is also a problem of hidden mechanisms. In a very real sense, what is presently coming into focus are all the third variables of experience, all the mechanisms actually constraining our thought and behaviour.

In this sense, noocentrism is the myth of the nonexistent variable. The innumerable third variable processes that traverse the whole of conscious experience simply do not exist for conscious experience. Since metacognition is blind to these mechanisms, it can only posit constraints orthogonal to this activity, those belonging, as we saw above, to aboutness, willing, rule-following, and purposiveness. Since metacognition is blind to this orthogonality, the fact that it is positing constraints in the absence of any information regarding what is actually constraining thought and behaviour, the constraints posited suffer a profound version of the Only-game-in-town Effect. Given the mechanical systematicity of the brain, correlations abound in thought and behaviour: conscious experience appears to possess its own orthogonal, noocentric systematicity. Given neglect of the brain’s mechanical systematicity, those correlations appear to be the only game in town, to be at once ‘autonomous’ and the only way the systematicity of thought and behaviour can possibly be cognized. Historically, the bulk of philosophy has been given over to the task of properly describing that orthogonal noocentric systematicity using only the dregs of metacognitive intuition–or ‘reflection.’

Only now, thanks to the fount of information provided by the cognitive sciences, can we see the hopelessness of such a project. Decisions can be tracked prior to a subject’s ability to report them. The feeling of willing can be readily duped and is therefore interpretative. Memory turns out to be fractionate and nonveridical. Moral argumentation is self-promotional rather than truth-seeking. Attitudes appear to be introspectively inaccessible. The feeling of certainty has a dubious connection to rational warrant. The more we learn, the more faulty our traditional intuitions become. It’s as if our metacognitive portrait had been painted across a canvas concealing myriad, intricate folds, and whose kinks and dimensions can only now be teased into visibility. Only now are we discovering just how many of our traditional verities turn on ignorance. The myth of the nonexistent variable can no longer be sustained.

We must learn to intellectually condition our metacognitive sense of ourselves, to dim the bright intuitions of sufficiency and efficacy, and to think thought for what it is, a low-dimensional inkling bound to the back of far more high-dimensional processes. We must appreciate how all thought and behaviour is shot through with hidden variables, how this very exercise pitches within some tidal unknown. And we must see noocentrism as the latest of the great illusions to be overturned by the scientific discovery of the unseen machinery of things.

November 29, 2013

Skyhook Theory: Intentional Systems versus Blind Brains

So I recently finished Michael Graziano’s Consciousness and the Social Brain, and I’m hoping to provide a short review in the near future. Although he comes nowhere near espousing anything resembling the Blind Brain Theory, he does take some steps in its direction, though most of them turn out to be muddled versions of the same steps taken by Daniel Dennett decades ago. Since Dennett’s position remains the closest to my own, I thought it might be worthwhile to show how BBT picks up where Dennett’s Intentional Systems Theory (IST) ends.

Dennett, of course, espouses what might be called ‘evolutionary externalism’: meaning not only outruns any individual brain, it outruns any community of brains as well, insofar as both are the products of the vast ‘design space’ of evolution. As he writes:

“There is no way to capture the semantic properties of things (word tokens, diagrams, nerve impulses, brain states) by a micro-reduction. Semantic properties are not just relational but, you might say, super-relational, for the relation a particular vehicle of content, or token, must bear in order to have content is not just a relation it bears to other similar things (e.g., other tokens, or parts of tokens, or sets of tokens, or causes of tokens) but a relation between the token and the whole life–and counterfactual life–of the organism it ‘serves’ and that organism’s requirements for survival and its evolutionary ancestry.” The Intentional Stance, 65

Now on Dennett’s evolutionary account we always already find ourselves caught up in this super-relational totality: we attribute mind or meaning as a way to manage the intractable complexities of our natural circumstance. The patterns corresponding to ‘semantic properties’ are perspectival, patterns that can only be detected from positions embedded within the superordinate system of systems tracking systems. As Don Ross excellently puts it:

“A stance is a foregrounding of some (real) systematically related aspects of a system or process against a compensating backgrounding of other aspects. It is both possible and useful to pick out these sets of aspects because (as a matter of fact) the boundaries of patterns very frequently do not correspond to the boundaries of the naive realist’s objects. If they always did correspond, the design and intentional stances would be worthless, though there would have been no selection pressure to design a community in which this could be thought; and if they never corresponded, the physical stance, which puts essential constraints on reasonable design- and intentional-stance accounts, would be inaccessible. Because physical objects are stable patterns, there is a reliable logical basis for further order, but because many patterns are not coextensive with physical objects (in any but a trivial sense of ‘‘physical object’’), a sophisticated informavore must be designed to, or designed to learn to, track them. To be a tracker of patterns under more than one aspectualization is to be a taker of stances.” Dennett’s Philosophy, 20-21.

Dennett, in other words, is no mere instrumentalist. Attributions of mind, he wants to argue, are real enough given the reality of the patterns they track – the reality that renders the behaviour of certain systems predictable. The fact that some patterns can only be picked out perspectivally in no way impugns the reality of those patterns. But certainly the burning question then becomes one of how Dennett’s intentional stance manages to do this. As Robert Cummins writes, “I have doubts, however, about Dennett’s ‘intentional systems theory’ that would have us indulge in such characterization without worrying about how the intentional characterizations in question relate to characterization based on explicit representation” (The World in the Head, 87). How can a system ‘take the intentional stance’ in the first place? What is it about systems that renders them explicable in intentional terms? How does this relation between these capacities ‘to take as’ and ‘to be taken as’ leverage successful prediction? These would seem to be both obvious and pressing questions. And yet Dennett replies,

“I propose we simply postpone the worrisome question of what really has a mind, about what the proper domain of the intentional stance is. Whatever the right answer to this question is – if it has a right answer – this will not jeopardize the plain fact that the intentional stance works remarkably well as a prediction method in these other areas, almost as well as it works in our daily lives as folk psychologists dealing with other people. This move of mine annoys and frustrates some philosophers, who want to blow the whistle and insist on properly settling the issue of what a mind, a belief, a desire is before taking another step. Define your terms, sir! No, I won’t. That would be premature. I want to explore first the power and extent of application of this good trick, the intentional stance.” Intuition Pumps, 79

Dennett goes on to describe this strategy as one of ‘nibbling,’ but he never really explains what makes it a good strategy aside from murky suggestions that sinking our teeth into the problem is somehow ‘premature.’ To me this sounds suspiciously like trying to make a virtue out of ignorance. I used to wonder why Dennett has consistently refused to push his imagination past the bourne of IST, why he would cling to intentional stances as his great unexplained explainer. Has he tried, only to retreat baffled, time and again? Or does he really believe his ‘prematurity thesis,’ that the time, for some unknown reason, is not ripe to press forward with a more detailed analysis of what makes intentional stances tick? Or has he planted his flag on this particular boundary and forsworn all further imperial ambitions for more political reasons (such as blunting the charge of eliminativism)?

Perhaps there’s an element of truth to all three of these scenarios, but more and more, I’m beginning to think that Dennett has simply run afoul of the very kind of deceptive intuition he is so prone to pull out of the work of others. Since this gaffe is a gaffe, it prevents him from pushing IST much further than he already has. But since this gaffe allows him to largely avoid the eliminativist consequences his view would have otherwise, he really has no incentive to challenge his own thinking. If you’re going to get stuck, better the top of some hill.

BBT, for its part, agrees with the bulk of the foregoing. Since the mechanical complexities of brains so outrun the cognitive capacities of brains, managing brains (others’ or our own) requires a toolbox of very specialized tools, ‘fast and frugal’ heuristics that enable us to predict/explain/manipulate brains absent information regarding their mechanical complexities. What Dennett calls ‘taking the intentional stance’ occurs whenever conditions trigger the application of these heuristics to some system in our environment.

But because heuristics systematically neglect information, they find themselves bound to specific sets of problems. The tool analogy is quite apt: it’s awful hard to hammer nails with a screwdriver. Thus the issue of the ‘proper domain’ that Dennett mentions in the above quote: to say that intentional problem-solving is heuristic is to say that it possesses a specific problem-ecology, a set of environments possessing the information structure that a given heuristic is adapted to solve. And this is where Dennett’s problems begin.

Dennett agrees that when we adopt the intentional stance we’re “finessing our ignorance of the details of the processes going on in each other’s skulls (and in our own!)” (Intuition Pumps, 83), but he fails to consider this ‘ignorance of the details’ in any detail. He seems to assume, rather, that these heuristics are merely adapted to the management of complexity. Perhaps this is why he never rigorously interrogates the question of whether the ‘intentional stance’ itself belongs to the set of problems the intentional stance can effectively solve. For Dennett, the fact that the intentional stance picks out ‘real patterns’ is warrant enough to take what he calls the ‘stance stance,’ or the intentional characterization of intentional characterization. He doesn’t think that ‘finessing our ignorance’ poses any particular problem in this respect.

As we saw above, BBT characterizes the intentional stance in mechanical terms, as the environmentally triggered application of heuristic devices adapted to solving social problem-ecologies. From the standpoint of IST, this amounts to taking another kind of stance, the ‘physical stance.’ From the standpoint of BBT, this amounts to the application of heuristic devices adapted to solving causal problem-ecologies, or in other words, the devices underwriting the mechanical paradigm of the natural sciences.

So what’s the difference? The first thing to note is that both ways of looking at things, heuristic application versus stance taking, involve neglect or the ‘finessing of ignorance.’ Heuristic application as BBT has it counts as what Carl Craver would call a ‘mechanism sketch,’ a mere outline of what is an astronomically more complicated picture. In fact, one of the things that make causal cognition so powerful is the way it can be reliably deployed across multiple ‘levels of description’ without any mystery regarding how we get from one level to the next. Mechanical thinking, in other words, allows us to ignore as much or as little information as we want (a point Dennett never considers to my knowledge).

This means the issue between IST and BBT doesn’t so much turn on the amount of information neglected as the kinds of information neglected. And this is where the superiority of the latter, mechanical characterization leaps into view. Intentional cognition, as we saw, turns on heuristics adapted to solving problems in the absence of causal information. This means that taking the ‘stance stance,’ as Dennett does, effectively shuts out the possibility of mechanically understanding IST.

IST thus provides Dennett with a kind of default ‘skyhook,’ a way to tacitly install intentionality into ‘presupposition space.’ This way, he can always argue with the intentionalist that the eliminativist necessarily begs the very intentionality they want to eliminate. If BBT argues that heuristic application is what is really going on (because, well, it is – and by Dennett’s own lights no less!), IST can argue that this is simply one more ‘stance,’ and so yank the issue back onto intentional ground (a fact that Brandom, for instance, exploits to bootstrap his inferentialism).

But as should be clear, it becomes difficult at this point to understand precisely what IST is even a theory about. On BBT, no one ever has or ever will ‘take an intentional stance.’ What we do is rely on certain heuristic systems adapted to certain problem-ecologies. The ‘intentional stance,’ on this account, is what this heuristic reliance looks like when we rely on those self-same heuristics to solve it. Doubtless there are a variety of informal problem-ecologies that can be effectively solved by taking the IST approach (such as blunting charges of eliminativism). But for better or worse, the natural scientific question of what is going on when we solve via intentionality is not one of them. Obviously so, one would think.

November 27, 2013

“Reinstalling Eden”

So several months back I was going through my daily blog roll and I noticed that Eric Schwitzgebel, a well-known skeptic and philosopher of mind, had posted a small fictional piece on Splintered Minds dealing with the morality of creating artificial consciousnesses. Forget 3D printing: what happens when we develop the power to create ‘secondary worlds’ filled with sentient and sapient entities, our own DIY Matrices, in effect? I’m not sure why, but for some reason, a sequel to his story simply leapt into my head. Within 20 minutes or so I had broken one of the more serious of the Ten Blog Commandments: ‘Thou shalt not comment to excess.’ But, as is usually the case, my exhibitionism got the best of me, and I posted it, entirely prepared to apologize for my breach of e-etiquette if need be. As it turned out, Eric loved the piece, so much so he emailed me suggesting that we rewrite both parts for possible publication. Since looooonnng form fiction is my area of expertise, I contacted a friend of mine, Karl Schroeder, asking him what kind of venue would be appropriate, and he suggested we pitch Nature – [cue heavenly choir] – which has a coveted page dedicated to short pieces of speculative fiction.

And lo, it came to pass – largely thanks to Eric, who looked after all the technical details, and who was able to cajole the subpersonal herd of cats I call my soul into actually completing something short for a change. The piece can be found here. And be warned that, henceforth, anyone who trips me up on some point of reason will be met with, “Oh yeeeah. Like, I’m published in Nature, maan.”

‘Cause as we all know, Nature rocks.

November 22, 2013

God Decays and Bakker Speaks, But Not In Any Particular Order

Aside from apologizing for my absence of late, I have a couple of announcements.

First and foremost, Ben Cain, a regular guest-blogger here at TPB, has turned his undead mind to the living dead with his first novel, God Decays. I fear I haven’t had a chance to read it yet, but Ben’s writing both here and on his blog, Rants Within the Undead God, should be more than recommendation enough. The man is both brilliant and twisted.


Also, for those within striking distance, I’m giving a public talk at The University of Western Ontario sponsored by the Creative Writing Club and the Arts and Humanities Students’ Council entitled, “Technology and the Death of the Literary Ecosystem,” where I’ll be arguing, among other things, that literature, understood in any meaningful sense, only ever happens in books like Ben’s anymore. It’s scheduled for next Wednesday, November 27th, at 6pm in University College, Room 224A…

Which, I just realized, is also my PS3 hockey night… Fuck. Sorry boys.

October 15, 2013

Godelling in the Valley

“Either mathematics is too big for the human mind or the human mind is more than a machine” – Kurt Gödel


Okay, so this is purely speculative, but it is interesting, and I think worthwhile farming out to brains far better trained than mine.

So BBT suggests that the ‘a priori’ is best construed as a kind of cognitive illusion, a consequence of the metacognitive opacity of those processes underwriting those ‘thoughts’ we are most inclined to call ‘analytic’ and ‘a priori.’ The necessity, abstraction, and internal relationality that seem to characterize these thoughts can all be understood in terms of information privation, the consequence of our metacognitive blindness to what our brain is actually doing when we engage in things like mathematical cognition. The idea is that our intuitive sense of what it is we think we’re doing when we do math—our ‘insights’ or ‘inferences,’ our ‘gists’ or ‘thoughts’—is fragmentary and deceptive, a drastically blinkered glimpse of astronomically complex, natural processes.

The ‘a priori,’ on this view, characterizes the inscrutability, rather than the nature, of mathematical cognition. Even without empirical evidence of unconscious processing, mathematical reasoning has always been deeply mysterious, apparently the most certain form of cognition when performed, and yet perennially resistant to decisive second order reflection. We can do it well enough—well enough to radically transform the world when applied in concert with empirical observation—and yet none of us can agree on just what it is that’s being done.

On BBT, our various second-order theoretical interpretations of mathematics are chronically underdetermined for the same reason any theoretical interpretation in science is underdetermined: the lack of information. What dupes philosophers into transforming this obvious epistemic vice into a beguiling cognitive virtue is simply the fact that we also lack any information pertaining to the lack of this information. Since they have no inkling that their murky inklings involve ‘murkiness’ at all, they simply assume the sufficiency of those inklings.