Michael Greger's Blog

September 11, 2025

The Validity of SIBO Tests

Even if we could accurately diagnose small intestinal bacterial overgrowth (SIBO), if there is no difference in symptoms between those testing positive and those testing negative, what’s the point?

Gastrointestinal symptoms like abdominal pain and bloating account for millions of doctor visits every year. One of the conditions that may be considered for such a “nonspecific presentation” of symptoms is SIBO, a concept that “has gained popularity on the internet in addition to certain clinical and research circles.” SIBO is “broadly defined as excessive bacteria in the small intestine” and typically treated with antibiotics, but “dispensing antibiotics to patients with the nonspecific, common symptoms associated with SIBO is not without risks,” such as the fostering of antibiotic resistance, the emergence of side effects, and the elimination of our good bacteria that could set us up for an invasion of bad bugs like C. diff—all for a condition that may not even be real.

Even alternative medicine journals admit that SIBO is being overdiagnosed, creating “confusion and fear.” SIBO testing “is overused and overly relied upon. Diagnoses are often handed out quickly and without adequate substantiation. Patients can be indoctrinated into thinking SIBO is a chronic condition that can not be cleared and will require lifelong management. This is simply not true for most and is an example of the damage done by overzealousness.” “The ‘monster’ that we now perceive SIBO to be may be no more than a phantom.”

The traditional method for a diagnosis was a small bowel aspiration, an invasive test where a long tube is snaked down the throat to take a sample and count the bugs down there, as you can see at 2:10 in my video Are Small Intestinal Bacterial Overgrowth (SIBO) Tests Valid?.

This method has been almost entirely replaced by breath tests. Normally, a sugar called glucose is almost entirely absorbed in the small intestine, so it never makes it down to the colon. Therefore, signs of bacterial fermentation of that sugar suggest there are bacteria in the small intestine. Fermentation can be detected because the bacteria produce specific gases that get absorbed into our bloodstream before being exhaled from our lungs, where they can be measured with a breathalyzer-type machine.

Previously, the sugar lactulose was used, but “lactulose breath tests do not reliably detect the overgrowth of bacteria,” so researchers switched to glucose. However, when glucose was finally put to the test, it didn’t work. The bacterial load in the small intestine was similar for those testing positive or negative, so that wasn’t a useful test either. It turns out that glucose can make it down to the colon after all.

Researchers labeled the glucose dose with a tracer and found that nearly half of the positive results from glucose breath tests were false positives because individuals were just fermenting it down in their colon, where our bacteria are supposed to be. So, “patients who are incorrectly labeled with SIBO may be prescribed multiple courses of antibiotics” for a condition they don’t even have.

Why do experts continue to recommend breath testing? Could it be because the “experts” were at a conference supported by a breath testing company, and most had personally received funds from SIBO testing or antibiotic companies?

Even if we could properly diagnose SIBO, does it matter? Among people with digestive symptoms, the reported rates of positive SIBO tests vary enormously, from approximately 4 percent to 84 percent. Researchers “found there to be no difference in overall symptom scores between those testing positive against those testing negative for SIBO…” So, a positive test result could mean anything. Who cares if some people have bacteria growing in their small intestines if it doesn’t correlate with symptoms?

Now, antibiotics can make people with irritable bowel-type symptoms who have been diagnosed with SIBO feel better. Does that prove SIBO was the cause? No, because antibiotics can make just as many people feel better who are negative for SIBO. Currently, the antibiotic rifaximin is most often used for SIBO, but it is “not currently FDA-approved for use in this indication, and its cost can be prohibitive.” (The FDA is the U.S. Food and Drug Administration.) In fact, no drug has been approved for SIBO in the United States or Europe, so even with good insurance, it may cost as much as $50 a day in out-of-pocket expenses, and the course is typically two weeks.

What’s more, while antibiotics may help in the short term, they may make matters worse in the long term. Those “who are given a course of antibiotics are more than three times as likely to report more bowel symptoms 4 months later than controls.” So, what can we do for these kinds of symptoms? That’s exactly what I’m going to turn to next.

September 9, 2025

Preventing Hair Loss and Promoting Hair Growth

In every grade school class photo, I seem to have a mess of tousled hair on my head. No matter how much my mom tried to tame my hair, it was a little unruly. (I sported the windblown look without even trying.) Later came my metalhead phase, with headbangable hair down to the middle of my back. Sadly, though, like many of the men in my family, it started to thin, then disappear. Studies show that by age 50, approximately half of men and women will experience hair loss. Why do some lose their hair and others don’t? How can we preserve the looks of our locks?

What Causes Hair Loss?

As I discuss in my video Supplements for Hair Growth, we don’t lose our hair by washing or brushing it too much––two of the many myths out there. The majority of hair loss with age is genetic for both women and men. Based on twin studies, the heritability of baldness in men is 79%, meaning that about 80% of the differences in hair loss among men are genetically determined, but that leaves some wiggle room.

Consider identical twins, for instance. Identical twin sisters, despite having the same DNA, had different amounts of hair loss, linked to increased stress, more smoking, having more children, or a history of high blood pressure or cancer.

Indeed, smoking can contribute to the development of both male and female pattern baldness because the genotoxic compounds in cigarettes may damage the DNA in our hair follicles and cause microvascular poisoning at their base.

Other toxic agents associated with hair loss include mercury; it seems to concentrate about 250-fold in growing scalp hair. William Shakespeare may have started losing his hair due to mercury poisoning from syphilis treatment. Thankfully, doctors don’t give their patients mercury anymore. These days, as the Centers for Disease Control and Prevention points out, mercury mainly enters the body through seafood consumption.

Consider this: A woman went to her physician, concerned about her hair loss. Blood tests indicated elevated mercury levels, which makes sense as her diet was high in tuna. When she stopped eating tuna, her mercury levels fell and her hair started to grow back within two months. After seven months on a fish-free diet, her hair completely regrew. Doctors should consider screening for mercury toxicity when they see hair loss.

How to Prevent Hair Loss

In addition to not smoking, managing our stress, and avoiding seafood, is there anything else we can do to prevent hair loss?

We can make sure we don’t have scurvy, the disease caused by severe vitamin C deficiency. We’ve known for centuries that scurvy can cause hair loss, but once we have enough vitamin C so our gums aren’t bleeding, there are no data correlating vitamin C levels and hair loss. So, just make sure you get a baseline sufficiency of vitamin C.

Foods for Our Hair

What about foods for hair loss? What role might diet play in the treatment of hair loss?

As I discuss in my video Food for Hair Growth, population studies have found that male pattern baldness is associated with poor sleeping habits and the consumption of meat and junk food, whereas protective associations were found for the consumption of raw vegetables, fresh herbs, and soy milk. Drinking soy beverages on a weekly basis was associated with 62% lower odds of moderate to severe hair loss, raising the possibility that there may be compounds in plants that may be protective.

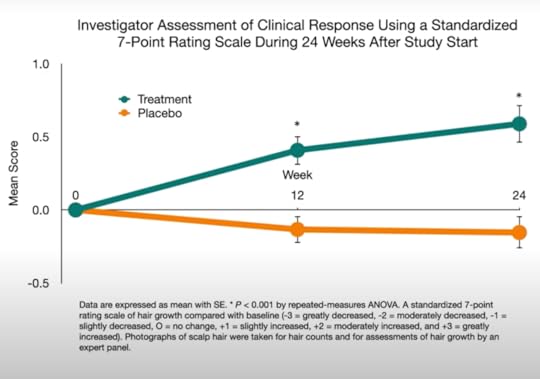

A randomized, double-blind, placebo-controlled study of compounds in hot peppers and soy found that they significantly promoted hair growth, and the doses used were reasonable: 6 milligrams of capsaicin and 75 milligrams of isoflavones a day. How does that translate into actual food? We can get 6 milligrams of capsaicin in just a quarter of a fresh jalapeño pepper and 75 milligrams of isoflavones in just three-quarters of a cup of tempeh or soybeans.

Researchers also investigated pumpkin seeds and hair loss. For 24 weeks, 76 men with male pattern baldness took either 400 milligrams of pumpkin seed oil a day hidden in capsules (the equivalent of eating about two and a half pumpkin seeds a day) or placebo capsules. At the end of the treatment period, self-rated improvement and satisfaction scores in the pumpkin group were higher, and they objectively had more hair: a 40% increase in hair counts, compared to only 10% in the placebo group. In the pumpkin group, 95% remained either unchanged or improved, whereas in the control group, more than 90% remained unchanged or worsened. Given such a pronounced effect, there was concern about sexual side effects, but researchers looked before and after at an index of erectile dysfunction and found no evidence of adverse effects.

The most common ingredient in top-selling hair loss products is vitamin B7, also known as biotin. Biotin deficiency causes hair loss, but there are no evidence-based data that supplementing biotin promotes hair growth. And severe biotin deficiency in healthy individuals eating a normal diet has never been reported. However, if you eat raw egg whites, you can acquire a biotin deficiency, since raw egg whites contain a protein called avidin that binds to biotin and prevents it from being absorbed. Other than rare deficiency syndromes, though, it’s a myth that biotin supplements increase hair growth.

Can we just adopt the attitude that it can’t hurt, so we might as well see if it helps? No, because there is a lack of regulatory oversight of the supplement industry and, in the case of biotin, interference with lab tests. Many dietary supplements promoted for hair health contain biotin levels up to 650 times the recommended daily intake of biotin. And excess biotin in the blood can wreak havoc on a bunch of different blood tests, including thyroid function, other hormone tests (including pregnancy), and the test performed to determine if you’ve had a heart attack––so it could potentially even be life or death.

Do Hair Growth Pills Really Work?

What about drugs? We have good evidence of efficacy only for the two drugs approved by the U.S. Food and Drug Administration: finasteride, sold as Propecia, and minoxidil, sold as Rogaine. It’s considered a myth that all the patented hair-loss supplements on the market will increase hair growth. And they may actually be more expensive, with over-the-counter supplement regimens costing as much as $1,000 or more a year, whereas the drugs may cost only $100 to $300 a year. As I discuss in my video Pills for Hair Growth, the drugs can help, but they can also cause side effects. Propecia can diminish libido and cause sexual dysfunction, and it has been associated with impotence, testicular pain, and breast enlargement, while topical minoxidil can cause itching, for example.

How do they work (if they work at all)? Androgens are the principal drivers of hair growth in both men and women. Testosterone is the primary androgen circulating in the blood, and it can be converted to dihydrotestosterone, which is even more powerful, by an enzyme called 5-alpha reductase. That’s the enzyme that is blocked by Propecia, so it inhibits the souping up of testosterone. This is why pre-menopausal women are not supposed to take it, since it could feminize male fetuses, whereas for men, it has sexual side effects like erectile dysfunction, which can persist for years after stopping the medication and may even be permanent. Indeed, up to 20% of people reported persistent sexual dysfunction for six or more years after stopping the drug, suggesting the possibility that it may never go away.

Pass on the Pills and Reach for a Fork

Given the side effects of the current drug options, I encourage you to incorporate hair-friendly foods in your daily routine.

September 4, 2025

Do Fruits and Vegetables Boost Our Mood?

A randomized controlled trial investigates diet and psychological well-being.

“Psychological health can be broadly conceptualized as comprising 2 key components: mental health (i.e., the presence or absence of mental health disorders such as depression) and psychological well-being (i.e., a positive psychological state, which is more than the absence of a mental health disorder),” and that is the focus of an “emerging field of positive psychology [that] focuses on the positive facts of life, including happiness, life satisfaction, personal strengths, and flourishing.” This may translate to physical “benefits of enhanced well-being, including improvements in blood pressure, immune competence, longevity, career success, and satisfaction with personal relationships.”

What is “The Contribution of Food Consumption to Well-Being,” the title of an article in Annals of Nutrition & Metabolism? Studies have “linked the consumption of fruits and vegetables with enhanced well-being.” A systematic review of research found evidence that fruit and vegetable intake “was associated with increased psychological well-being.” Only an association?

There is “a famous criticism in this area of research—namely, that deep-down personality or family upbringing might lead people simultaneously to eat in a healthy way and also to have better mental well-being, so that diet is then merely correlated with, but incorrectly gives the appearance of helping to cause, the level of well-being.” However, recent research circumvented this problem by examining if “changes in diet are correlated with changes in mental well-being”—in effect, studying the “Evolution of Well-Being and Happiness After Increases in Consumption of Fruit and Vegetables.” As you can see below and at 1:37 in my video Fruits and Vegetables Put to the Test for Boosting Mood, as individuals began eating more fruits and veggies, there was a straight-line increase in their change in life satisfaction over time.

“Increased fruit and vegetable consumption was predictive of increased happiness, life satisfaction, and well-being. They were up to 0.24 life-satisfaction points (for an increase of 8 portions a day), which is equal in size to the psychological gain of going from unemployment to employment.” (My Daily Dozen recommendation is for at least nine servings of fruits and veggies a day.)

That study was done in Australia. It was repeated in the United Kingdom, and researchers found the same results, though Brits may need to bump up their daily minimum consumption of fruits and vegetables to more like 10 or 11 servings a day.

As researchers asked in the title of their paper, “Does eating fruit and vegetables also reduce the longitudinal risk of depression and anxiety?” Improved well-being is nice, but “governments and medical authorities are often interested in the determinants of major mental ill-health conditions, such as depression and high levels of anxiety, and not solely in a more typical citizen’s level of well-being”—for instance, not just life satisfaction. And, indeed, using the same dataset but instead looking for mental illness, researchers found that “eating fruit and vegetables may help to protect against future risk of clinical depression and anxiety,” as well.

A systematic review and meta-analysis of dozens of studies found “an inverse linear association between fruit or vegetable intake and risk of depression, such that every 100-gram increased intake of fruit was associated with a 3% reduced risk of depression,” about half an apple. Yet, “less than 10% of most Western populations consume adequate levels of whole fruits and dietary fiber, with typical intake being about half of the recommended levels.” Maybe the problem is we’re just telling people about the long-term benefits of fruit intake for chronic disease prevention, rather than the near-immediate improvements in well-being. Maybe we should be advertising the “happiness” gains. Perhaps, but we first need to make sure they’re real.

We’ve been talking about associations. Yes, “a healthy diet may reduce the risk of future depression or anxiety, but being diagnosed with depression or anxiety today could also lead to lower fruit and vegetable intake in the future.” Now, in these studies, we can indeed show that the increase in fruit and vegetable consumption came first, and not the other way around, but as the great Enlightenment philosopher David Hume pointed out, just because the rooster crows before the dawn doesn’t mean the rooster caused the sun to rise.

To prove cause and effect, we need to put it to the test with an interventional study. Unfortunately, to date, many studies have compared fruit to chocolate and chips, for instance. Indeed, study participants randomized to eat fruit showed significant improvements in anxiety, depression, fatigue, and emotional distress, which is amazing, but that was compared to chocolate and potato chips, as you can see below and at 4:26 in my video. Apples, clementines, and bananas making people feel better than assorted potato chips and chunky chocolate wafers is not exactly a revelation.

This is the kind of study I’ve been waiting for: a randomized controlled trial in which young adults were randomized to one of three groups—a diet-as-usual group, a group encouraged to eat more fruits and vegetables, or a third group given two servings of fruits and vegetables a day to eat in addition to their regular diet. Those in the third group “showed improvements to their psychological well-being with increases in vitality, flourishing, and motivation” within just two weeks. However, simply educating people to eat their fruits and vegetables may not be enough to reap the full rewards, so perhaps greater emphasis needs to be placed on providing people with fresh produce—for example, offering free fruit to people when they shop. I know that would certainly make me happy!

September 2, 2025

Taking Advantage of Sensory-Specific Satiety

How can we use sensory-specific satiety to our advantage?

When we eat the same foods over and over, we become habituated to them and end up liking them less. That’s why the “10th bite of chocolate, for example, is desired less than the first bite.” We have a built-in biological drive to keep changing up our foods so we’ll be more likely to hit all our nutritional requirements. The drive is so powerful that even “imagined consumption reduces actual consumption.” When study participants imagined again and again that they were eating cheese and were then given actual cheese, they ate less of it than those who had imagined eating cheese fewer times, imagined eating a different food (such as candy), or did not imagine eating the food at all.

Ironically, habituation may be one of the reasons fad “mono diets,” like the cabbage soup diet, the oatmeal diet, or meal replacement shakes, can actually result in better adherence and lower hunger ratings compared to less restrictive diets.

In the landmark study “A Satiety Index of Common Foods,” in which dozens of foods were put to the test, boiled potatoes were found to be the most satiating food. Two hundred and forty calories of boiled potatoes were found to be more satisfying in terms of quelling hunger than the same number of calories of any other food tested. In fact, no other food even came close, as you can see below and at 1:14 in my video Exploiting Sensory-Specific Satiety for Weight Loss.

No doubt the low calorie density of potatoes plays a role. To consume 240 calories, you’d have to eat nearly one pound of potatoes, compared to just a few cookies. You’d have to eat even more apples, grapes, or oranges, yet each fruit was about 40 percent less satiating than potatoes, as shown here and at 1:45 in my video. So, an all-potato diet would probably take the gold—the Yukon gold—for the most bland, monotonous, and satiating diet.

A mono diet, where only one food is eaten, is the poster child for unsustainability—and thank goodness for that. Over time, such diets can lead to serious nutrient deficiencies, such as blindness from vitamin A deficiency in the case of white potatoes.

The satiating power of potatoes can still be brought to bear, though. Boiled potatoes beat out rice and pasta as a satiating side dish, cutting up to about 200 calories of intake off a meal. Compared to boiled and mashed potatoes, fried french fries or even baked fries do not appear to have the same satiating impact.

To exploit habituation for weight loss while maintaining nutrient abundance, we could limit the variety of unhealthy foods we eat while expanding the variety of healthy foods. In that way, we can simultaneously take advantage of the appetite-suppressing effects of monotony while diversifying our fruit and vegetable portfolio. Studies have shown that a greater variety of calorie-dense foods, like sweets and snacks, is associated with excess body fat, but a greater variety of vegetables appears protective. When presented with a greater variety of fruit, offered a greater variety of vegetables, or given a greater variety of vegetable seasonings, people may consume a greater quantity, crowding out less healthy options.

For their first 20 years, the official Dietary Guidelines for Americans generally recommended eating “a variety of foods.” In the new millennium, they started getting more precise, specifying a diversity of healthier foods, as seen below and at 3:30 in my video.

A pair of Harvard and New York University dietitians concluded in their paper “Dietary Variety: An Overlooked Strategy for Obesity and Chronic Disease Control”: “Choose and prepare a greater variety of plant-based foods,” recognizing that a greater variety of less healthy options could be counterproductive.

So, how can we respond to industry attempts to lure us into temptation by turning our natural biological drives against us? Should we never eat really delicious food? No, but it may help to recognize the effects hyperpalatable foods can have on hijacking our appetites and undermining our body’s better judgment. We can also use some of those same primitive impulses to our advantage by minimizing our choices of the bad and diversifying our choices of the good. In How Not to Diet, I call this “Meatball Monotony and Veggie Variety.” Try picking out a new fruit or vegetable every time you shop.

In my own family’s home, we always have a wide array of healthy snacks on hand to entice the finickiest of tastes. Fruit baskets and vegetable platters, with their contrasting collage of colors and shapes, beat out boring bowls of a single fruit because they make you want to mix it up and try a little of each. And with different healthy dipping sauces, the possibilities are endless.

August 28, 2025

Dietary Diversity and Overeating

Big Food uses our hard-wired drive for dietary diversity against us.

How did we evolve to solve the daunting task of selecting a diet that supplies all the essential nutrients? Dietary diversity. By eating a variety of foods, we increase our chances of hitting all the bases. If we only ate for pleasure, we might just stick with our favorite food to the exclusion of all others, but we have an innate tendency to switch things up.

Researchers found that study participants ended up eating more calories when provided with three different yogurt flavors than just one, even if that one is the chosen favorite. So, variation can trump sensation. They don’t call it the spice of life for nothing.

It appears to be something we’re born with. Studies on newly weaned infants dating back nearly a century show that babies naturally choose a variety of foods even over their preferred food. This tendency seems to be driven by a phenomenon known as sensory-specific satiety.

Researchers found that, “within 2 minutes after eating the test meal, the pleasantness of the taste, smell, texture, and appearance of the eaten food decreased significantly more than for the uneaten foods.” Think about how the first bite of chocolate tastes better than the last bite. Our body tires of the same sensations and seeks out novelty by rekindling our appetite every time we’re presented with new foods. This helps explain the “dessert effect,” where we can be stuffed to the gills but gain a second wind when dessert arrives. What was adaptive for our ancient ancestors to maintain nutritional adequacy may be maladaptive in the age of obesity.

When study participants ate a “varied four-course meal,” they consumed 60 percent more calories than those given the same food for each course. It’s not only that we get bored; our body has a different physiological reaction.

As you can see below and at 2:13 in my video How Variation Can Trump Sensation and Lead to Overeating, researchers gave people a squirt of lemon juice, and their salivary glands responded with a squirt of saliva. But when they were given lemon juice ten times in a row, they salivated less and less each time. When they got the same amount of lime juice, though, their salivation jumped right back up. We’re hard-wired to respond differently to new foods.

Whether foods are on the same plate, are at the same meal, or are even eaten on subsequent days, the greater the variety, the more we tend to eat. When kids had the same mac and cheese dinner five days in a row, they ended up eating hundreds fewer calories by the fifth day, compared to kids who got a variety of different meals, as you can see below and at 2:35 in my video.

Even just switching the shape of food can lead to overeating. When kids had a second bowl of mac and cheese, they ate significantly more when the noodles were changed from elbow macaroni to spirals. People allegedly eat up to 77 percent more M&Ms if they’re presented with ten different colors instead of seven, even though all the colors taste the same. “Thus, it is clear that the greater the differences between foods, the greater the enhancement of intake.” Alternating between sweet and savory foods can have a particularly appetite-stimulating effect. Do you see how, in this way, adding a diet soda, for instance, to a fast-food meal can lead to overconsumption?

The staggering array of modern food choices may be one of the factors conspiring to undermine our appetite control. There are now tens of thousands of different foods being sold.

The so-called supermarket diet is one of the most successful ways to make rats fat. Researchers tried high-calorie food pellets, but the rats just ate less to compensate. The researchers “therefore used a more extreme diet…[and] fed rats an assortment of palatable foods purchased at a nearby supermarket,” including such fare as cookies, candy, bacon, and cheese, and the animals ballooned. The human equivalent to maximize experimental weight gain has been dubbed the cafeteria diet.

It’s kind of the opposite of the original food dispensing device I’ve talked about before. Instead of all-you-can-eat bland liquid, researchers offered free all-you-can-eat access to elaborate vending machines stocked with 40 trays with a dizzying array of foods, like pastries and French fries. Participants found it impossible to maintain energy balance, consistently consuming more than 120 percent of their calorie requirements.

Our understanding of sensory-specific satiety can be used to help people gain weight, but how can we use it to our advantage? For example, would limiting the variety of unhealthy snacks help people lose weight? Two randomized controlled trials made the attempt and failed to show significantly more weight loss with the reduced-variety diet, but they also failed to get people to change their diets very much. Just cutting down on one or two snack types seems insufficient to make much of a difference, as seen below and at 4:44 in my video. A more drastic change may be needed, which we’ll cover next.

August 26, 2025

Hijacking Our Appetites

I debunk the myth of protein as the most satiating macronutrient.

The importance of satiety is underscored by a rare genetic condition known as Prader-Willi syndrome. Children with the disorder are born with impaired signaling between their digestive system and their brain, so they don’t know when they’re full. “Because no sensation of satiety tells them to stop eating or alerts their body to throw up, they can accidentally consume enough in a single binge to fatally rupture their stomach.” Without satiety, food can be “a death sentence.”

Protein is often described as the most satiating macronutrient. People tend to report feeling fuller after eating a protein-rich meal, compared to a carbohydrate- or fat-rich one. The question is: Does that feeling of fullness last? From a weight-loss standpoint, satiety ratings only matter if they end up cutting down on subsequent calorie intake, and even a review funded by the meat, dairy, and egg industries acknowledges that this does not seem to be the case for protein. Protein eaten at one meal doesn’t tend to reduce calorie intake hours later.

Fiber-rich foods, on the other hand, can suppress appetite and reduce subsequent meal intake more than ten hours after consumption—even the next day—because their site of action is 20 feet down in the lower intestine. Remember the ileal brake from my Evidence-Based Weight Loss lecture? When researchers secretly infused nutrients into the end of the small intestine, study participants spontaneously ate as many as hundreds fewer calories at a meal. Our brain gets the signal that we are full, from head to tail.

We were built for gluttony. “It is a wonderful instinct, developed over millions of years, for times of scarcity.” Stumbling across a rare bounty, those who could fill themselves the most to build up the greatest reserves would be more likely to pass along their genes. So, we are hard-wired not just to eat until our stomach is full, but until our entire digestive tract is occupied. Only when our brain senses food all the way down at the end does our appetite fully dial down.

Fiber-depleted foods get rapidly absorbed early on, though, so much of what we eat never makes it down to the lower gut. As such, if our diet is low in fiber, no wonder we’re constantly hungry and overeating; our brain keeps waiting for the food that never arrives. That’s why even people who undergo stomach-stapling surgeries that leave them with a tiny two-tablespoon-sized stomach pouch can still eat enough to regain most of the weight they initially lost. Without sufficient fiber to transport nutrients down our digestive tract, we may never be fully satiated. But, as I described in my last video, one of the most successful experimental weight-loss interventions ever reported in the medical literature involved no fiber at all, as you can see here and at 2:47 in my video Foods Designed to Hijack Our Appetites.

At first glance, it might seem obvious that removing the pleasurable aspects of eating would cause people to eat less, but remember, that’s not what happened. The lean participants continued to eat the same amount, taking in thousands of calories a day of the bland goop. Only those who were obese went from eating thousands of calories a day down to hundreds, as shown below and at 3:22 in my video. And, again, this happened inadvertently without them apparently even feeling a difference. Only after eating was disconnected from the reward was the body able to start rapidly reining in the weight.

We appear to have two separate appetite control systems: “the homeostatic and hedonic pathways.” The homeostatic pathway maintains our calorie balance by making us hungry when energy reserves are low and abolishes our appetite when energy reserves are high. “In contrast, hedonic or reward-based regulation can override the homeostatic pathway” in the face of highly palatable foods. This makes total sense from an evolutionary standpoint. In the rare situations in our ancestral history when we’d stumble across some calorie-dense food, like a cache of unguarded honey, it would make sense for our hedonic drive to jump into the driver’s seat to consume the scarce commodity. Even if we didn’t need the extra calories at the time, our body wouldn’t want us to pass up that rare opportunity. Such opportunities aren’t so rare anymore, though. With sugary, fatty foods around every corner, our hedonic drive may end up in perpetual control, overwhelming the intuitive wisdom of our bodies.

So, what’s the answer? Never eat really tasty food? No, but it may help to recognize the effects hyperpalatable foods can have on hijacking our appetites and undermining our body’s better judgment.

Ironically, some researchers have suggested a counterbalancing evolutionary strategy for combating the lure of artificially concentrated calories. Just as pleasure can overrule our appetite regulation, so can pain. "Conditioned food aversions" are when we avoid foods that made us sick in the past. That may just seem like common sense, but it is actually a deep-seated evolutionary drive that can defy rationality. Even if we know for a fact a particular food was not the cause of an episode of nausea and vomiting, our body can inextricably tie the two together. This happens, for example, with cancer patients undergoing chemotherapy. Consoling themselves with a favorite treat before treatment can lead to an aversion to their favorite food if their body tries to connect the dots. That's why oncologists may advise the "scapegoat strategy": Before treatment, eat only foods you'd be okay never wanting to eat again.

Researchers have experimented with inducing food aversions by having people taste something before spinning them in a rotating chair to cause motion sickness. Eureka! A group of psychologists suggested: “A possible strategy for encouraging people to eat less unhealthy food is to make them sick of the food, by making them sick from the food.” What about using disgust to promote eating more healthfully? Children as young as two-and-a-half years old will throw out a piece of previously preferred candy scooped out of the bottom of a clean toilet.

Thankfully, there’s a way to exploit our instinctual drives without resorting to revulsion, aversion, or bland food, which we’ll explore next.

August 21, 2025

Lose 200 Lbs Without Feeling Hungry

I dive into one of the most fascinating series of studies I’ve ever come across.

Anyone can lose weight by eating less food. Anyone can be starved thin. Starvation diets are rarely sustainable, though, since hunger pangs drive us to eat. We feel unsatisfied and unsatiated on low-calorie diets. We do have some level of voluntary control, of course, but our deep-seated instinctual drives may win out in the end.

For example, we can consciously hold our breath. Try it right now. How long can you go before your body’s self-preservation mechanisms take over and overwhelm your deliberate intent not to breathe? Our body has our best interests at heart and is too smart to allow us to suffocate ourselves—or starve ourselves, for that matter. If our body were really that smart, though, how could it let us become obese? Why doesn’t our body realize when we’re too heavy and allow us the leeway to slim down? Maybe our body is very aware and actively trying to help, but we’re somehow undermining those efforts. How could we test this theory to see if that’s true?

So many variables go into choosing what we eat and how much. "The eating process involves an intricate mixture of physiologic, psychologic, cultural, and esthetic considerations." To strip all that away and stick just to the physiologic variable, Columbia University researchers designed a series of famous experiments using a "food dispensing device." The term "food" is used very loosely here. As you can see at 2:02 in my video 200-Pound Weight Loss Without Hunger, the researchers' feeding machine was a tube hooked up to a pump that delivered a mouthful of bland liquid formula every time a button was pushed. Research participants were instructed to eat as much or as little as they wanted at any time. In this way, eating was reduced to just the rudimentary hunger drive. Without the usual trappings of "sociability," meal ceremony, and the pleasures of the palate, how much would people be driven to eat?

Put a normal-weight person in this scenario, and something remarkable happens. Day after day, week after week, with nothing more than their hunger to guide them, they eat exactly as much as they need, perfectly maintaining their weight, as shown below and at 2:36 in my video.

They needed about 3,000 calories a day, and that’s just how much they unknowingly gave themselves. Their body just intuitively seemed to know how many times to press that button, as seen here and at 2:48 in my video.

Put a person with obesity in that same scenario, and something even more remarkable happens. Driven by hunger alone, with the enjoyment of eating stripped away, they wildly undershoot, giving themselves a mere 275 calories a day, total. They could eat as much as they wanted, but they just weren’t hungry. It’s as if their body knew how massively overweight they were, so it dialed down their natural hunger drive to almost nothing. One participant started the study at 400 pounds and steadily lost weight. After 252 days of sipping the bland liquid, he lost 200 pounds, as you can see here and at 3:35 in my video.

This groundbreaking discovery was initially interpreted to mean that obesity is not caused by some sort of metabolic disturbance that drives people to overeat. In fact, the study suggested quite the opposite. Instead, overeating appeared to be a function of the meaning people attached to food, “aside from its use as fuel,” whether as a source of pleasure or perhaps as relief from boredom or stress. In this way, obesity seemed more psychological than physical. Subsequent experiments with the feeding machine, though, flipped such conceptions on their head once again.

When researchers covertly doubled the calorie concentration of the formula given to lean study participants, they unconsciously cut their consumption in half to continue to perfectly maintain their weight, as seen here and at 4:24 in my video. Their body somehow detected the change in calorie load and sent signals to the brain to press the button half as often to compensate. Amazing!

When the same was done with people with obesity, though, nothing changed. They continued to drastically undereat just as much as before. Their body seems incapable of detecting or reacting to the change in calorie load, suggesting a physiological inability to regulate intake, as shown below and at 4:40 in my video.

Might the brains of persons with obesity somehow be insensitive to internal satiety signals? We don't know if it's cause or effect. Maybe that's why they're obese in the first place, or maybe the body knows how obese it is and shuts down its hunger drive regardless of the calorie concentration. Indeed, the participants with obesity continued to steadily lose weight eating out of the machine, regardless of the calorie concentration and the food being dispensed, as you can see here and at 5:19 in my video.

It would be interesting to see if they regained the ability to respond to changing calorie intake once they reached their ideal weight. Regardless, what can we apply from these remarkable studies to facilitate weight loss out in the real world? We’ll explore just that question next.

August 19, 2025

What Is “Pine Mouth Syndrome”?

Why do some pine nuts cause a bad taste in your mouth that can last for weeks?

The reason I make pesto with walnuts instead of the more traditional pine nuts isn’t only because walnuts are probably healthier with 20 times more polyphenols, but also because of a mysterious phenomenon known as PMS. Not that PMS. Pine mouth syndrome is characterized by what has become my favorite word of the week: cacogeusia, meaning a bad taste in your mouth. You can get cacogeusia from heavy metal toxicity, seafood toxins, certain nutritional and neurologic disorders, or the wrong kind of pine nuts. “Termed ‘Pine Mouth’ by the public, cases present in a roughly similar fashion: a persistent metallic or bitter taste beginning 1–3 days following ingestion of pine nuts lasting for up to 2 weeks.”

As I discuss in my video Pine Mouth Syndrome: Prolonged Bitter Taste from Certain Pine Nuts, thousands of cases have been reported, and it doesn’t seem to matter if the pine nuts are raw or cooked. Could the cause be an unidentified toxin present in some varieties of non-edible pine nuts? Indeed, “out of more than 100 species of the Pinus genus, [only] 30 are considered to be edible by the Food and Agriculture Organisation of the United Nations.”

Researchers analyzed pine nut samples from consumers who had fallen ill and found that, indeed, they all contained nuts from Chinese white pine, which is not reported to be edible. That tree is typically used only for lumber. You can see photos of inedible and edible pine nuts below and at 1:36 in my video.

More photos can be seen here and at 1:40.

We can't be sure it's the Chinese white pine nuts, though, until we put it to the test. Researchers gave study participants six to eight Chinese white pine nuts. Most had never heard of pine mouth syndrome, and they all developed symptoms. We still don't know exactly what it is in those nuts that causes such a bizarre reaction, but we do know to stay away from those kinds of pine nuts.

So, what kinds of pine nuts are on shelves in the United States? All kinds, apparently, “including those associated with pine mouth.” You can see more examples below and at 2:19 in my video.

Unsurprisingly, hundreds of cases of PMS have been reported in the United States. Most of the implicated nuts “were predominantly reported to be labeled from or originating from Asia, and in most cases China,” as seen here and at 2:30 in my video.

The European Union demanded that China stop sending it toxic nuts, which China did beginning in 2011. "This export restriction likely resulted in a global export restriction of these species to the US as well," given the decline in cases going into 2012, as shown below and at 2:47.

Rare cases still occur, though, as evidenced by an active Facebook group entitled “Damn you, Pine Nuts.” The primary reason I made this video is to allay fears should this ever happen to you. “There are no proven therapies for PMS. The only treatment is to cease ingesting implicated nuts and to wait for symptoms to abate.” Thankfully, pine mouth syndrome appears to be benign and goes away on its own.

August 14, 2025

Are Raw Mushrooms Safe to Eat?

Microwaving is probably the most efficient way to reduce agaritine levels in fresh mushrooms.

There is a toxin in plain white button mushrooms called agaritine, which may be carcinogenic. Plain white button mushrooms grow to be cremini (brown) mushrooms, and cremini mushrooms grow to be portobello mushrooms. They’re all the very same mushroom, similar to how green bell peppers are just unripe red bell peppers. The amount of agaritine in these mushrooms can be reduced through cooking: Frying, microwaving, boiling, and even just freezing and thawing lower the levels. “It is therefore recommended to process/cook Button Mushroom before consumption,” something I noted in a video that’s now more than a decade old.

However, as shown below and at 0:51 in my video Is It Safe to Eat Raw Mushrooms?, if you look at the various cooking methods, the agaritine in these mushrooms isn’t completely destroyed. Take dry baking, for example: Baking for ten minutes at about 400° Fahrenheit (“a process similar to pizza baking”) only cuts the agaritine levels by about a quarter, so 77 percent still remains.

Boiling looks better, appearing to wipe out more than half the toxin after just five minutes, but the agaritine isn't actually eliminated. Instead, it's just transferred to the cooking water. So, levels within the mushrooms drop by about half at five minutes and by 90 percent after an hour, but that's mostly because the agaritine is leaching into the broth. So, if you're making soup, for instance, five minutes of boiling is no more effective than dry baking for ten minutes, and, even after an hour, about half still remains.

Frying for five to ten minutes eliminates a lot of agaritine, but microwaving is not only a more healthful way to cook; it works even better, as you can see here and at 1:39 in my video. Researchers found that just one minute in the microwave "reduced the agaritine content of the mushrooms by 65%," and only 30 seconds of microwaving eliminated more than 50 percent. So, microwaving is probably the easiest way to reduce agaritine levels in fresh mushrooms.

My technique is to add dried mushrooms into the pasta water when I’m making spaghetti. Between the reductions of 20 percent or so from the drying and 60 percent or so from boiling for ten minutes and straining, more than 90 percent of agaritine is eliminated.

Should we be concerned about the residual agaritine? According to a review funded by the mushroom industry, not at all. "The available evidence to date suggests that agaritine from consumption of…mushrooms poses no known toxicological risk to healthy humans." The researchers acknowledge that agaritine is considered a potential carcinogen in mice, but that data would then need to be extrapolated to human health outcomes.

The Swiss Institute of Technology, for example, estimated that the average mushroom consumption in the country would be expected to cause about two cases of cancer per one hundred thousand people. That is similar to consumption in the United States, as seen below and at 3:00 in my video, so "one could theoretically expect about 20 cancer deaths per 1 x 10⁶ [one million] lives from mushroom consumption." In comparison, typically, with a new chemical, pesticide, or food additive, we'd like to see the cancer risk lower than one in a million. "By this approach, the average mushroom consumption of Switzerland is 20-fold too high to be acceptable. To remain under the limit" (and keep risk down to one in a million) "'mushroom lovers' would have to restrict their consumption of mushrooms to one 50-g serving every 250 days!" That's about a half-cup serving once in just over eight months. To put that into perspective, even if you were eating a single serving every single day, the resulting additional cancer risk would only be about one in ten thousand. "Put another way, if 10,000 people consumed a mushroom meal daily for 70 years, then in addition to the 3000 cancer cases arising from other factors, one more case could be attributed to consuming mushrooms."
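The first part of the Swiss estimate is straightforward arithmetic, and it can be checked with a few lines of Python. This is only a sketch. It assumes, as the extrapolation from mice implicitly does, that added cancer risk rises in direct proportion to how much mushroom is eaten. The roughly 4 grams a day of average intake it derives is a consequence of that assumption, not a figure reported in the study, and the variable names are purely illustrative.

```python
# Sketch of the Swiss risk arithmetic, ASSUMING added cancer risk scales
# linearly with average mushroom intake. The implied average intake is a
# derived number under that assumption, not one reported in the source.

ESTIMATED_RISK = 2 / 100_000      # ~2 cancer cases per 100,000 people
ACCEPTABLE_RISK = 1 / 1_000_000   # usual target: under 1 in a million
SERVING_G = 50                    # one 50-g serving...
INTERVAL_DAYS = 250               # ...every 250 days keeps risk at the target


def per_million(risk: float) -> float:
    """Express a lifetime risk as cases per million people."""
    return risk * 1_000_000


# How far the estimated risk overshoots the one-in-a-million target.
excess_factor = ESTIMATED_RISK / ACCEPTABLE_RISK

# Daily intake that keeps risk at the target (one serving per 250 days).
allowed_g_per_day = SERVING_G / INTERVAL_DAYS

# Under linearity, current average intake is the allowed intake times the overshoot.
implied_avg_g_per_day = allowed_g_per_day * excess_factor

# The 250-day interval expressed in average-length months.
interval_months = INTERVAL_DAYS / (365 / 12)

print(f"{per_million(ESTIMATED_RISK):.0f} cases per million")
print(f"{excess_factor:.0f}-fold above the one-in-a-million target")
print(f"allowed intake: {allowed_g_per_day:.1f} g/day")
print(f"implied average intake: {implied_avg_g_per_day:.1f} g/day")
print(f"one serving every {interval_months:.1f} months")
```

Two cases per 100,000 is the same as 20 per million, which is where the "20-fold too high" comes from, and 250 days works out to a little over eight months.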

But, again, this is all based “on the presumption that results in such mouse models are equally valid in humans.” Indeed, this is all just extrapolating from mice data. What we need is a huge prospective study to examine the association between mushroom consumption and cancer risk in humans, but there weren’t any such studies—until now.

Researchers titled their paper: “Mushroom Consumption and Risk of Total and Site-Specific Cancer in Two Large U.S. [Harvard] Prospective Cohorts” and found “no association between mushroom consumption and total and site-specific cancers in U.S. women and men.”

Eating raw or undercooked shiitake mushrooms can cause something else, though: shiitake mushroom flagellate dermatitis. Flagellate as in flagellation, whipping, flogging. Below and at 4:48 in my video, you can see a rash that makes it look as if you’ve been whipped.

Here and at 4:58 in my video is another photo of the rash. It’s thought to be caused by a compound in shiitake mushrooms called lentinan, but because heat denatures it, it only seems to be a problem with raw or undercooked mushrooms.

Now, it is rare. Only about 1 in 50 people are even susceptible, and it goes away on its own in a week or two. Interestingly, it can strike as many as ten days after eating shiitake mushrooms, which is why people may not make the connection. One unfortunate man suffered on and off for 16 years before a diagnosis. Hopefully, a lot of doctors will watch this video, and if they ever see a rash like this, they’ll tell their patients to cook their shiitakes.

August 12, 2025

Why I Don’t Recommend Moringa Leaf Powder

“Clearly, in spite of the widely held ‘belief’ in the health benefits of M. oleifera [moringa], the interest of the international biomedical community in the medicinal potential of this plant has been rather tepid.” In fact, it has been “spectacularly hesitant in exploring its nutritional and medicinal potential. This lukewarm attitude is curious, as other ‘superfoods’ such as garlic and green tea have enjoyed better reception,” but those have more scientific support. There are thousands of human studies on garlic and more than ten thousand on green tea, but only a few hundred on moringa.

The most promising appears to be moringa's effects on blood sugar control. Below and at 0:55 in my video The Efficacy and Side Effects of Moringa Leaf Powder, you can see the blood sugar spikes after study participants ate about five control cookies each (top line labeled "a"), compared with cookies made with about two teaspoons of moringa leaf powder mixed into the batter (bottom line labeled "b"). Even with the same amount of sugar and carbohydrates as the control cookies, the moringa-containing cookies resulted in a dampening of the surge in blood sugar.

Researchers found that drinking just one or two cups of moringa leaf tea before a sugar challenge "suppressed the elevation in blood glucose [sugar] in all cases compared to controls that did not receive the tea initially" and instead drank plain water. As you can see here and at 1:16 in my video, drinking moringa tea with sugar dampened the blood sugar spike 30 minutes after consumption, compared with the same amount of sugar taken without moringa tea. It's no wonder that moringa is used in traditional medicine practice for diabetes, but we don't really know if it can help until we put it to the test.

People with diabetes were given about three-quarters of a teaspoon of moringa leaf powder every day for 12 weeks and had significant improvements in measures of inflammation and long-term blood sugar control. The researchers called it a “quasi-experimental study” because there was no control group. They just took measurements before and after the study participants took moringa powder, and we know that simply being in a dietary study can lead some to eat more healthfully, whether consciously or unconsciously, so we don’t know what effect the moringa itself had. However, even in a moringa study with a control group, it’s not clear if the participants were randomly allocated. The researchers didn’t even specify how much moringa people were given—just that they took “two tablets daily with one tablet each after breakfast and dinner,” but what does “one tablet” mean? There was no significant improvement in this study, but perhaps the participants weren’t given enough moringa. Another study used a tablespoon a day and not only saw a significant drop in fasting blood sugars, but a significant drop in LDL cholesterol as well, as seen below and at 2:27 in my video.

Two teaspoons of moringa a day didn't seem to help, but what about adding a third teaspoon, making it a whole tablespoon? Apparently not, since, finally, a randomized, placebo-controlled study using one tablespoon of moringa a day failed to show any benefit on blood sugar control in people with type 2 diabetes.

So, we're left with a couple of studies showing potential, but most failing to show benefit. Why not just give moringa a try to see for yourself? That's a legitimate course of action in the face of conflicting data when we're talking about safe, simple, side-effect-free solutions, but is moringa safe? Probably not during pregnancy, as "about 80% of women folk" in some areas of the world use it to abort pregnancies, and its effectiveness for that purpose has been confirmed (at least in rats), though breastfeeding women may get a boost of about half a cup in milk production based on six randomized, blinded, placebo-controlled clinical trials.

Just because moringa has “long been used in traditional medicine” does not in any way prove that the plant is safe to consume. A lot of horribly toxic substances, like mercury and lead, have been used in traditional medical systems the world over, but at least “no major harmful effects of M. oleifera [moringa]…have been reported by the scientific community.” More accurately, “no adverse effects were reported in any of the human studies that have been conducted to date.” In other words, no harmful effects had been reported until now.

Stevens-Johnson syndrome (SJS) is probably the most dreaded drug side effect, "a rare but potentially fatal condition characterized by…epidermal detachment and mucous membrane erosions." In other words, your skin may fall off. Fourteen hours after consuming moringa, a man broke out in a rash. The same thing had happened three months earlier, the last time he had eaten moringa, causing him to suffer "extensive mucocutaneous lesions with blister formation over face, mouth, chest, abdomen, and genitalia." "This case report suggests that consumption of Moringa leaf is better avoided by individuals who are at risk of developing SJS." Although SJS can happen to anyone, HIV infection is a risk factor.

My take on moringa is that the evidence of benefit isn’t compelling enough to justify shopping online for something special when you can get healthy vegetables in your local market, like broccoli, which has yet to be implicated in any genital blistering.